
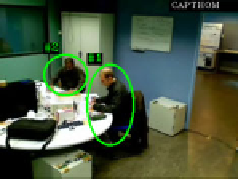

Fig. 1. Illustration of tracking result with a partial occlusion. First row: input images with interest points associated with each object; second row: tracking result.

To reduce the search space of the classifiers that localize regions of interest in the image, we added a change detection step based on background subtraction. We chose to model each pixel in the background by a single Gaussian distribution. Detection is then achieved by simply thresholding the probability density function. This simple model offers a good compromise between detection quality, computation time and memory requirements [9,10]. The background model is updated at three different levels: the pixel level, where each pixel is updated with a temporal filter so that long-term variations of the background are taken into account; the image level, to deal with global and sudden variations; and the object level, to deal with the entrance or removal of static objects. This method is called Viola [8]+BS afterwards.
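The per-pixel Gaussian model and its pixel-level update can be sketched as follows. This is a minimal illustration and not the authors' implementation: the class name `GaussianBackground`, the learning rate `alpha`, the threshold `k`, the initial variance and the variance floor are all assumptions chosen for the example. Only the pixel-level update is shown; the image-level and object-level updates are omitted. Frames are plain nested lists of grey levels so that the sketch needs no external library.

```python
class GaussianBackground:
    """Per-pixel single-Gaussian background model (illustrative sketch)."""

    def __init__(self, first_frame, init_var=25.0, alpha=0.05, k=2.5):
        # The mean starts at the first frame; the initial variance is an
        # assumed default, not a value taken from the text.
        self.mean = [[float(v) for v in row] for row in first_frame]
        self.var = [[init_var for _ in row] for row in first_frame]
        self.alpha = alpha  # learning rate of the temporal filter (assumed)
        self.k = k          # threshold in standard deviations (assumed)

    def apply(self, frame):
        """Return a 0/1 foreground mask and update the background pixels."""
        mask = []
        for y, row in enumerate(frame):
            mask_row = []
            for x, value in enumerate(row):
                mu, var = self.mean[y][x], self.var[y][x]
                diff = value - mu
                # For a given variance, thresholding the Gaussian density
                # amounts to thresholding the normalized distance
                # |value - mu| / sigma; that form is used here.
                foreground = diff * diff > self.k * self.k * var
                mask_row.append(1 if foreground else 0)
                if not foreground:
                    # Pixel-level update: exponential temporal filter on the
                    # mean and variance, applied to background pixels only.
                    self.mean[y][x] = mu + self.alpha * diff
                    new_var = var + self.alpha * (diff * diff - var)
                    self.var[y][x] = max(new_var, 1.0)  # assumed floor
            mask.append(mask_row)
        return mask
```

Updating only the pixels classified as background keeps moving objects from being absorbed into the model too quickly; handling objects that become static is the role the text assigns to the object-level update.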

Finally, we developed a method that additionally uses temporal information. It brings the advantages of tools classically dedicated to object detection in still images into a video analysis framework. We use video analysis to interpret the content of a scene without any assumption, while the nature of the objects is determined by statistical tools derived from object detection in images. We first use background subtraction to detect objects of interest. As each detected connected component potentially corresponds to one person, each blob is tracked independently. Each tracked object is characterized by a set of points of interest. These points are tracked frame by frame. The position of these points relative to the connected components makes it possible to match tracked objects with detected blobs. The points of interest are tracked with the pyramidal implementation of the Lucas and Kanade tracker [11,12]. The nature of these tracked objects is then determined using the previously described object recognition method in the video analysis framework. Figure 1 presents an example of a tracking result with partial occlusion. This method is called CAPTHOM in the following.
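One way to picture the matching of tracked objects with detected blobs is a simple vote: each tracked point votes for the connected component it falls in, and the object is assigned to the component with the most votes. The sketch below assumes this majority-vote rule, which the text does not specify; the function name `match_objects_to_blobs` and the data layout (a label image as nested lists with 0 for background, and points as `(x, y)` pairs already propagated to the current frame by the tracker) are hypothetical.

```python
from collections import Counter


def match_objects_to_blobs(labels, tracked_points):
    """Assign each tracked object to the blob containing most of its points.

    labels: 2D list of ints, 0 = background, n > 0 = connected component n.
    tracked_points: dict mapping an object id to a list of (x, y) positions.
    Returns a dict mapping each object id to a blob label, or None when no
    point of the object falls inside a blob.
    """
    height, width = len(labels), len(labels[0])
    matches = {}
    for obj_id, points in tracked_points.items():
        votes = Counter()
        for x, y in points:
            xi, yi = int(round(x)), int(round(y))
            inside = 0 <= yi < height and 0 <= xi < width
            if inside and labels[yi][xi] != 0:
                votes[labels[yi][xi]] += 1
        matches[obj_id] = votes.most_common(1)[0][0] if votes else None
    return matches
```

With this rule, two objects that map to the same blob label indicate that their silhouettes have merged into one connected component, which is the kind of situation a partial occlusion produces; keeping a separate point set per object is what allows them to be told apart.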

For more information about the three considered methods, the interested reader can refer to [13].
