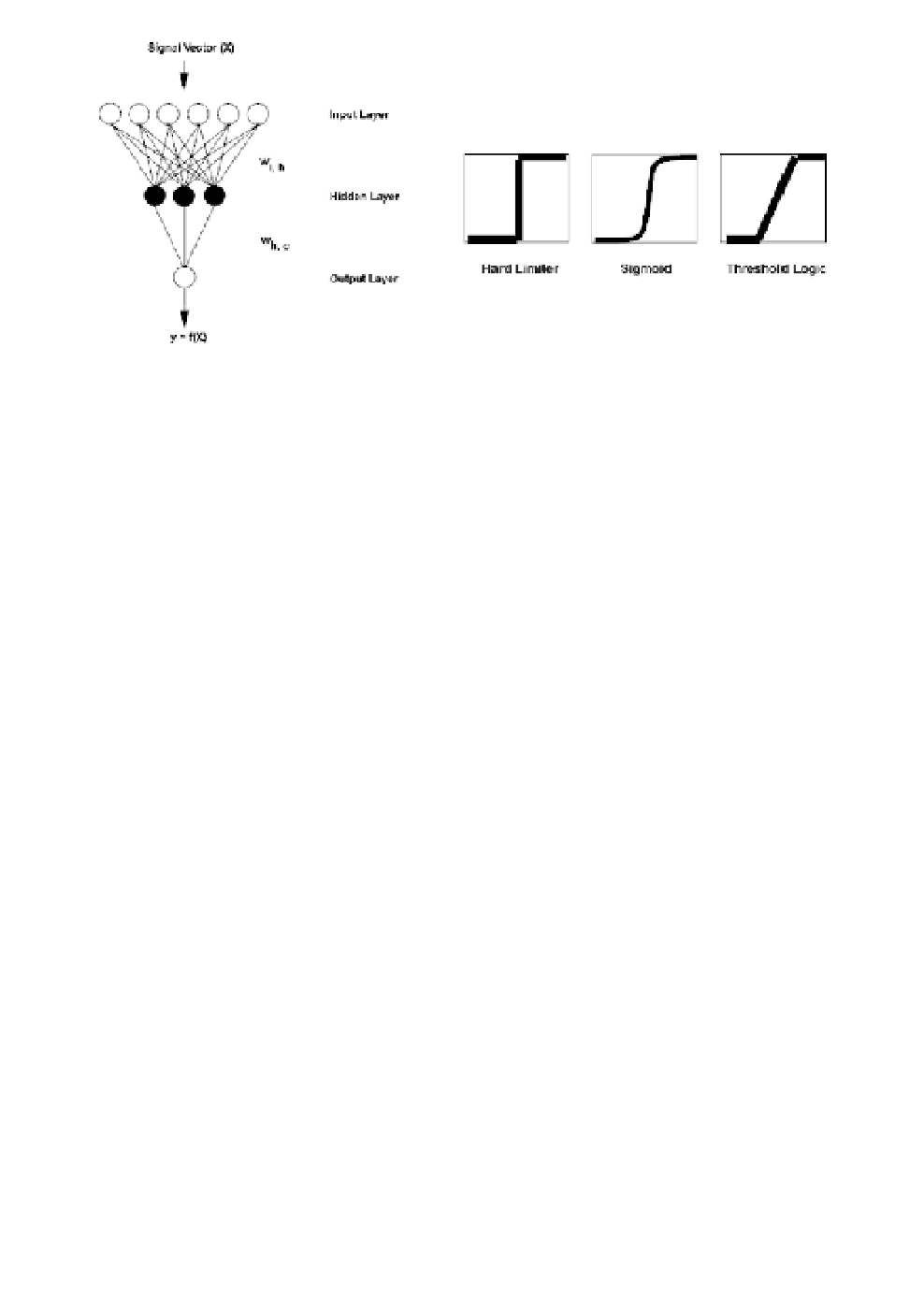

Fig. 2 is a schematic representation of a feed-forward MLP. Computational nodes (neurons) are represented as circles with weighted inputs and output shown as arrows. Also shown are the three common types of nonlinearity that can be used by the neurons to determine output: hard limiter, sigmoid and threshold.
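The three nonlinearities named in the caption can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the hard limiter is assumed to output -1 or +1, and "threshold" is assumed to mean a threshold-logic (saturating ramp) element clipped to the range 0 to 1; the figure itself may use different ranges.

```python
import math


def hard_limiter(net: float) -> float:
    """Step nonlinearity (assumed range): +1 for net >= 0, otherwise -1."""
    return 1.0 if net >= 0.0 else -1.0


def threshold_logic(net: float) -> float:
    """Saturating ramp (assumed reading of 'threshold'): clip net to [0, 1]."""
    return min(1.0, max(0.0, net))


def sigmoid(net: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large negative net."""
    if net >= 0.0:
        return 1.0 / (1.0 + math.exp(-net))
    z = math.exp(net)
    return z / (1.0 + z)


def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through a nonlinearity."""
    net = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(net)


# Same neuron, three different nonlinearities.
example_inputs = [1.0, 2.0]
example_weights = [0.5, -0.25]  # weighted sum is exactly 0.0
outputs = {
    "hard_limiter": neuron_output(example_inputs, example_weights, 0.0, hard_limiter),
    "threshold": neuron_output(example_inputs, example_weights, 0.0, threshold_logic),
    "sigmoid": neuron_output(example_inputs, example_weights, 0.0, sigmoid),
}
```

The sigmoid is the only one of the three that is smooth everywhere, which is why it is the usual choice when the weights are to be adjusted by gradient-based training.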

architecture, the multilayer perceptron (MLP) (Fig. 2), neurons are classified into three types: 1) input neurons, responsible for receiving and passing the signal vector for mathematical processing; 2) hidden neurons, in which the information received from the input layer undergoes further processing; and 3) output neurons, which produce the system's output or result. The neurons are arranged into layers with corresponding names.
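The flow of a signal vector through the input, hidden and output layers can be illustrated with a minimal forward pass. This is a sketch under assumptions, not the architecture of Fig. 2: the layer sizes (3 inputs, 4 hidden neurons, 2 outputs), the random weights, the sigmoid nonlinearity and all function names are chosen here purely for illustration.

```python
import math
import random


def sigmoid(net: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large negative net."""
    if net >= 0.0:
        return 1.0 / (1.0 + math.exp(-net))
    z = math.exp(net)
    return z / (1.0 + z)


def layer_forward(inputs, weights, biases):
    """Compute one layer's outputs; weights[j][i] links input i to neuron j."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def mlp_forward(signal, hidden_w, hidden_b, output_w, output_b):
    """Input neurons pass the signal on unchanged; hidden and output neurons process it."""
    hidden = layer_forward(signal, hidden_w, hidden_b)
    return layer_forward(hidden, output_w, output_b)


def random_layer(n_in, n_out, rng):
    """Illustrative initialisation: uniform weights in [-1, 1], zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


rng = random.Random(0)  # fixed seed so the sketch is repeatable
hidden_w, hidden_b = random_layer(3, 4, rng)
output_w, output_b = random_layer(4, 2, rng)
result = mlp_forward([0.2, -0.5, 1.0], hidden_w, hidden_b, output_w, output_b)
```

Here `result` holds one value per output neuron. With untrained random weights those values carry no meaning; training is what adjusts the weights so that the outputs become useful.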

Training the ANN. The network can be trained using either of two types of learning techniques: supervised learning or unsupervised learning. In the supervised learning paradigm, the system is presented with a set of input vectors that are arbitrarily matched or paired to a set of corresponding output vectors. Once the learning process is over, the trained ANN generates outputs to categorize or approximate, depending on the nature of the task, any given new input vectors. In this type of learning, the ANN is trained with a “teacher.” Figure 1(B) shows the basic scheme used for this type of learning. In the unsupervised learning paradigm (training “without a teacher”), the ANN is presented with only input vectors. The ANN then becomes tuned to the statistical regularities in the input data and