Hardware Reference

In-Depth Information

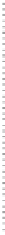

To better understand the rejection strategy, consider the example illustrated in Figure

9.13, where secondary deadlines are drawn with dashed arrows.

As can be easily

verified, before the arrival of

J

1

the task set

{

J

2

,

J

3

,

J

4

,

J

5

}

is strictly feasible.

V

i

LE

i

i

J

1

10

−1

0

J

2

6

1

0

J

3

3

2

1

J

4

8

1

0

J

2

4

3

6

0

2

4

6

8

10

12

14

16

18

20

Figure 9.13

Example of overload in a task set with deadline tolerances.

At time

t

=4, when

J

1

arrives, an overload occurs because both

J

3

and

J

5

would ter-

minate after their secondary deadline. The least value task able to resolve the overload

is

J

2

. In fact,

J

5

, that has the smallest value, cannot be rejected because, having a long

primary deadline, it would not advance the execution of

J

3

. Also, rejecting

J

3

would

not solve the overload, since its residual computation time is not sufficient to advance

the completion of

J

5

before the deadline.

A more efficient rejection strategy could consider rejecting more than one task to

minimize the cumulative value of the rejected tasks. For example, rejecting

J

3

and

J

5

is better than rejecting

J

2

. However, minimizing the value of the rejected tasks

requires a combinatorial search that would be too expensive to be performed online

for large task sets.

To take advantage of early completions and reduce the pessimism of the acceptance

test, some algorithms use an online reclaiming mechanism that exploits the saved time

to possibly recover previously rejected tasks. For example, in RED, a rejected task

is not removed from the system, but it is temporarily parked in a

Reject Queue

, with

the hope that it can be recovered due to some early completion. If

δ

is the time saved

by the running task, then all the residual laxities will increase by

δ

, and some of the

rejected tasks may be recovered based on their value.

Search WWH ::

Custom Search