Hardware Reference


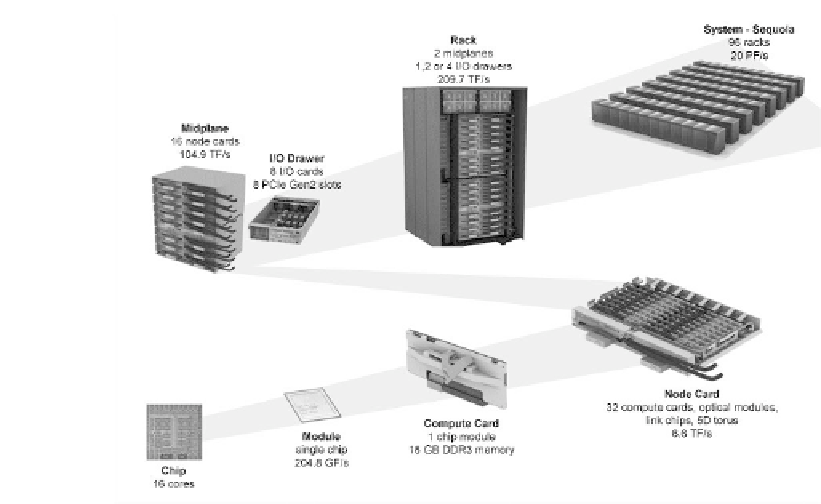

FIGURE 5.1 (See color insert): A pictorial representation of the hardware packaging hierarchy of the BG/Q scaling architecture. The smallest configuration could be a portion of a midplane and a single I/O card. The Sequoia system is composed of 96 racks, each with one I/O drawer containing eight I/O cards (768 I/O nodes total).

a full Red Hat Enterprise Linux-based kernel (the Lustre client software is run at this level). Compute nodes are connected to other compute nodes by the 5D torus network, but I/O nodes perform all I/O requests on behalf of compute nodes. The compute nodes "function ship" I/O requests to their designated I/O node. I/O nodes are the only connection to the "outside world" (at LLNL, this is by means of a PCIe IB adapter card to a QDR InfiniBand network). Further, I/O nodes number only a fraction of the compute nodes (1:32, 1:64, or 1:128). For example, on Sequoia, one I/O node is paired with 128 compute nodes. Compute nodes are located inside the rack, and I/O nodes are usually housed in drawers external to the main rack. Finally, compute nodes are water-cooled, and I/O nodes are air-cooled.
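The ratios above can be checked against the Sequoia figures with a little arithmetic. The following is a minimal sketch, not a tool from the text. The 96 racks, the eight I/O cards per rack, and the 1:32, 1:64, and 1:128 ratios come from the passage and the figure caption. The value of 1,024 compute nodes per rack is an assumption about the BG/Q rack that this excerpt does not state, and the function name `io_nodes_for` is hypothetical.

```python
# Figures taken from the text and the Figure 5.1 caption.
RACKS = 96
IO_CARDS_PER_RACK = 8            # one I/O drawer of eight cards per rack
IO_RATIOS = (32, 64, 128)        # compute nodes served by one I/O node

# Assumption (not stated in this excerpt): compute nodes per BG/Q rack.
COMPUTE_NODES_PER_RACK = 1024


def io_nodes_for(compute_nodes: int, ratio: int) -> int:
    """Return the I/O nodes needed to serve compute_nodes at 1:ratio, rounding up."""
    return -(-compute_nodes // ratio)


compute_nodes = RACKS * COMPUTE_NODES_PER_RACK
io_nodes_from_packaging = RACKS * IO_CARDS_PER_RACK

if __name__ == "__main__":
    print(f"I/O nodes from packaging: {io_nodes_from_packaging}")
    for ratio in IO_RATIOS:
        print(f"1:{ratio} -> {io_nodes_for(compute_nodes, ratio)} I/O nodes")
```

Under the stated assumption, the 1:128 ratio yields the same 768 I/O nodes that the packaging count (96 racks times eight cards) gives, so the caption and the ratio quoted for Sequoia agree.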

When LC's initial BG/L system arrived at LLNL, LC was already committed to Lustre. This was the first system from IBM where they ran the Lustre file system instead of GPFS, and also the first IBM system to run Lustre. The first great challenge was to port the Lustre client to the BG/L I/O node. The second great challenge was to deal with the performance ramifications of the BG/L design: (1) at a greater scale where an application would likely be accessing more files (likely many more); (2) though the BG/L I/O node was