
computing, data analysis, and other post-data-acquisition services to support the experimental facilities.

Recently, formal user requirement reviews [6] have identified a broad range of storage needs. At one extreme is N-to-1 (shared-file) I/O, in which N compute nodes write to a single file that can reach multiple terabytes. At the other is N-to-N (file-per-process) I/O, in which N compute nodes write N separate files, demanding millions of files per computational job. Users are interested in checkpointing for two reasons. First, it improves application resiliency in an increasingly complex, large-scale computing and storage environment. Second, it lets what would be one very long job run as many shorter jobs, each continuing from the checkpoint written by the previous one.
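The two I/O patterns and the checkpoint/restart chaining described above can be illustrated with a minimal, single-process sketch. The loop over ranks stands in for N concurrent processes. The file names (`ckpt.shared`, `ckpt.000000`, ...), the 16-byte per-rank record, and the update rule are hypothetical placeholders. Production codes would typically use MPI-IO or a parallel I/O library rather than plain Python file calls.

```python
import os
import struct
import tempfile

# Hypothetical per-rank checkpoint record: (step, state) as an 8-byte int + 8-byte float.
REC = struct.Struct("<qd")

def ckpt_write(directory, layout, records):
    """Write one (step, state) record per rank in the chosen layout."""
    if layout == "shared":
        # N-to-1: all ranks share one file; rank r owns bytes [r*REC.size, (r+1)*REC.size).
        with open(os.path.join(directory, "ckpt.shared"), "wb") as f:
            for rank, rec in enumerate(records):  # loop stands in for N concurrent ranks
                f.seek(rank * REC.size)
                f.write(REC.pack(*rec))
    else:
        # N-to-N: each rank writes its own file, so N ranks create N files.
        for rank, rec in enumerate(records):
            with open(os.path.join(directory, f"ckpt.{rank:06d}"), "wb") as f:
                f.write(REC.pack(*rec))

def ckpt_read(directory, layout, nranks):
    """Return the saved records, or None if no checkpoint exists yet (first job)."""
    if layout == "shared":
        path = os.path.join(directory, "ckpt.shared")
        if not os.path.exists(path):
            return None
        with open(path, "rb") as f:
            data = f.read()
        return [REC.unpack_from(data, r * REC.size) for r in range(nranks)]
    paths = [os.path.join(directory, f"ckpt.{r:06d}") for r in range(nranks)]
    if not all(os.path.exists(p) for p in paths):
        return None
    records = []
    for p in paths:
        with open(p, "rb") as f:
            records.append(REC.unpack(f.read()))
    return records

def run_job(directory, layout, nranks, total_steps, steps_per_job):
    """One short batch job: resume from the last checkpoint, advance, checkpoint again."""
    records = ckpt_read(directory, layout, nranks) or [(0, float(r)) for r in range(nranks)]
    new = []
    for step, state in records:
        end = min(step + steps_per_job, total_steps)
        for _ in range(step, end):
            state = 0.5 * state + 1.0  # placeholder for one timestep of real work
        new.append((end, state))
    ckpt_write(directory, layout, new)
    return new

def run_campaign(layout, nranks, total_steps, steps_per_job):
    """Split one long run into several short jobs chained through checkpoints (nranks >= 1)."""
    with tempfile.TemporaryDirectory() as d:
        records, jobs = [], 0
        while not records or records[0][0] < total_steps:
            records = run_job(d, layout, nranks, total_steps, steps_per_job)
            jobs += 1
        nfiles = len(os.listdir(d))
    return records, nfiles, jobs
```

For example, `run_campaign("shared", 4, 100, 30)` needs four short jobs and leaves one checkpoint file. The `"per_process"` layout leaves four files, one per rank. Both layouts reach exactly the same final state as a single 100-step job. In this sketch that holds because doubles round-trip through `struct` without loss. The trade-off mirrors the one above: the shared file keeps the file count at one but requires every rank to write to a common file at computed offsets, while file-per-process avoids that coordination at the cost of N files per checkpoint.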

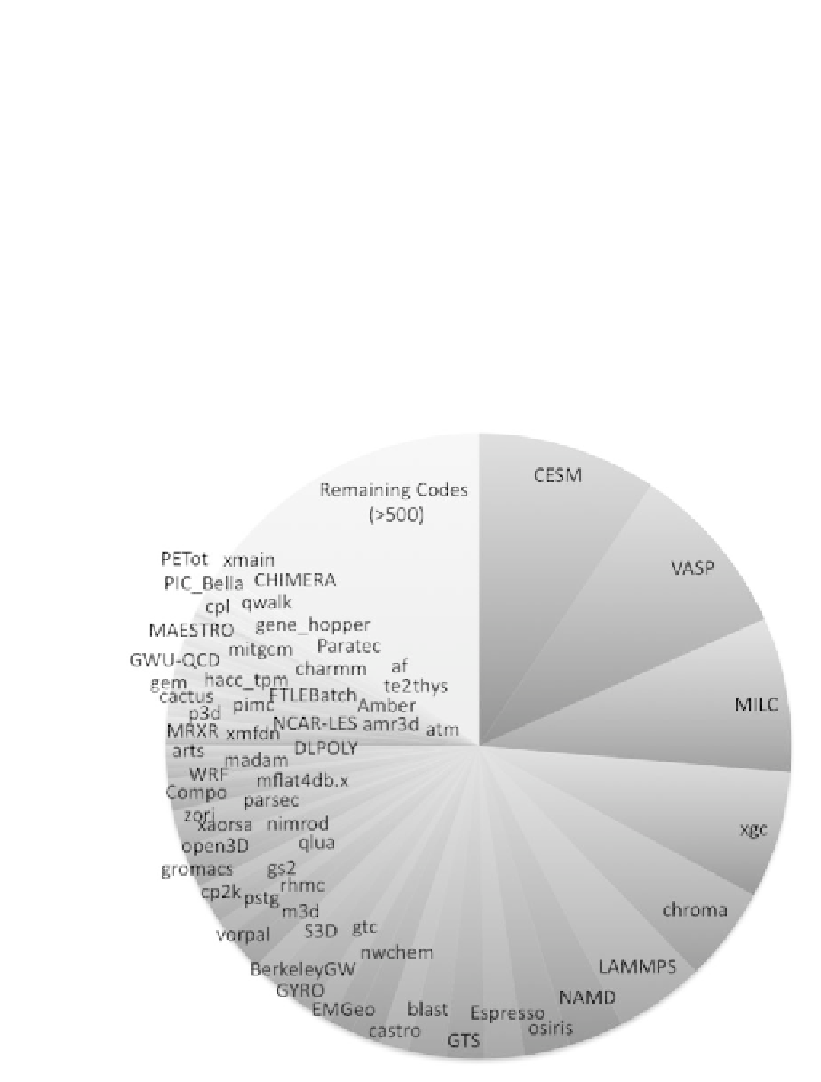

In preparation for helping science projects ready their applications for exascale environments, NERSC user services compiled a chart of the types and quantities of codes running on NERSC systems (see Figure 2.4). The chart shows the broad range of codes, which also have diverse I/O workload needs.

FIGURE 2.4: A pie chart of science applications for 2012 that were run at NERSC. [Image courtesy of Katie Antypas (NERSC).]