18

16.8

16

14

12

10

8

6

4.23

3.66

4

1.86

2

1.14

0

Cielo

Sequoia

Red Sky UC

Palmetto

Dawn

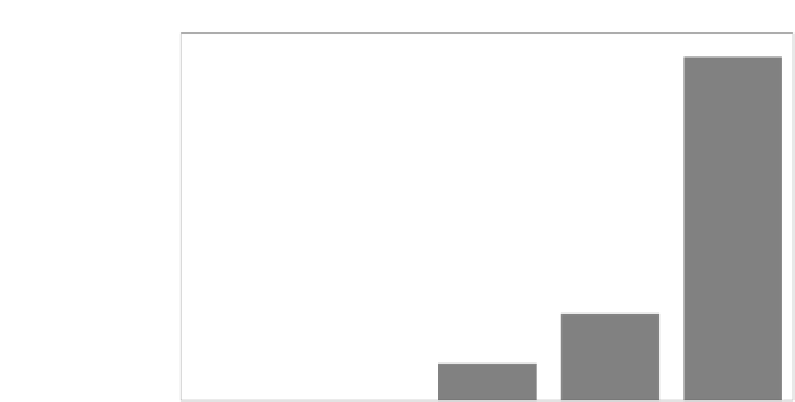

FIGURE 34.5: The number of disks installed in each system per teraFLOP of

compute power, by machine.

Notably, Dawn uses more than one fifth of its power for storage. Dawn's file system is built from a comparatively large number (8,400) of small, high-RPM disks. In comparison, Red Sky uses only 1,500 slower disks, even though it has comparable compute performance. Figure 34.5 shows that, per TFLOP of compute capacity, Dawn has a comparatively large number of disks installed.
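The normalization behind Figure 34.5 is a simple ratio, and a minimal sketch may make it concrete. The disk counts below come from the text; the teraFLOP value is a hypothetical placeholder (the text does not state it), chosen only so the arithmetic is easy to follow, and the function name `disks_per_teraflop` is likewise an illustrative choice.

```python
def disks_per_teraflop(disk_count: int, teraflops: float) -> float:
    """Return the number of installed disks per teraFLOP of compute."""
    if teraflops <= 0:
        raise ValueError("teraflops must be positive")
    return disk_count / teraflops


# Disk counts are taken from the text.
DAWN_DISKS = 8_400
RED_SKY_DISKS = 1_500

# ASSUMPTION: hypothetical compute figure, not stated in the text.
# The same value is used for both machines only because the text
# describes their compute performance as "comparable".
ASSUMED_TFLOPS = 500.0

dawn_ratio = disks_per_teraflop(DAWN_DISKS, ASSUMED_TFLOPS)
red_sky_ratio = disks_per_teraflop(RED_SKY_DISKS, ASSUMED_TFLOPS)

print(f"Dawn:    {dawn_ratio:.2f} disks/TFLOP")
print(f"Red Sky: {red_sky_ratio:.2f} disks/TFLOP")
```

Under this assumed compute figure, the 5.6x difference in disk count carries straight through to the per-teraFLOP ratio. The actual values plotted in the figure depend on each machine's measured performance, so the numbers printed here are illustrative only.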

Dawn is included as an indication of the aforementioned "inflection points" to come. In June 2009, when Dawn was introduced to the TOP500 list, it was the largest known Blue Gene machine being run outside of Lawrence Livermore National Laboratory with Lustre. The other Blue Gene/P systems in the Top 10 ran IBM's GPFS, with Intrepid using 7,680 disks [2], a number very similar to Dawn's 8,400. Livermore was already experienced in running Lustre against its 212,992-core Blue Gene/L, where it saw disk accesses resembling random I/O even when each core was given a sequential workload [7]. Extra disks (with high rotational speed for reduced latency) provided more performance under this undesirable access pattern. It seems this performance problem has been solved, as Sequoia (also shown in Figure 34.5) has a much lower disks-to-teraFLOP ratio [31].

The data strongly show that storage systems consume much less power than compute nodes. Figure 34.5 shows the power use data for each machine, normalized by the LINPACK [14] score for each platform. The proportion of power consumed by storage is remarkably similar among the machines sampled (excepting Dawn), between 0.17 and 0.31 kW/TFLOPS. In comparison,