Hardware Reference

In-Depth Information

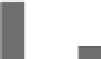

500

Graph truncated: 945.7 TiB

Climate user A

All others

400

300

200

100

0

Read

Write

Read

Write

Read

Write

Small jobs

(up to 4K procs)

Medium jobs

(up to 16K procs)

Large jobs

(up to 160K procs)

FIGURE 27.3: Total amount of data read and written by Darshan-

instrumented jobs in each partition size category on Intrepid.

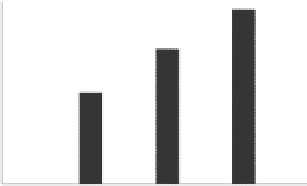

100 %

Used at least 1 file per process

Used MPI-IO

80 %

60 %

40 %

20 %

0 %

Small jobs

(up to 4K procs)

Medium jobs

(up to 16K procs)

Large jobs

(up to 160K procs)

FIGURE 27.4: Prevalence of key I/O characteristics in each partition size

category on Intrepid.

as having a file-per-process access pattern if it opened at least N files during execution, where N is the number of MPI processes. Such jobs account for 31% of all core hours in the small job size category, but they do not appear at all in the large job size category. Similarly, a job was defined as using MPI-IO if it opened at least one file using MPI_File_open(). In contrast to the file-per-process usage pattern, MPI-IO usage increases with job scale, going from 50% for small jobs up to 96% for large jobs. The decline in file-per-process access patterns and the increase in MPI-IO usage suggest that large-scale applications are using more advanced I/O strategies in order to scale effectively and simplify data management.
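The two classification rules above, and the per-category core-hour shares they feed, can be sketched in Python. This is a minimal illustration under stated assumptions, not Darshan's own analysis code: the `JobSummary` record and its fields are hypothetical stand-ins for counters that would be extracted from Darshan logs, the size-category bounds assume "4K", "16K", and "160K" mean 4,096, 16,384, and 163,840 processes, and weighting the MPI-IO share by core hours is an assumption (the text states core-hour weighting only for the file-per-process figure).

```python
from dataclasses import dataclass


# Hypothetical per-job summary; field names are illustrative, not Darshan's log format.
@dataclass
class JobSummary:
    nprocs: int          # number of MPI processes (N)
    files_opened: int    # files opened during execution
    mpiio_opens: int     # files opened via MPI_File_open()
    core_hours: float


# Assumed upper bounds for the small / medium / large categories.
SIZE_BOUNDS = [("small", 4096), ("medium", 16384), ("large", 163840)]


def size_category(nprocs: int) -> str:
    """Return the first size category whose upper bound covers nprocs."""
    for name, bound in SIZE_BOUNDS:
        if nprocs <= bound:
            return name
    raise ValueError(f"{nprocs} processes exceeds the largest category")


def is_file_per_process(job: JobSummary) -> bool:
    # File-per-process: at least N files opened, N = number of MPI processes.
    return job.files_opened >= job.nprocs


def uses_mpiio(job: JobSummary) -> bool:
    # MPI-IO: at least one file opened with MPI_File_open().
    return job.mpiio_opens >= 1


def core_hour_shares(jobs):
    """Per size category, the fraction of core hours in jobs matching each rule."""
    totals = {}
    for job in jobs:
        cat = size_category(job.nprocs)
        t = totals.setdefault(cat, {"all": 0.0, "fpp": 0.0, "mpiio": 0.0})
        t["all"] += job.core_hours
        if is_file_per_process(job):
            t["fpp"] += job.core_hours
        if uses_mpiio(job):
            t["mpiio"] += job.core_hours
    return {
        cat: {
            "file_per_process": t["fpp"] / t["all"],
            "mpiio": t["mpiio"] / t["all"],
        }
        for cat, t in totals.items()
        if t["all"] > 0
    }


if __name__ == "__main__":
    # Made-up jobs for illustration only; these are not Intrepid measurements.
    jobs = [
        JobSummary(nprocs=1024, files_opened=1024, mpiio_opens=0, core_hours=30.0),
        JobSummary(nprocs=2048, files_opened=3, mpiio_opens=1, core_hours=70.0),
        JobSummary(nprocs=65536, files_opened=2, mpiio_opens=2, core_hours=500.0),
    ]
    print(core_hour_shares(jobs))
```

With the made-up jobs above, the small category comes out at 30% file-per-process and 70% MPI-IO by core hours, while the single large job counts only toward MPI-IO. Weighting by core hours rather than job count keeps a handful of very large runs from being outweighed by many short test jobs.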

27.3 Conclusion

The Darshan I/O characterization tool has demonstrated that it is possible to instrument leadership-class production applications with negligible overhead. Since its initial development in 2009 [4], it has been in production on