Hardware Reference

In-Depth Information

[Figure 27.2 plot: x-axis PARTITION SIZE; y-axis ticks 0 to 2500; bins grouped into small jobs (up to 4K procs, 28.5% of core-hours), medium jobs (up to 16K procs, 31% of core-hours), and large jobs (up to 160K procs, 40.5% of core-hours)]

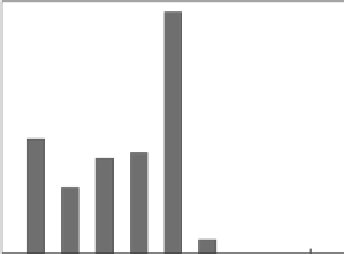

FIGURE 27.2: Histogram of the number of core hours consumed by Darshan-instrumented jobs in each partition size on Intrepid. The histogram bins are further categorized into small, medium, and large sizes for subsequent analysis.

data collected on the ALCF Intrepid Blue Gene/P system. Intrepid is a 557-teraflop system containing 163,840 compute cores, 80 TB of RAM, and 7.6 PB of storage. More information about Intrepid and its I/O subsystem can be found in Chapter 4. Darshan has been used to automatically instrument MPI applications on Intrepid since January 2010. In this case study, five months of Darshan log files are analyzed, covering January 1 to May 30, 2013. Over this period, Darshan instrumented 13,613 production jobs from 117 unique users.

The jobs instrumented by Darshan in the first five months of 2013 ranged in size from 1 process to 163,840 processes, offering an opportunity to observe how I/O behavior varies across application scales. Figure 27.2 shows a histogram of the number of core hours consumed by Darshan-instrumented jobs in each of the nine available partition sizes on Intrepid. The most popular partition size in terms of core-hour consumption contains 32,768 cores. For further analysis, the jobs can be split into three broader categories of comparable size: 28.5% of all core hours were in partitions of 4,096 cores or smaller, 31% were in partitions of 8,192 or 16,384 cores, and 40.5% were in partitions of 32,768 cores or larger.
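The three-way split can be expressed as a small classification rule followed by a percentage calculation. The sketch below assumes partition sizes are given in cores and are powers of two, as the sizes quoted above are. The function names `size_category` and `core_hour_shares` are hypothetical, and the per-partition core-hour values in the example are made up so that the shares reproduce the 28.5%, 31%, and 40.5% figures; they are not the values plotted in Figure 27.2.

```python
from __future__ import annotations

from collections import defaultdict


def size_category(partition_cores: int) -> str:
    """Map a partition size in cores to the job-size category used in the text.

    Assumes power-of-two partition sizes, so "medium" covers 8,192 and 16,384.
    """
    if partition_cores <= 4096:
        return "small"
    if partition_cores <= 16384:
        return "medium"
    return "large"


def core_hour_shares(core_hours_by_partition: dict[int, float]) -> dict[str, float]:
    """Return each category's percentage of the total core hours."""
    totals: dict[str, float] = defaultdict(float)
    for cores, hours in core_hours_by_partition.items():
        totals[size_category(cores)] += hours
    grand_total = sum(totals.values())
    return {category: 100.0 * hours / grand_total for category, hours in totals.items()}


# Hypothetical core-hour totals (arbitrary units) keyed by partition size in cores.
# These are NOT the study's data; they were chosen only to reproduce the quoted shares.
example = {2048: 10.0, 4096: 18.5, 8192: 11.0, 16384: 20.0, 32768: 25.0, 65536: 15.5}
shares = core_hour_shares(example)
print(shares)
```

Using cumulative thresholds rather than an explicit list of sizes keeps the rule short, but it means a non-power-of-two size such as 6,000 cores would be counted as medium, a case the text does not address.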

Figure 27.3 shows the total amount of data read and written by jobs in each partition size category. All three categories are dominated by write activity, with one notable exception: the small job category is dominated by a single read-intensive climate application labeled "Climate user A." This application accounted for a total of 776.5 TB of reads and 31.1 TB of writes over the course of the study, reading as much as 4.8 TB of netCDF data in each job instance. The other notable trend evident in Figure 27.3 is that smaller jobs accounted for a larger fraction of the system's I/O usage than larger jobs did.
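The read-heavy profile of "Climate user A" can be quantified with two quick calculations from the totals quoted above. Here R and W denote the application's total data read and written, and n the number of its job instances; these symbols are introduced only for this sketch. The second line assumes that 4.8 TB is an upper bound on the data read per job instance, as "as much as" implies, so it yields a lower bound on the number of instances rather than an exact count.

```latex
\frac{R}{W} = \frac{776.5\ \text{TB}}{31.1\ \text{TB}} \approx 25,
\qquad
n \ \ge\ \frac{776.5\ \text{TB}}{4.8\ \text{TB per instance}} \approx 161.8
\ \Rightarrow\ n \ge 162
```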

Figure 27.4 illustrates the prevalence of two key I/O characteristics across job size categories. The first is file-per-process file usage. A job was defined