Hardware Reference

In-Depth Information

Group(0(

Group(1(

Group(2(

Group(3(

P

0(

P

1(

P

2(

P

3(

P

4(

P

5(

P

6(

P

7(

P

8(

P

9(

P

10(

P

11(

P

12(

P

13(

P

14(

P

15(

Aggregator(0((on(P

0

)(

Aggregator(1((on(P

4

)(

Aggregator(2((on(P

8

)(

Aggregator(3((on(P

12

)(

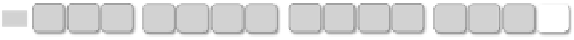

Interconnec(on)Network)

OST

0(

OST

1(

OST

2(

OST

3(

Metadata)

file)

Subfile)0)

Subfile)1)

Subfile)2)

Subfile)3)

P

0(

P

1(

P

2(

P

3(

b)(Brigade(mode(

a)(All>to>one(mode(

P

0(

P

1(

P

2(

P

3(

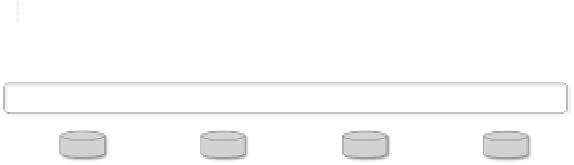

FIGURE22.1:Group-basedhierarchicalI/OcontrolforADIOS.Thishierar-

chicalcontrolenablesADIOStoecientlywriteoutsmalldatachunksfrom

S3D.

22.2.4 Aggregation and Subfiling

There are two levels of data aggregation underneath ADIOS write calls.

This is intended to make the final data as large as possible when being flushed

to disk, and as a result, expensive disk seeks can be reduced. At the first level,

data are aggregated in memory within a single MPI processor among all vari-

ables output by the adios write statement, i.e., a write-behind strategy. For

the example below, variable NX, NY and temperature in the adios write state-

ment will all be copied to ADIOS internal buffer, the maximum size of which

can be configured through the ADIOS XML file, instead of being flushed out

to disk. This is a well-known technique being used by other I/O libraries,

such as IOBUF and the FUSE file system. Meanwhile, the second level of

aggregation occurs between a sub-set of processors. This is to further deal

with the situation that each processor has a relatively small amount of data

to output (after the first-level aggregation). An good example is combustion

S3D code [2, 3]. In a typical 96,000-core S3D run on JaguarPF, each pro-

cessor outputs under 2 MB. Clearly, in this case, many small writes to disk

hurt I/O performance. One can argue that, to make data further larger, using

MPI collectives to exchange bulk data between processors can be costly. Here

we postulate four reasons in favor of aggregation techniques. (1) Interconnect