
180

160

140

120

100

80

60

40

Stripe Count = 1

Stripe Count = 2

20

0

0

1000

2000

3000

4000

5000

6000

# of Write Hosts

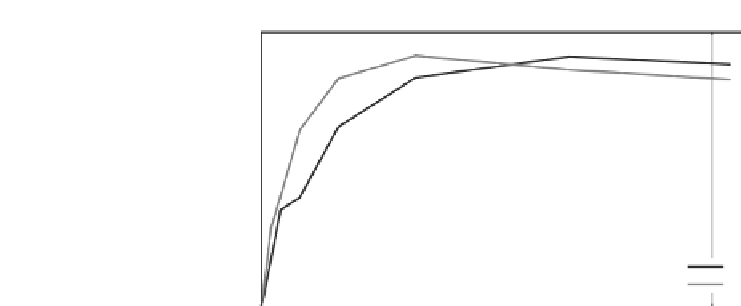

FIGURE 7.4: Raw write performance characteristics of a large, parallel Lustre file system. Results measured using one I/O task per host on TACC's Stampede SCRATCH file system, which contains 348 OSTs (aggregate rates measured using a fixed payload size of 2 GB per host writing to individual files).
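The caption describes a fixed-payload, file-per-host test: every host writes the same 2 GB, so the aggregate rate is the total data moved divided by the elapsed time. The sketch below shows one common way to compute that figure. It assumes that elapsed time is measured from the earliest start to the latest finish across hosts and that "GB" means 10^9 bytes. The text does not state the exact timing method, and the `HostTiming` and `aggregate_rate_gbps` names are hypothetical.

```python
# Minimal sketch: aggregate write rate for a fixed-payload, file-per-host test.
# Assumptions (not stated in the text): elapsed time spans the earliest start to
# the latest finish across all hosts, and GB means 10**9 bytes.
from dataclasses import dataclass

GB = 10**9  # decimal gigabyte (assumption)


@dataclass
class HostTiming:
    """Hypothetical per-host timing record, in seconds."""
    start_s: float
    end_s: float


def aggregate_rate_gbps(timings: list[HostTiming], payload_bytes: int = 2 * GB) -> float:
    """Return the aggregate write rate in GB/s when every host writes payload_bytes."""
    if not timings:
        raise ValueError("need at least one host")
    total_bytes = payload_bytes * len(timings)
    # The slowest host sets the end of the interval, so stragglers lower the rate.
    elapsed = max(t.end_s for t in timings) - min(t.start_s for t in timings)
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return total_bytes / elapsed / GB


if __name__ == "__main__":
    # Hypothetical example: 1,000 hosts that all write from t = 0 s to t = 12.5 s.
    hosts = [HostTiming(0.0, 12.5) for _ in range(1000)]
    print(aggregate_rate_gbps(hosts))  # 2,000 GB / 12.5 s = 160.0
```

Measuring from the first start to the last finish is the conservative choice. A single slow host pulls the reported rate down, which matches how a user of a shared file system experiences the I/O phase of a job.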

a quiescent system shortly after the file system was formatted, such that it had very little capacity utilized. Consequently, these tests represent best-case file system performance, as there is no competing I/O traffic or significant impact from fragmentation. Two sets of results are shown in Figure 7.4, differing in the underlying Lustre stripe count used for the files written. In both cases, the peak file system write speed exceeded 160 GB/s (the corresponding peak read rate, which is generally lower than the write rate in Lustre, was measured at 127 GB/s). However, with a stripe count of 2 the peak was reached at smaller client counts, at the expense of a slightly lower aggregate rate than a stripe count of 1 when using 4,000 clients or more. Based on these performance signatures, and the fact that a stripe count of 2 essentially doubles single-client performance, the default stripe count on SCRATCH was set to 2. Users are required to increase this striping default to larger values when using an N-to-one approach, in which many clients perform I/O to a single file.
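The stripe-count reasoning above follows from how Lustre lays a file out: fixed-size chunks are assigned round-robin (RAID-0 style) to the file's stripe_count OST objects. The sketch below models only that mapping. The 1 MiB stripe size is an assumption, and the returned values are indices into the file's layout rather than global OST numbers, which Lustre chooses at file creation. The function names are hypothetical.

```python
# Minimal sketch of round-robin (RAID-0 style) file striping as used by Lustre.
# Assumptions: 1 MiB stripe size; results are indices 0..stripe_count-1 into the
# file's layout, not the global OST numbers the file system actually assigns.
MiB = 1 << 20


def stripe_for_offset(offset: int, stripe_size: int, stripe_count: int) -> int:
    """Return which of the file's stripe objects holds the byte at `offset`."""
    if offset < 0 or stripe_size <= 0 or stripe_count <= 0:
        raise ValueError("invalid layout parameters")
    return (offset // stripe_size) % stripe_count


def osts_touched(length: int, stripe_size: int, stripe_count: int, start: int = 0) -> set[int]:
    """Return the stripe objects touched by a contiguous write of `length` bytes."""
    if length <= 0:
        return set()
    first = start // stripe_size
    last = (start + length - 1) // stripe_size
    # After stripe_count consecutive chunks every object has been used, so stop there.
    stop = min(last, first + stripe_count - 1)
    return {chunk % stripe_count for chunk in range(first, stop + 1)}


if __name__ == "__main__":
    # File-per-host: one 2 GB file lands on 1 object or is spread over 2.
    print(osts_touched(2 * 10**9, MiB, 1))  # {0}
    print(osts_touched(2 * 10**9, MiB, 2))  # {0, 1}

    # N-to-one: 4,000 hypothetical clients each write 1 MiB to one shared file.
    shared: set[int] = set()
    for rank in range(4000):
        shared |= osts_touched(MiB, MiB, 2, start=rank * MiB)
    print(shared)  # {0, 1}: every client's data funnels into only 2 objects
```

The two cases explain the policy. A stripe count of 2 lets a single client's file draw on two OSTs instead of one. A shared file with that same default confines all N writers to just two OSTs, which is why larger stripe counts are needed for N-to-one I/O.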

The results in Figure 7.4 also highlight another attractive Lustre feature: a significant fraction of the overall file system performance can be maintained at large client counts. At the largest scale, the number of simultaneous write clients is 18 times the number of available Lustre OSTs. Even so, performance at these large client counts is still about 94% of the peak measured performance, and this is a primary motivation for deploying these types of parallel file systems on a large, general-purpose compute system on which many users will likely be performing I/O simultaneously.
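The figures in this paragraph can be combined into a quick back-of-the-envelope check. The sketch uses only values stated in the text: 348 OSTs, 18 clients per OST, a peak above 160 GB/s, and 94% retained. Because the text gives the peak only as a lower bound, the derived rates are lower bounds too. The ratio of 18 may also be rounded, so the client count is approximate. The per-OST figure assumes load is spread evenly across OSTs, which is an idealization. The function name is hypothetical.

```python
# Back-of-the-envelope arithmetic for the largest-scale run described in the text.
# Inputs come from the text; the peak is a lower bound ("over 160 GB/s"), so the
# derived rates are lower bounds. Even spreading of load across OSTs is assumed.
OST_COUNT = 348           # OSTs in Stampede's SCRATCH file system
CLIENTS_PER_OST = 18      # client-to-OST ratio at the largest scale (may be rounded)
PEAK_WRITE_GBPS = 160.0   # lower bound on the peak aggregate write rate
RETAINED_FRACTION = 0.94  # fraction of peak retained at the largest scale


def largest_scale_summary(osts: int, ratio: int, peak_gbps: float, retained: float) -> dict:
    """Return approximate client count and aggregate, per-client, and per-OST rates."""
    clients = osts * ratio
    aggregate_gbps = peak_gbps * retained
    return {
        "clients": clients,
        "aggregate_gbps": aggregate_gbps,
        "per_client_mb_s": aggregate_gbps * 1000 / clients,  # GB/s -> MB/s per client
        "per_ost_gbps": aggregate_gbps / osts,  # assumes evenly balanced OSTs
    }


if __name__ == "__main__":
    summary = largest_scale_summary(OST_COUNT, CLIENTS_PER_OST, PEAK_WRITE_GBPS, RETAINED_FRACTION)
    for key, value in summary.items():
        print(f"{key}: {value:.2f}" if isinstance(value, float) else f"{key}: {value}")
```

With these inputs the run involves roughly 6,264 clients sharing at least about 150 GB/s, or only about 24 MB/s per client. The value of the file system lies in sustaining that aggregate under heavy oversubscription, not in per-client speed at full scale.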