Hardware Reference

In-Depth Information

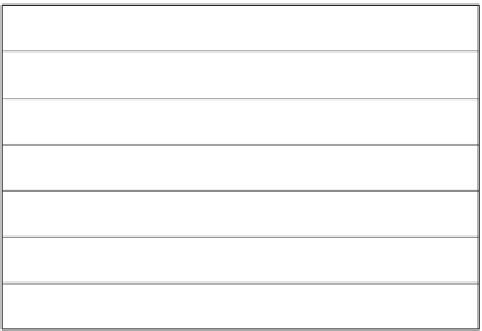

| File System | User Quotas | Life Time | Target Usage |
|---|---|---|---|
| $HOME | 5 GB, 150K inodes | Project | Permanent user storage; automatically backed up. |
| $WORK | 400 GB, 3M inodes | Project | Large allocated storage; not backed up. |
| $SCRATCH | none | 14 Days | Large temporary storage; not backed up, purged periodically. |

FIGURE 7.1: Lustre file system quotas and target usage on TACC's Stampede system.
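As an illustration of how the limits in Figure 7.1 might be applied, the following minimal sketch encodes the quotas as data and reports which limits a given usage exceeds. The names `Quota`, `QUOTAS`, and `quota_violations` are hypothetical, and the use of decimal gigabytes is an assumption, since the figure does not say whether GB means 10^9 or 2^30 bytes. It is not how Lustre itself enforces quotas.

```python
from dataclasses import dataclass
from typing import List, Optional

GB = 10**9  # assumption: decimal gigabytes; the figure does not specify


@dataclass(frozen=True)
class Quota:
    max_bytes: Optional[int]   # None means no size quota
    max_inodes: Optional[int]  # None means no inode quota


# Values transcribed from Figure 7.1.
QUOTAS = {
    "$HOME": Quota(5 * GB, 150_000),
    "$WORK": Quota(400 * GB, 3_000_000),
    "$SCRATCH": Quota(None, None),
}


def quota_violations(fs: str, used_bytes: int, used_inodes: int) -> List[str]:
    """Return the names of the limits exceeded on the given file system."""
    quota = QUOTAS[fs]
    violations = []
    if quota.max_bytes is not None and used_bytes > quota.max_bytes:
        violations.append("bytes")
    if quota.max_inodes is not None and used_inodes > quota.max_inodes:
        violations.append("inodes")
    return violations
```

Usage exactly at a limit is treated here as within quota; that boundary choice is also an assumption of the sketch.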

containing abnormally high bad-sector counts. After completing the individual disk burn-in tests, the second step of the process was to build and test the production RAID sets that would ultimately be formatted to support Lustre. These multiple device (MD) RAID-level tests performed large block I/O to each of the six RAID devices per OSS in parallel in eight-hour intervals. For this particular hardware and RAID6 software RAID configuration, the performance for a satisfactory MD device was 595 MB/s. Any RAID set which did not meet this performance threshold was re-examined to identify slow drives inhibiting performance. Note that this approach follows similar deployment strategies on previous large-scale systems which have proved useful in isolating outliers and detecting infant mortality [4]. The entire process was repeated until the RAID set performance was consistent across all OSSs. To demonstrate the resulting performance consistency, Figure 7.2 presents the measured MD write speeds obtained for the OSSs serving $HOME and $WORK. Based on

FIGURE 7.2: Average software RAID performance for Lustre OST devices measured during disk burn-in procedure.
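The threshold check described in the burn-in procedure can be sketched as follows. This is a minimal illustration, not the tooling actually used on Stampede: the function name `slow_raid_sets`, the OSS names, and the sample write speeds are all hypothetical. Only the 595 MB/s threshold and the count of six MD devices per OSS come from the text.

```python
from typing import Dict, List

THRESHOLD_MB_S = 595.0  # satisfactory MD device performance quoted in the text


def slow_raid_sets(measurements: Dict[str, List[float]],
                   threshold: float = THRESHOLD_MB_S) -> Dict[str, List[int]]:
    """Map each OSS name to the indices of MD devices below the threshold.

    `measurements` maps a (hypothetical) OSS name to one write speed in MB/s
    per MD device. OSSs with no slow devices are omitted from the result.
    """
    flagged = {}
    for oss, speeds in measurements.items():
        slow = [i for i, speed in enumerate(speeds) if speed < threshold]
        if slow:
            flagged[oss] = slow
    return flagged


# Hypothetical measurements: six MD devices per OSS, as in the text.
sample = {
    "oss01": [612.0, 608.5, 599.0, 615.2, 603.3, 610.1],
    "oss02": [611.4, 540.7, 606.9, 609.8, 594.9, 613.0],
}
print(slow_raid_sets(sample))  # {'oss02': [1, 4]}
```

In a repeated burn-in cycle of the kind described, the flagged devices would be the ones re-examined for slow drives before the next test pass; an empty result would indicate that every RAID set met the threshold.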