Information Technology Reference

In-Depth Information

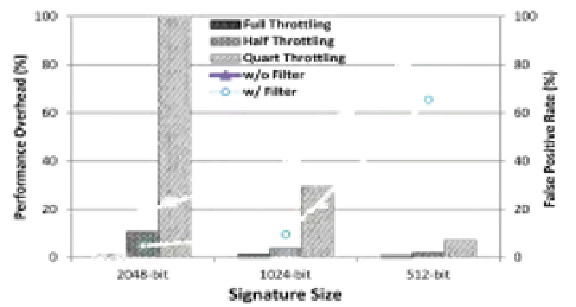

Fig. 4.

Performance and accuracy characteristics of GUARD. The graph shows (in bars) the

slowdown (%) of application being monitored for different throttling levels and signature sizes.

The graph also shows (in lines) the false positive rate (%) for different signature sizes used in

GUARD.

detection for 8-core, 16-core, and 32-core CPUs with near-zero (less than 2%) perfor-

mance overhead at

full

throttling. Table 3 shows the amount of GPU resources required

to perform data race detection, for different CPU configurations, at different throttling.

Ta b l e 3 .

Number of GPU SMs required for data race detection, for different CPU core count, at

different throttling. The GPU SM architecture is described in Section 4

CPU Core Count quart throttling half throttling full throttling

4

1

1

1

8

1

2

3

16

3

6

12

32

12

24

48

On detailed analysis of the performance of the GPU kernel, we observe that the

performance overhead of GUARD is mainly due to two reasons: (i) data accesses related

to the long signatures; and (ii) synchronization of the hundreds of threads used for H-B

comparisons. GUARD's GPU kernel stalls only for about 1.54% of its execution cycles

due to unavailability of data in any threads (memory related stalls). We see that the

signature table size is small enough to fit inside the GPU L2 cache. For a reasonable

GPU L1 data cache size, as in Table 1, the L1 data cache hit rate is more than 99%. We

also observe that GPU does a good job of

coalescing

memory accesses and limiting the

impact of data access latency on the performance of GUARD. Thread synchronizations,

on the other hand, are necessary for the correctness of H-B algorithm when mapped to

a highly parallel architecture like GPU.