Information Technology Reference

In-Depth Information

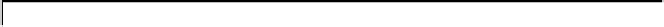

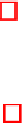

Example Orig. Lists BST PT

−

anal. PT

−

mask

PT

Splay Valgrind

binarySearch 0.01 0.51

0.62

1.59

0.53

0.53 0.64

0.27

insertionSort 0.12 1.27

1.26

3.86

1.25

1.25 1.30

2.81

matrixMult

0.01 58.48 90.43

9.01

8.57

8.75 398.60

0.48

matrixInv

0.02 21.54 29.94

1.90

1.42

1.53 145.80

0.47

quickSort

0.01 11.15 2.67

0.48

0.36

0.13 0.02

0.27

bubbleSort

0.22 4.64

7.16

32.58

7.26

6.90 7.21

3.36

merge

0.01 101.33 94.80

0.29

0.47

0.14 0.05

0.45

RedBlackTree 0.01 101.69 145.20

0.30

0.39

0.27 19.59

0.51

mergeSort

0.01

>

24h

>

24h

513.85

25.02

7.63 2.50

0.27

Fig. 9.

Detailed execution time (in sec.) for selected examples and techniques

7 Experimental Results

Performances.

To evaluate our memory monitoring solution, we performed in

total more than 300 executions for more than 30 programs obtained from about

10 examples with different levels of specifications and values of parameters. These

initial experiments were conducted on small-size examples because they were

mostly manually specified in e-acsl. We measured the execution time of the

original code and of the code instrumented by e-acsl2c with various options in

order to evaluate their performances (with and without optimizations, with four

different implementations of the store, etc.). Such indicators as the number of

monitored variables, memory allocations, records and queries in a Patricia trie

were recorded as well.

Fig. 9 presents some of these examples and indicators in detail. Its columns

give execution time of the original program, using store implementation based on

lists, binary search trees, three versions of Patricia tries and Splay trees. Patricia

tries based implementations were tested respectively without dataflow analysis

of Sec. 6, without query optimization of Sec. 5 and with both optimizations.

Time of analysis with Valgrind tool [17] is indicated in the last column.

Most experimental results have already been summarized at the end of Sec. 4,

5 and 6, where the average rates were computed for a complete list of examples

(some of which are not presented in Fig. 9). We notice in addition that the exe-

cution time with Valgrind is not comparable with our solution and may depend

on the number of memory-related annotations in the specification.

Error detection capacity.

In addition to performance evaluation, we used mu-

tational testing to evaluate the capacity of error detection using runtime as-

sertion checking with Frama-C. We considered five annotated examples (see

Fig. 10), generated their

mutants

(by performing a

mutation

in the source

code) and applied assertion checking on them. Annotations included precon-

ditions, postconditions, assertions, memory-related constructs etc., and were

written in e-acsl. Mutations included: numerical-arithmetic operator modifi-

cations, pointer-arithmetic operator modifications, comparison operator modi-

fications and logic (

land

and

lor

) operator modifications. The PathCrawler

test generation tool [18] has been used to produce test cases. Each mutant was