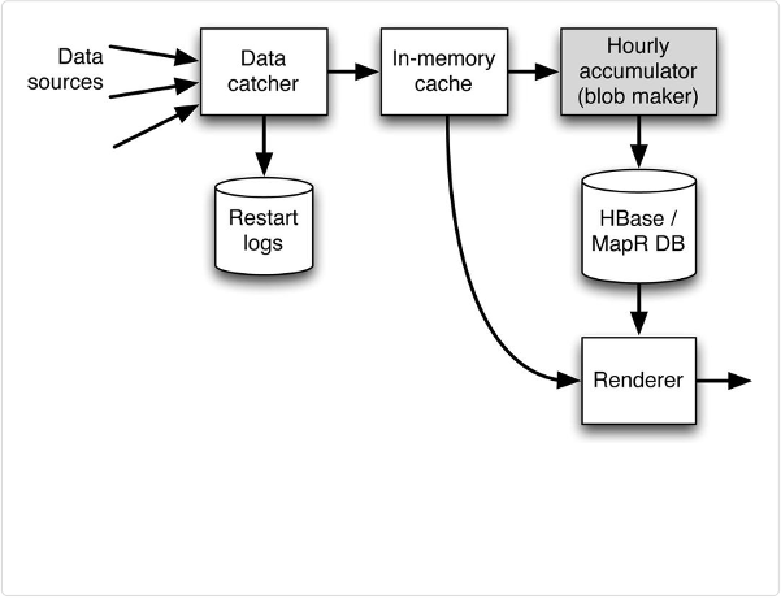

Figure 3-6. Data flow for the direct blob insertion approach. The catcher stores data in the cache and writes it to the restart logs. The blob maker periodically reads from the cache and directly inserts compressed blobs into the database. The performance advantage of this design comes at the cost of requiring access by the renderer to data buffered in the cache as well as to data already stored in the time series database.
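The data flow in the figure can be sketched in a few lines of Python. This is a toy, in-memory illustration only, not the implementation behind the figure: the class and method names, the fixed time window, and the use of JSON plus zlib for the blob format are all assumptions made for the example, and a plain dictionary and list stand in for the database table and the restart log.

```python
import json
import zlib
from collections import defaultdict


class DirectBlobPipeline:
    """Toy model of the catcher / blob maker / renderer data flow (hypothetical names)."""

    def __init__(self, window_seconds=3600):
        self.window_seconds = window_seconds
        self.cache = defaultdict(list)  # (series, window_start) -> [(t, value), ...]
        self.restart_log = []           # stand-in for an append-only restart log
        self.database = {}              # (series, window_start) -> compressed blob

    def _key(self, series, t):
        # Every point belongs to the fixed-width time window that contains it.
        return (series, t - t % self.window_seconds)

    def catch(self, series, t, value):
        """Catcher: record the point in the restart log, then buffer it in the cache."""
        self.restart_log.append((series, t, value))
        self.cache[self._key(series, t)].append((t, value))

    def make_blobs(self, now):
        """Blob maker: compress each closed window and insert it as a single blob."""
        closed = [k for k in self.cache if k[1] + self.window_seconds <= now]
        for key in closed:
            points = sorted(self.cache.pop(key))
            self.database[key] = zlib.compress(json.dumps(points).encode())
        return len(closed)

    def render(self, series, start, end):
        """Renderer: merge stored blobs with points still buffered in the cache."""
        points = []
        for (name, _), blob in self.database.items():
            if name == series:
                points.extend(tuple(p) for p in json.loads(zlib.decompress(blob)))
        for (name, _), buffered in self.cache.items():
            if name == series:
                points.extend(buffered)
        return sorted(p for p in points if start <= p[0] < end)


pipeline = DirectBlobPipeline(window_seconds=60)
for t in range(0, 90, 10):
    pipeline.catch("cpu", t, t * 0.5)
pipeline.make_blobs(now=60)            # only the window starting at t=0 has closed
print(pipeline.render("cpu", 40, 80))  # two points from the blob, two from the cache
```

The `render` method shows the cost named in the caption: a query has to consult both the database and the cache to see all the data. The sketch never truncates the restart log or replays it after a failure, both of which a real system would need to do.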

What are the advantages of this direct blobbing approach? A real-world example shows what it can do. This architecture has been used to insert in excess of 100 million data points per second into a MapR-DB table using just 4 active nodes in a 10-node MapR cluster. These nodes are fairly high-performance nodes, with 16 cores, lots of RAM, and 12 well-configured disk drives per node, but you should be able to achieve performance within a factor of 2 to 5 of this level using most hardware.
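To put these figures in perspective, the short calculation below breaks the quoted rate down per node and per core and applies the factor of 2 to 5. It is back-of-the-envelope arithmetic, not a measurement: it assumes the load is spread evenly across the 4 active nodes and their 16 cores, which the text does not state, and the function name is made up for the example.

```python
def ingest_estimates(cluster_rate=100_000_000, active_nodes=4,
                     cores_per_node=16, slowdowns=(2, 5)):
    """Break a cluster-wide ingest rate (points/second) into rough per-unit figures."""
    per_node = cluster_rate / active_nodes   # assumes an even split across nodes
    per_core = per_node / cores_per_node     # assumes an even split across cores
    modest = {factor: cluster_rate / factor for factor in slowdowns}
    return per_node, per_core, modest


per_node, per_core, modest = ingest_estimates()
print(f"per active node: {per_node:,.0f} points/s")
print(f"per core:        {per_core:,.0f} points/s")
for factor, rate in modest.items():
    print(f"{factor}x slower hardware: {rate:,.0f} points/s")
```

Under these assumptions, each active node absorbs about 25 million points per second, and hardware that is 2 to 5 times slower would still ingest roughly 20 to 50 million points per second across the cluster.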

This ingest rate sounds like a lot of data, possibly more than most of us would need to handle, but in Chapter 5 we will show why ingest rates on that level can be very useful even for relatively modest applications.