Graphics Reference

In-Depth Information

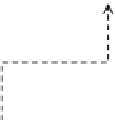

Fig. 7.3

A wrapper model for FS

Wrapper models can achieve the purpose of improving the particular learner's

predictive performance. A wrapper model consists of two stages (see Fig.

7.3

): (1)

feature subset selection, which selects the best subset using the accuracy provided

by the learning algorithm (on training data) as a criterion; (2) is the same as in the

filter model. Since we keep only the best subset in stage 1, we need to learn again the

DM model with the best subset. Stage 1 corresponds with the data reduction task.

It is well-known that the estimated accuracy using the training data may not

reflect the same accuracy on test data, so the key issue is how to truly estimate or

generalize the accuracy over non-seen data. In fact, the goal is not exactly to increase

the predictive accuracy, rather to improve overall the DM algorithm in question.

Solutions come from the usage of internal statistical validation to control the over-

fitting, ensembles of learners [

41

] and hybridizations with heuristic learning like

Bayesian classifiers or Decision Tree induction [

23

]. However, when data size is

huge, the wrapper approach cannot be applied because the learning method is not

able to manage all data.

Regarding filter models, they cannot allow a learning algorithm to fully exploit

its bias, whereas wrapper methods do.

7.2.3.3 Embedded Feature Selection

The

embedded

approach [

16

] is similar to the wrapper approach in the sense that the

features are specifically selected for a certain learning algorithm. Moreover, in this

approach, the features are selected during the learning process.