Cryptography Reference

In-Depth Information

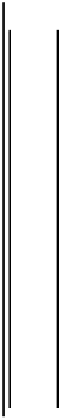

Example of Binary Encoding/Decoding

Diagram 11.1

M

E

S

S

A

G

E

N

O

I

S

Y

D

E

C

O

D

E

R

Encoder

f

(

C

)=

101010

101010

C

−−−−→

111010

−−−−−−→

101010

−−−−−−→

C

H

A

N

N

E

L

−−−−−−→

S

O

U

R

C

E

Now we maybegin to formalize these notions.

Codewords and Hamming Distance

Let

n

be defined as in Section 11.4. Then

a

code of length

n

is a nonemptysubset

C

of

M

be the message space and let

M

n

, and an element of

C

is called

M

a

codeword

.

11.5

For example, if

, the codes are binary. If

n

= 3, for

instance, and we are dealing onlywith binaryrepetition codes, then the code is

the set,

M

=

{

0

,

1

}

C

=

{

000

,

111

}

.

In general, if

|

M

|

=

q

, then the codes using the message space

M

are called

q

-

n

since a completelyarbitrary

such set would be unwieldy. Thus, we typically restrict

arycodes. We must place some restrictions on

M

F

q

for some prime power

q

=

p

m

, and the codes are therefore vectors in the vector

space

M

to be a finite field

n

.

11.6

With this notion in mind, we now need a concept of distance

between the vectors under consideration. This was given byHamming, see [117]

and [118].

The

Hamming distance

d

(

u,v

), for two vectors

u,v

M

n

is defined as

the number of components on which theydisagree. For instance, if

u

=

(1

,

0

,

1

,

0

,

1

,

0) and

v

=(1

,

1

,

1

,

0

,

1

,

0) from our above example, then

d

(

u,v

)=1,

since theydisagree on onlythe second component. Similarl, if

u

=(3

,

4

,

2

,

1)

and

v

=(3

,

2

,

2

,

0) are vectors in

∈

M

5

, then

d

(

u,v

) = 2 since theydiffer at the

second and fourth components. Hence, the Hamming distance can be employed

F

11.5

These are often called

block codes

since there exist codes where the codewords do not have

fixed length.

11.6

Such codes are typically called

linear codes

, whose

information rate

or

code rate

is given

by log

q

(

M

)

/n

. Later in this section, we will study linear codes in depth.

Search WWH ::

Custom Search