Biomedical Engineering Reference

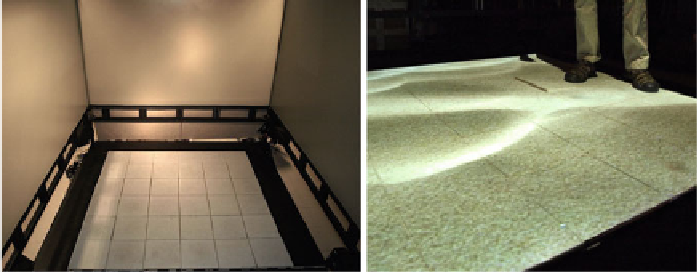

Fig. 17.6 The floor interface is situated within an immersive, rear-projected virtual environment simulator. Right: visual feedback is provided by top-down video projection (in the instance shown,

Limited research to date has addressed the usability of floor-based touch interfaces. Because the feet play very different roles in human movement than the hands do, the extent to which techniques adopted for use with the fingers are useful for interaction via the feet is questionable and requires further investigation. For example, when users are standing, the need to maintain stability strongly constrains the placement of their feet. In addition, there are obvious anatomical differences between the hands and feet, with toes rarely used for prehensile tasks. Nonetheless, human movement research has studied foot movement control in diverse settings. Visually guided targeting with the foot has been found to be effectively modeled by a version of Fitts' Law similar to that employed for modeling hand movements, with an execution time about twice as long as for a similar hand movement [15]. However, for many interfaces, usability is manifestly co-determined by both operator and device limitations (e.g., sensor noise or inaccuracy), imposing a window on both.
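As a rough illustration of the Fitts' Law relationship mentioned above, the following sketch uses the common Shannon formulation, MT = a + b log2(D/W + 1), where D is the distance to the target and W its width. The coefficients `a` and `b` are hypothetical placeholders, not values reported in [15], and applying a uniform factor of two to the whole movement time is only one simple way to express the "about twice as long" observation for the foot.

```python
import math


def index_of_difficulty(distance_cm: float, width_cm: float) -> float:
    """Shannon-form index of difficulty in bits: log2(D / W + 1)."""
    if distance_cm < 0 or width_cm <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance_cm / width_cm + 1.0)


def movement_time(
    distance_cm: float,
    width_cm: float,
    a: float = 0.1,               # hypothetical intercept, seconds
    b: float = 0.15,              # hypothetical slope, seconds per bit
    effector_scale: float = 1.0,  # 1.0 = hand; larger values slow the movement
) -> float:
    """Predicted movement time in seconds under the assumed coefficients."""
    return effector_scale * (a + b * index_of_difficulty(distance_cm, width_cm))


# Assumption: model the foot as a uniformly scaled copy of the hand model.
FOOT_SCALE = 2.0

if __name__ == "__main__":
    d, w = 30.0, 10.0  # example target: 30 cm away, 10 cm wide
    print("ID (bits):", index_of_difficulty(d, w))
    print("hand MT (s):", movement_time(d, w))
    print("foot MT (s):", movement_time(d, w, effector_scale=FOOT_SCALE))
```

In practice `a` and `b` would be fitted separately for each effector from measured movement times, so the foot model need not be an exact multiple of the hand model.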

Augsten et al. studied users' performance in selecting keys on a touch-screen keyboard projected on the floor, using precision optical motion capture to track the foot location, a visual crosshair display to indicate the location being pointed to, and a pressure threshold to determine pressing. They found that users were able to select target buttons (keyboard keys) having one of three dimensions, 1.1 × 1.7, 3.1 × 3.5, or 5.3 × 5.8 cm, with respective error rates of 28.6, 9.5, or 3.0 %. Sensing accuracy was not the limiting factor in this study, since the motion capture input provided sub-millimeter accuracy. The authors undertook further studies to determine users' preferences with respect to the part of the foot to be used as a pointer for selection, and to determine the extent to which non-intentional stepping actions could be discriminated from volitional selection operations.

Visell et al. investigated the usability of a floor interface consisting of an array of instrumented tiles by assessing users' abilities to select on-screen virtual buttons of different sizes presented at different distances and directions [39]. This example is described further in the following sections.
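The selection scheme described for the Augsten et al. study (a tracked pointing location combined with a pressure threshold) can be sketched as a simple hit test. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Key` class, the `select_key` function, the pressure units, and the threshold value are all hypothetical, and only the 3.1 × 3.5 cm key size is taken from the text.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Key:
    """Axis-aligned rectangular floor target (hypothetical layout, in cm)."""
    label: str
    x_cm: float       # left edge
    y_cm: float       # lower edge
    width_cm: float
    height_cm: float

    def contains(self, px: float, py: float) -> bool:
        # Half-open intervals so adjacent keys never both claim a point.
        return (self.x_cm <= px < self.x_cm + self.width_cm
                and self.y_cm <= py < self.y_cm + self.height_cm)


def select_key(
    keys: Sequence[Key],
    pointer_xy: Tuple[float, float],
    pressure: float,
    threshold: float,
) -> Optional[Key]:
    """Return the key under the pointer if pressure reaches the threshold."""
    if pressure < threshold:
        return None  # foot is resting or hovering, not pressing
    px, py = pointer_xy
    for key in keys:
        if key.contains(px, py):
            return key
    return None  # pressed, but outside every key


if __name__ == "__main__":
    # Two adjacent keys of the medium size reported in the text (3.1 x 3.5 cm).
    row = [Key("A", 0.0, 0.0, 3.1, 3.5), Key("B", 3.1, 0.0, 3.1, 3.5)]
    hit = select_key(row, pointer_xy=(4.0, 1.0), pressure=0.9, threshold=0.5)
    print(hit.label if hit else "no selection")
```

A single fixed threshold is the simplest possible rule. Distinguishing unintentional steps from deliberate selections, as the further studies mentioned above set out to do, would likely need more than this.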