Biomedical Engineering Reference


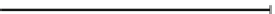

Fig. 12.5 Example footstep normal force and synthesized waveform using the simple normal force texture algorithm described in the text. The respective signals were captured through force and acceleration sensors integrated in the vibrotactile display device [108].

governed by:

$$F(t) = m\ddot{x} + b\dot{x} + K(x - x_0), \qquad x_0 = k_2\,\xi(t)/K \tag{12.3}$$

In the stuck state, the virtual surface admittance $Y(s) = \dot{x}(s)/F(s)$ is given, in the Laplace-transformed ($s$) domain, by:

$$Y(s) = s\left(ms^2 + bs + K\right)^{-1}, \qquad K = \frac{k_1 k_2}{k_1 + k_2} \tag{12.4}$$

where $\xi(t)$ represents the net plastic displacement up to time $t$. A Mohr-Coulomb yield criterion is applied to determine slip onset: when the force on the plastic unit exceeds a threshold value (which may be constant or noise-dependent), a slip event increments $\xi(t)$ by $\Delta\xi(t)$, with an associated energy loss $\Delta W$ representing the inelastic work of fracture growth.
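To make the stick-slip logic concrete, the following is a minimal Python sketch that integrates Eq. (12.3) during a footstep and records slip events. In the stuck state the model is a damped mass-spring system with the series stiffness $K$ of Eq. (12.4), so its undamped natural frequency is $\sqrt{K/m}$. The names (`simulate`, `footstep`, `State`, `SlipEvent`), all parameter values, the half-sine load, and three modelling choices are assumptions, not details from the text: the load on the plastic unit is taken to be the spring force $K(x - x_0)$; each slip lowers that load by a fixed `force_drop`, which by Eq. (12.3) (where $K(x - x_0) = Kx - k_2\xi$) means $\Delta\xi = $ `force_drop` $/k_2$; and $\Delta W$ is crudely estimated as load times $\Delta\xi$.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class SlipEvent:
    t: float     # slip onset time (s)
    d_xi: float  # plastic increment added to xi(t)
    d_w: float   # dissipated-energy estimate (hypothetical formula, see simulate)


@dataclass
class State:
    x: float = 0.0   # displacement of the mass
    v: float = 0.0   # velocity of the mass
    xi: float = 0.0  # net plastic displacement xi(t)


def simulate(force, duration, dt, m, b, k1, k2, f_yield, force_drop, noise=0.0, seed=0):
    """Integrate Eq. (12.3) with semi-implicit Euler and record slip events.

    Assumptions (not from the text): the load on the plastic unit is the spring
    force K*(x - x0); each slip lowers that load by `force_drop`, i.e.
    d_xi = force_drop / k2; dissipated work is estimated as load * d_xi;
    a noisy threshold (relative std `noise`) is redrawn after every slip.
    """
    rng = random.Random(seed)
    K = k1 * k2 / (k1 + k2)  # series stiffness, Eq. (12.4)

    def draw_threshold():
        return max(0.0, f_yield * (1.0 + noise * rng.gauss(0.0, 1.0)))

    s = State()
    threshold = draw_threshold()
    events = []
    for i in range(int(round(duration / dt))):
        t = i * dt
        f_p = K * (s.x - k2 * s.xi / K)      # K (x - x0), with x0 = k2 xi / K
        if f_p > threshold:                  # yield test: slip onset
            d_xi = force_drop / k2
            events.append(SlipEvent(t, d_xi, f_p * d_xi))
            s.xi += d_xi
            f_p -= force_drop                # equals K (x - x0) recomputed with the new xi
            threshold = draw_threshold()
        a = (force(t) - b * s.v - f_p) / m   # m x'' = F - b x' - K (x - x0)
        s.v += a * dt                        # semi-implicit (symplectic) Euler step
        s.x += s.v * dt
    return s, events


def footstep(t, peak=700.0, stance=0.6):
    """Hypothetical footstep load (N): a half-sine lasting `stance` seconds."""
    return peak * math.sin(math.pi * t / stance) if 0.0 <= t < stance else 0.0


if __name__ == "__main__":
    # Hypothetical parameters: m (kg), b (N s/m), k1, k2 (N/m), forces in N.
    state, events = simulate(footstep, duration=0.8, dt=1e-4, m=0.1, b=5.0,
                             k1=2.0e4, k2=2.0e4, f_yield=100.0, force_drop=40.0,
                             noise=0.2)
    print(f"{len(events)} slip events, final xi = {state.xi:.4f} m")
```

With these placeholder values the stuck-state system rings at about 50 Hz, and slips recur whenever the load on the plastic unit climbs back above the (optionally noisy) threshold, so a heavier footstep yields a denser train of events. A load that never reaches the threshold produces no events at all.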

Slip displacements are rendered as discrete transient signals using an event-based approach [55]. The high-frequency components of such transient mechanical events are known to depend on the materials and forces of interaction, and we model some of these dependencies when synthesizing the transients [110]. An example of normal force texture resulting from a footstep load during walking is shown in Fig. 12.5.
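A minimal sketch of event-based transient rendering is given below in Python. It is an illustrative stand-in, not the synthesis method of [110]: each slip event, given as an (onset time, dissipated energy) pair, is rendered as one exponentially decaying sinusoid added into an output buffer. The function name `render_transients`, the `MATERIALS` presets (ringing frequency and decay time per ground type), the sample rate, and the mapping from energy to amplitude (proportional to the square root of the energy) are all hypothetical choices.

```python
import math

# Hypothetical material presets: (ringing frequency in Hz, decay time in s).
# These numbers are illustrative placeholders, not values from the text.
MATERIALS = {
    "gravel": (300.0, 0.004),
    "snow": (120.0, 0.010),
}


def render_transients(events, duration, fs=8000, material="gravel", gain=1.0):
    """Sum one exponentially decaying sinusoid per slip event.

    events: iterable of (onset_time_s, dissipated_energy_j) pairs.
    The amplitude is gain * sqrt(energy), an assumed mapping under which
    events that dissipate more work produce stronger transients.
    """
    freq, tau = MATERIALS[material]
    n = int(round(duration * fs))
    out = [0.0] * n
    length = int(round(5 * tau * fs))  # stop after about 5 decay time constants
    for t0, energy in events:
        start = int(round(t0 * fs))
        amp = gain * math.sqrt(max(energy, 0.0))
        for k in range(length):
            i = start + k
            if i < 0:
                continue  # skip samples before the start of the buffer
            if i >= n:
                break     # transient runs past the end of the buffer
            t = k / fs
            out[i] += amp * math.exp(-t / tau) * math.sin(2.0 * math.pi * freq * t)
    return out


if __name__ == "__main__":
    # Two hypothetical slip events: (onset in s, dissipated energy in J).
    signal = render_transients([(0.10, 0.04), (0.25, 0.09)], duration=0.5)
    print(len(signal), max(abs(v) for v in signal))
```

Because every event shares the same waveform shape, material dependence enters only through the preset frequency and decay time, and force dependence only through the dissipated energy; richer models could make those parameters functions of the load as well.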

12.3.3 Multimodal Displays

Rendering multimodal walking interactions raises several issues, including how to combine visual, auditory and haptic rendering to enable truly multimodal experiences. A model of the global rendering loop for interactive multimodal experiences is summarized in Fig. 12.6. Walking over virtual grounds requires specific hardware devices that can coherently present visual, vibrotactile and acoustic signals. Several devices dedicated to multimodal rendering of walking over virtual grounds are described below in Sect. 12.3.4, while examples of multimodal scenarios are discussed in Sect. 12.3.5. Multimodal rendering is often complicated by hardware and software constraints. In some cases, crossmodal perceptual effects