Biomedical Engineering Reference


sequence of symbols, acting as a stochastic state machine that generates a symbol each time a transition is made from one state to the next. Transitions between states are specified by transition probabilities. A Markov process is a process that moves from state to state depending on the previous n states. The process is called an order-n model, where n is the number of states affecting the choice of the next state. The Markov process considered here is first order: the probability of a state depends only on the directly preceding state.
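As a minimal sketch, assuming a hypothetical three-state chain whose states double as the emitted symbols A, B, and C (the transition probabilities below are made up for illustration), the following Python code treats a first-order Markov process as a stochastic state machine. At each step it draws the next state using only the current state's row of transition probabilities and emits that state as a symbol.

```python
import random

# Hypothetical first-order transition table (illustrative values only).
# TRANSITIONS[s][t] is the probability of moving from state s to state t;
# each row sums to 1.
TRANSITIONS = {
    "A": {"A": 0.5, "B": 0.5, "C": 0.0},
    "B": {"A": 0.0, "B": 0.2, "C": 0.8},
    "C": {"A": 1.0, "B": 0.0, "C": 0.0},
}


def sample_chain(start, length, transitions=TRANSITIONS, seed=None):
    """Generate `length` symbols, beginning with the state `start`.

    First order: the next state is drawn from the current state's row only;
    no earlier state influences the choice.
    """
    rng = random.Random(seed)
    state = start
    symbols = [state]
    for _ in range(length - 1):
        row = transitions[state]
        next_states = list(row)
        weights = [row[s] for s in next_states]
        # Zero-weight transitions are never selected by random.choices.
        state = rng.choices(next_states, weights=weights, k=1)[0]
        symbols.append(state)
    return symbols


print("".join(sample_chain("A", 12, seed=1)))
```

An order-n process would instead key the table on the last n states rather than on the current state alone.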

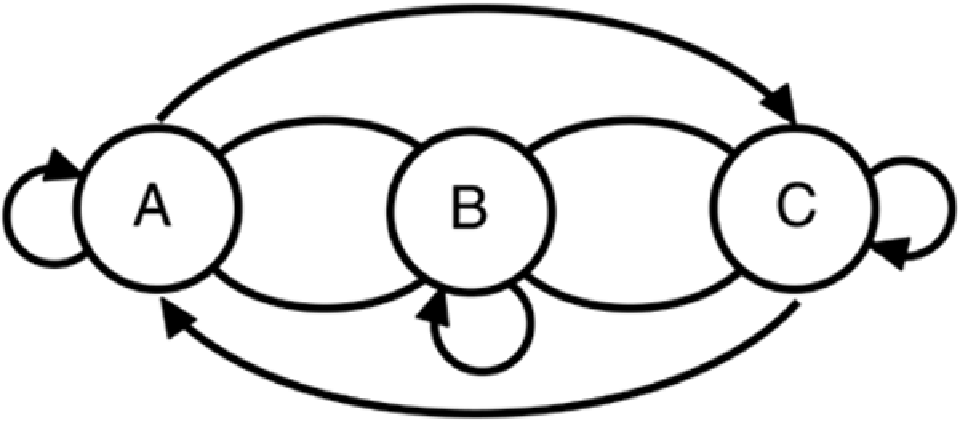

To understand HMMs, consider the concept of a Markov Chain, a process that can be in one of a number of states at any given time (see Figure 7-13). A Markov Chain is defined by the probability of each state transition occurring, given the current state. It is a non-deterministic system in which the probability of moving from one state to another is assumed not to vary with time. An HMM is a variation of a Markov Chain in which the states in the chain are hidden: each state generates an observation, and the state sequence must be inferred from those observations because it cannot be observed directly.
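The contrast between a visible Markov Chain and an HMM can be sketched in Python under stated assumptions. The two-state model below (states, observation names, and all probabilities) is hypothetical and chosen only for illustration. `chain_probability` scores a fully visible state sequence as the start probability times one transition probability per step, reusing the same table at every step because the chain is assumed not to vary with time. `sample_hmm` adds a hypothetical emission table and returns the hidden states alongside the observations, of which an observer would see only the latter.

```python
import random

# Hypothetical two-state model (illustrative numbers only).
STATES = ["Rainy", "Sunny"]
START = {"Rainy": 0.6, "Sunny": 0.4}
# Time-homogeneous: the same transition table is used at every step.
TRANS = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
# HMM only: probability of each observation given the hidden state.
EMIT = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def chain_probability(states):
    """P(s1, ..., sT) for a visible first-order Markov Chain."""
    p = START[states[0]]
    for prev, cur in zip(states, states[1:]):
        p *= TRANS[prev][cur]
    return p


def sample_hmm(length, seed=None):
    """Return (hidden states, observations); an observer sees only the latter."""
    rng = random.Random(seed)

    def draw(dist):
        keys = list(dist)
        return rng.choices(keys, weights=[dist[k] for k in keys], k=1)[0]

    state = draw(START)
    hidden, observed = [], []
    for _ in range(length):
        hidden.append(state)
        observed.append(draw(EMIT[state]))  # emission depends on hidden state
        state = draw(TRANS[state])          # transition depends on it too
    return hidden, observed


print(chain_probability(["Rainy", "Rainy", "Sunny"]))  # computes 0.6 * 0.7 * 0.3
hidden, observed = sample_hmm(5, seed=4)
print(observed)  # the visible part; `hidden` would have to be inferred
```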

Figure 7-13. Markov Chain. (A), (B), and (C) represent states, and the arrows connecting the states represent transitions.

Like a neural network classifier, an HMM must be trained before it can be used. Training establishes the transition probabilities for each state in the Markov Chain and, in an HMM, the probability of each observation in each state. When presented with data in the database (sequence data, for example), the HMM provides a measure of how closely the data patterns resemble the data used to train the model. HMM-based classifiers are considered approximations because of two often unrealistic assumptions: that a state depends only on its predecessors, and that this dependence does not change over time.
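Both steps described above, training and then measuring how closely new data resembles the training data, can be sketched in Python. This is a simplified illustration, not the method the text necessarily has in mind: `train_counts` assumes the hidden state of every training symbol is known and estimates probabilities by counting (supervised maximum-likelihood estimation), whereas HMMs are commonly trained from observations alone with the Baum-Welch algorithm, which is not shown. `log_likelihood` is the standard forward algorithm, which sums over every possible hidden path and rescales at each step to avoid numerical underflow. The state names `S1` and `S2` and the training sequences are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_counts(labeled_sequences):
    """Estimate start, transition, and emission probabilities by counting.

    Each example is a list of (hidden_state, observation) pairs with the
    states known. No smoothing: unseen events get probability 0.
    """
    start, trans, emit = Counter(), defaultdict(Counter), defaultdict(Counter)
    for seq in labeled_sequences:
        start[seq[0][0]] += 1
        for state, obs in seq:
            emit[state][obs] += 1
        for (prev, _), (cur, _) in zip(seq, seq[1:]):
            trans[prev][cur] += 1

    def normalize(counter):
        total = sum(counter.values())
        return {k: v / total for k, v in counter.items()}

    return (
        normalize(start),
        {s: normalize(c) for s, c in trans.items()},
        {s: normalize(c) for s, c in emit.items()},
    )


def log_likelihood(observations, start, trans, emit):
    """Forward algorithm: log P(observations | model) over all hidden paths."""
    states = list(emit)
    alpha = {s: start.get(s, 0.0) * emit[s].get(observations[0], 0.0) for s in states}
    log_prob = 0.0
    for t, obs in enumerate(observations):
        if t > 0:
            alpha = {
                cur: emit[cur].get(obs, 0.0)
                * sum(alpha[prev] * trans.get(prev, {}).get(cur, 0.0) for prev in states)
                for cur in states
            }
        total = sum(alpha.values())
        if total == 0.0:
            return float("-inf")  # no hidden path can produce this sequence
        log_prob += math.log(total)
        alpha = {s: a / total for s, a in alpha.items()}  # rescale each step
    return log_prob


# Hypothetical labeled training data: (hidden state, observed symbol) pairs.
training = [
    [("S1", "A"), ("S1", "A"), ("S2", "G"), ("S2", "C")],
    [("S1", "A"), ("S1", "T"), ("S2", "G"), ("S2", "G")],
]
model = train_counts(training)
print(log_likelihood(list("AAGC"), *model))  # log(0.75 * 0.375 * 0.375 * 0.25)
print(log_likelihood(list("GCAT"), *model))  # -inf: training never starts with G
```

A higher (less negative) log-likelihood means the sequence more closely resembles the training data; a classifier would typically compare such scores across several trained models.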