Database Reference

In-Depth Information

data analysis, the employment of exhaustive search, and thus the guaranteed

finding of the global optimum, will be infeasible.

In addition to first-order selection schemes, for more features, heuris-

tic search strategies employing tree search schemes, e.g., Sequential For-

ward/Backward Selection (SFS/SBS) were devised [2.16]. These are also im-

plemented in the method collection and corresponding toolbox within the

QuickCog system [2.28]. These heuristic approaches systematically reduce

the number of searched and assessed feature combinations. As many combi-

nations are left out of consideration, the global optimum can be missed, and

convergence to just a local optimum solution for the selection problem is guar-

anteed. In SFS, for instance, initially no features are selected. Now each of

the

N

features is tentatively selected and its effect on the figure of merit, e.g.,

class regions overlap, is computed. The feature with the best assessment is

permanently selected and frozen. The same procedure is iteratively repeated

for the remaining (

N −

1) features until only one feature can be altered. Now

either the feature combination with the best assessment value can be se-

lected, regardless of the number of selected features, or for a fixed maximum

number of features the row with the best compromise of assessment value

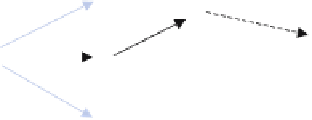

and required minimum number of features will be selected. In SBS, the same

process starts from the initial condition that all features are selected and get

rejected in the process. Figure 2.16 elucidates the SBS process and Table 2.3

shows an example of a selection process protocol for Iris train data using SBS

[2.16] and the

q

s

quality measure [2.28]. As

M ∗

1)

/

2+

M

combinations

have to be assessed in both cases, the computational complexity is given by

O(

M

2

). Thus, for

M

= 16, in this case, a local optimum solution will be

found within approximately 4 seconds compared to more than 18 hours for

an exhaustive search. Though the finding of a global optimum is not guaran-

teed, these robust methods provide good solutions quickly, and in practical

work the global optimum was often found.

9

Comparing these heuristic meth-

ods with the simple first-order selection schemes, it can be stated with some

(

M −

9

These were cases where an exhaustive search for result comparison was still

feasible.

Permanent removal of the

feature maximizing

J

Tentative removal of

each active feature

1 features active

M features active

M-2 features active

M-1 features active

Fig. 2.16.

Illustration of SBS feature selection.

Search WWH ::

Custom Search