Database Reference

In-Depth Information

A

A = 'F'

A = 'T'

B = 'F'

0.23

0.5

C

B

B = 'F',

B = 'F',

B = 'T',

B = 'T',

C = 'F'

C = 'T'

C = 'F'

C = 'F'

D

D = 'F'

0.65

0.54

0.78

0.01

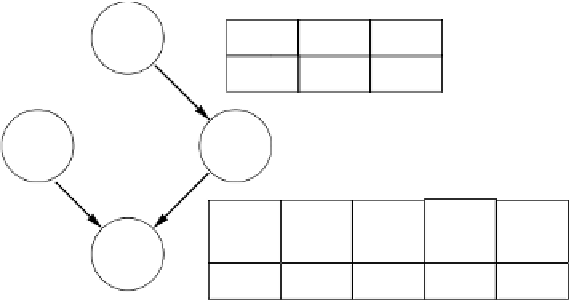

Fig. 6.1.

A Bayesian network example.

the learning problem, which is an intractable problem. In the learning prob-

lem, the objective is to construct a Bayesian network that best describes a

given set of observations about the domain. There are two major approaches

to tackle the problem: the dependency analysis and the search-and-scoring

approaches [6.4]. In short, the dependency analysis approach constructs a

network by discovering the dependency information from data. The search-

and-scoring approach, on the other hand, searches for the optimal network

according to a metric that evaluates the goodness of a candidate network

with reference to the data. While the two approaches try to learn Bayesian

networks differently, they both suffer from their respective drawbacks and

shortcomings. For the former, a straightforward implementation would re-

quire an exponential number of tests [6.5]. Worse still, some test results may

be unreliable [6.5]. For the latter, the search space is often huge, and it is

di

cult to find a good solution.

In this chapter, we propose a hybrid learning framework that combines

the merits of both approaches. The hybrid framework consists of two phases:

the conditional independence test (CI test) phase and the search phase. In

essence, the main idea is that we exploit the information discovered by de-

pendency analysis (CI test phase) to reduce the search space in the search

phase. To tackle the search problem, the idea of cooperative coevolution [6.6],

[6.7], [6.8], which is a modular decomposition evolutionary search approach,

is employed. Our new approach for learning Bayesian networks is called the

cooperative coevolution genetic algorithm (CCGA).

We compare CCGA with another existing learning algorithm, MDLEP [6.9];

CCGA executes much faster. Moreover, CCGA usually performs better in

discovering the original structure that generates the training data.

This chapter is organized as follows. In Section 6.2, we present the back-

grounds of Bayesian networks, the MDL metric, and cooperative coevolution.

Search WWH ::

Custom Search