
[Figure 3.1 diagram labels: parameters (exogenous, endogenous; population level, individual level, component level); parameter setting, divided into parameter tuning (by hand, DOE, metaevolutionary) and parameter control (deterministic, adaptive, self-adaptive). Example methods shown: human experience; sequential parameter optimization; response surface modeling; metaevolutionary; angle control ES; coevolutionary approaches; dynamic penalty function; annealing penalty function; Rechenberg's 1/5th rule; covariance matrix adaptation (CMA/CSA); step size control of ES; SA-PMX; hybridization methods.]

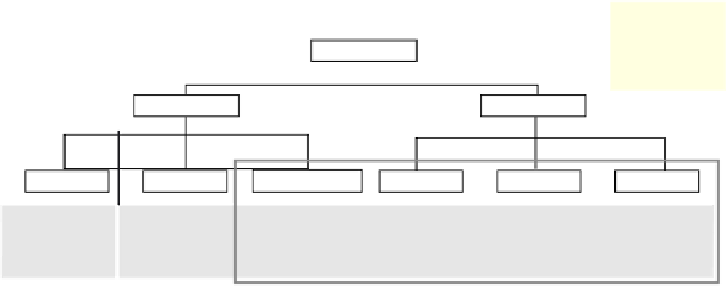

Fig. 3.1. An extended taxonomy of parameter setting techniques based on the taxonomy of Eiben [37], complemented on the parameter tuning branch. For each parameter setting class, a few example methods are shown.

3.2.2 Parameter Tuning

Many parameters of EAs are static, which means that once defined, they are not changed during the optimization process. Typical examples of static parameters are population sizes or initial strategy parameter values. Examples of static parameters in heuristic extensions are static penalty functions. In the approach of Homaifar, Lai and Qi [60], the search domain is divided into a fixed number of areas, each assigned a static penalty that represents the degree of constraint violation.

The disadvantage of setting the parameters statically is the lack of flexibility: useful adaptations during the search process are not possible.
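A static penalty scheme in the spirit of Homaifar, Lai and Qi can be sketched as follows. This is an illustration under stated assumptions, not their exact formulation: the "areas" are modeled as bands of constraint violation, the band thresholds and coefficients are hypothetical values fixed before the run, and the squared-violation penalty follows a common description of the scheme. Constraints are written as g(x) <= 0 and the objective is minimized.

```python
from typing import Callable, Sequence

# Hypothetical violation bands and penalty coefficients. They are chosen once,
# before the run, and never change: these are the static parameters.
LEVEL_BOUNDS = (0.1, 1.0, 10.0)                # upper bounds of the first three bands
LEVEL_COEFFS = (10.0, 100.0, 1000.0, 10000.0)  # one coefficient per band; last band is open-ended


def level_coefficient(violation: float) -> float:
    """Return the static penalty coefficient of the band the violation falls into."""
    for bound, coeff in zip(LEVEL_BOUNDS, LEVEL_COEFFS):
        if violation <= bound:
            return coeff
    return LEVEL_COEFFS[-1]


def penalized_fitness(
    f: Callable[[Sequence[float]], float],
    constraints: Sequence[Callable[[Sequence[float]], float]],
    x: Sequence[float],
) -> float:
    """Fitness to be minimized: objective plus static penalties for violated g(x) <= 0."""
    total = f(x)
    for g in constraints:
        violation = max(0.0, g(x))
        if violation > 0.0:
            total += level_coefficient(violation) * violation ** 2
    return total


def sphere(x: Sequence[float]) -> float:
    return x[0] ** 2 + x[1] ** 2


def g1(x: Sequence[float]) -> float:
    # Constraint x0 + x1 >= 1, rewritten as 1 - x0 - x1 <= 0.
    return 1.0 - x[0] - x[1]


print(penalized_fitness(sphere, [g1], [0.5, 0.5]))  # feasible point: 0.5
print(penalized_fitness(sphere, [g1], [0.0, 0.0]))  # violation 1.0 -> 100 * 1.0**2: 100.0
```

Because the coefficients never change, the same infeasible point is always penalized identically, which is exactly the lack of flexibility described above.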

By Hand

In most cases, static parameters are set by hand. Hence, their influence on the behavior of the EA depends on human experience, and user-defined settings are not necessarily optimal.

Design of Experiments, Relevance Estimation and Value Calibration

Design of experiments (DOE) offers the practitioner a way of determining optimal parameter settings. It starts with determining the objectives of an experiment and selecting the parameters (factors) for the study. The quality of the experimental outcome (response) guides the search for appropriate settings.
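The DOE workflow above (choose factors and their levels, measure a response, pick the best setting) can be sketched with a full factorial design. Everything here is a hypothetical stand-in: `run_ea` is a toy elitist EA on the sphere function, and the factor levels and number of replicates are arbitrary illustration values. Because EA output is stochastic, each design point is run several times and the mean is used as the response.

```python
import itertools
import random
import statistics


def sphere(x):
    return sum(v * v for v in x)


def run_ea(pop_size: int, sigma: float, seed: int, evals: int = 2000, dim: int = 5) -> float:
    """Hypothetical algorithm under study: a simple (mu + mu) EA on the sphere.

    Returns the best objective value within a fixed evaluation budget,
    so larger populations get fewer generations.
    """
    rng = random.Random(seed)
    pop = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(pop_size)]
    fits = [sphere(x) for x in pop]
    used = pop_size
    while used + pop_size <= evals:
        # Each parent produces one Gaussian-mutated offspring.
        kids = [[v + rng.gauss(0.0, sigma) for v in x] for x in pop]
        kid_fits = [sphere(x) for x in kids]
        used += pop_size
        # Truncation selection keeps the best pop_size of parents plus offspring.
        merged = sorted(zip(fits + kid_fits, pop + kids), key=lambda t: t[0])[:pop_size]
        fits = [f for f, _ in merged]
        pop = [x for _, x in merged]
    return min(fits)


# Factors and their levels (hypothetical choices for illustration).
FACTORS = {"pop_size": [5, 20, 50], "sigma": [0.01, 0.1, 1.0]}
REPLICATES = 5  # independent runs per design point


def full_factorial_doe(factors, replicates):
    names = list(factors)
    results = {}
    for levels in itertools.product(*(factors[n] for n in names)):
        setting = dict(zip(names, levels))
        # Response: mean best fitness over independent replicates.
        responses = [run_ea(**setting, seed=r) for r in range(replicates)]
        results[levels] = statistics.mean(responses)
    best = min(results, key=results.get)
    return dict(zip(names, best)), results


best_setting, table = full_factorial_doe(FACTORS, REPLICATES)
for levels, resp in sorted(table.items()):
    print(levels, f"{resp:.3g}")
print("best:", best_setting)
```

A full factorial design grows multiplicatively with the number of factors and levels, which is one reason model-based approaches such as response surface modeling are used to reduce the number of runs.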

Recently, Bartz-Beielstein et al. [5] developed sequential parameter optimization (SPO), a parameter tuning method for stochastically disturbed algorithm output. It combines classical regression methods with statistical approaches for deterministic algorithms, such as the design and analysis of computer experiments (DACE).
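The sequential idea behind SPO (evaluate an initial design, fit a model of the response, propose a promising new setting, re-evaluate the incumbent to reduce noise, repeat) can be sketched as below. This is a simplified, hypothetical illustration, not Bartz-Beielstein et al.'s implementation: it tunes a single parameter, the fixed step size `sigma` of a toy (1+1)-ES named `run_es`, on a log scale, and it uses only a quadratic regression surface, whereas SPO also draws on DACE-style surrogate models. All function names and budgets are assumptions.

```python
import math
import random
import statistics


def run_es(sigma: float, seed: int, evals: int = 500, dim: int = 5) -> float:
    """Hypothetical noisy algorithm: (1+1)-ES with a fixed step size on the sphere."""
    rng = random.Random(seed)
    x = [rng.uniform(-5, 5) for _ in range(dim)]
    fx = sum(v * v for v in x)
    for _ in range(evals - 1):
        y = [v + rng.gauss(0.0, sigma) for v in x]
        fy = sum(v * v for v in y)
        if fy <= fx:
            x, fx = y, fy
    return math.log10(max(fx, 1e-300))  # log response: fitness spans many magnitudes


def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    coef = [0.0] * 3
    for r in (2, 1, 0):
        coef[r] = (m[r][3] - sum(m[r][c] * coef[c] for c in range(r + 1, 3))) / m[r][r]
    return coef


def fit_quadratic(ts, ys):
    """Least-squares fit of y = c0 + c1*t + c2*t^2 via the normal equations."""
    rows = [[1.0, t, t * t] for t in ts]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    return solve3(ata, aty)


def spo_sketch(lo=-3.0, hi=1.0, n_init=5, iters=6, reps=3):
    """Simplified SPO-style loop over t = log10(sigma) in [lo, hi]."""
    data = {}  # t -> list of observed responses
    seed = 0

    def evaluate(t, n):
        nonlocal seed
        for _ in range(n):
            data.setdefault(t, []).append(run_es(10 ** t, seed))
            seed += 1

    # 1. Initial design: equally spaced points, each with a few replicates.
    for i in range(n_init):
        evaluate(lo + i * (hi - lo) / (n_init - 1), reps)
    for _ in range(iters):
        ts = list(data)
        means = [statistics.mean(data[t]) for t in ts]
        # 2. Fit a quadratic response surface to the mean responses.
        c0, c1, c2 = fit_quadratic(ts, means)
        # 3. Propose the surface minimizer if convex, else the best observed point.
        if c2 > 0:
            cand = min(max(-c1 / (2 * c2), lo), hi)
        else:
            cand = ts[means.index(min(means))]
        evaluate(cand, reps)
        # 4. Re-evaluate the incumbent so its estimate becomes less noisy.
        best_t = min(data, key=lambda t: statistics.mean(data[t]))
        evaluate(best_t, reps)
    best_t = min(data, key=lambda t: statistics.mean(data[t]))
    return 10 ** best_t, data


best_sigma, observations = spo_sketch()
print(f"tuned sigma: {best_sigma:.3g}")
```

Re-evaluating the incumbent in every iteration mirrors the SPO idea of spending more replicates on promising settings, so that a single lucky run does not decide the result.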
