Database Reference

In-Depth Information

set

{

1

,

2

,...,M}

of possible classes. Each

θ

i

is considered to be the index of the

category to which the

x

i

is the outcome of the

set of measurements made upon that individual. We use to say that ”

i

th individual belongs, and each

x

i

belongs

θ

i

” when we mean precisely that the

i

to

th individual, upon which measurements

x

i

have been observed, belongs to category

θ

i

.

x, θ

x

Anewpair(

) is given, where only the measurement

is observable, and it

is desired to estimate

by using the information contained in the set of correctly

classified points. We shall call

θ

x

n

∈ x

1

,x

2

,...,x

n

the nearest neighbor of

x

if

x

n

,x

min

d

(

x

i

,x

)=

d

(

)

,

i

=1

,

2

,...,n

θ

n

of its nearest

The NN classification decision method gives to

x

the category

x

n

. In case of tie for the nearest neighbor, the decision rule has to be

modified in order to break it. A mistake is made if

neighbor

θ

n

.

An immediate extension to this decision rule is the so called

k

-NN approach

[4], which assigns to the candidate

=

θ

x

the class which is most frequently rep-

resented in the

k

nearest neighbors to

x

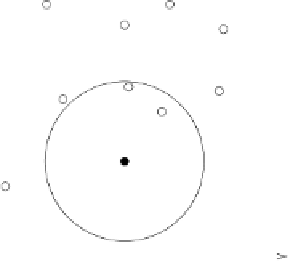

. In Figure 3 , for example, the 3-NN

decision rule would decide

x

as belonging to class

θ

o

because two of the three

nearest neighbors of

θ

o

.

Much research has been devoted to the

K

-NN rule [6]. One of the most im-

portant results is that

K

-NN has asymptotically very good performance. Loosely

speaking, for a very large design set, the expected probability of incorrect clas-

sifications (error)

x

belongs to class

R

achievable with

K

-NN is bounded as follows:

0 Class Case

+

+

+

+

+

Candidate

+ Class case

+

Fig. 3.

3-NN classification method. A voting method has to be implemented to take

the final decision. The classification given in this example by simple voting would be

class=circle.

Search WWH ::

Custom Search