Information Technology Reference

In-Depth Information

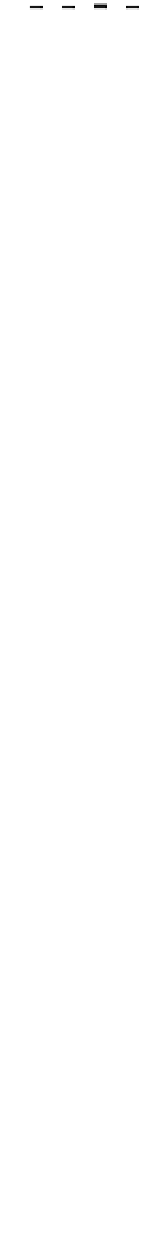

(a)

(b)

Fig. 5.

(a) average memory accesses produced per update on the GPU. (b) overall

update overhead on both the CPU and the GPU per update.

GALE. Such a significant improvement benefits from our mechanism essentially.

Actually, in TSTT, all produced unit modifications cover all update information

without any redundancy. So, excluding any of them must lose some update

information. In another word, the number of memory modifications for on-line

updates are minimized by TSTT.

4.2 Overall Update Overhead

Then, we take into account the overall update overhead (in time cost) for both

off-line updates (on the CPU) and on-line updates (on the GPU). Since TSTT's

on-line update overhead are too small, we

times it by

10

3

.

As shown in Fig. 5(b), the average update overhead per update of TSTT's

on-line updates is only 0

.

72

ns

in the worst case, achieving a speedup than

GALE by a factor over 5000. Such a fast speed benefits from that the number

of produced unit modifications in TSTT are minimized, and all of them can be

processed in parallel on the GPU.

However, processing off-line updates in TSTT costs more time than that in

GALE by a factor of 2

.

3

3

.

4. That's because some additional operations are

required in TSTT, such as leaf pushing and list management. Even though,

in comparison with GALE, TSTT's overall update overhead is still reduced by

89

.

6%

∼

93

.

5%, which demonstrates clearly that TSTT's update mechanism is

more ecient.

∼

4.3 Comprehensive Performance

In order to evaluate the comprehensive performance, we process a 16

M

gener-

ated lookup requests in TSTT and GALE respectively, with update frequency

increasing to 100

,

000

updates/s

. Then, we measure the throughputs with Million

Lookups Per Second (MLPS) in all cases.

As presented in Fig. 6, without any updates, TSTT provides the same

throughput (539

MLPS

) as GALE. That's because their lookup approaches are