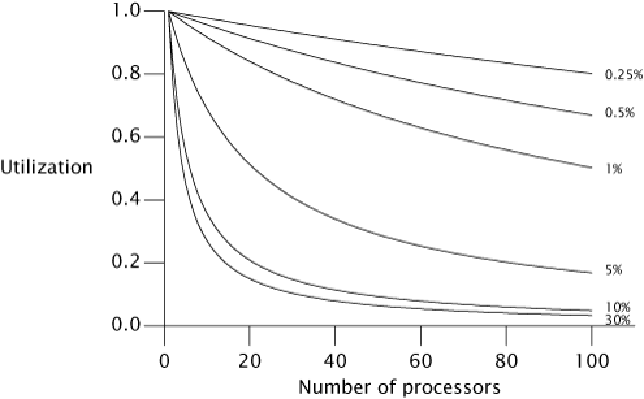

With ten processors, a program with 10% serialization can achieve at most a speedup of 5.3 (at 53% utilization), and with 100 processors it can achieve at most a speedup of 9.2 (at 9% utilization). It takes a lot of inefficiently utilized CPUs to never get to that factor of ten.
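
The figures above are consistent with Amdahl's law, under which a program whose serial fraction is F can be sped up by at most 1/(F + (1 - F)/N) on N processors. The following is a minimal sketch of that arithmetic; the class and method names are illustrative and do not come from the text.

```java
import java.util.Locale;

/** Sketch of the Amdahl's law arithmetic; all names here are hypothetical. */
final class AmdahlSketch {

    /** Upper bound on speedup for a given serial fraction (0..1) and processor count. */
    static double maxSpeedup(double serialFraction, int processors) {
        return 1.0 / (serialFraction + (1.0 - serialFraction) / processors);
    }

    /** Utilization, taken here as the achieved speedup divided by the processor count. */
    static double utilization(double serialFraction, int processors) {
        return maxSpeedup(serialFraction, processors) / processors;
    }

    public static void main(String[] args) {
        double serialFraction = 0.10; // the 10% serialization case discussed above
        for (int n : new int[] {10, 100}) {
            System.out.printf(Locale.ROOT,
                    "N=%d: speedup <= %.1f, utilization = %.0f%%%n",
                    n,
                    maxSpeedup(serialFraction, n),
                    100 * utilization(serialFraction, n));
        }
    }
}
```

As N grows, the (1 - F)/N term vanishes and the bound approaches 1/F, which is 10 for F = 0.10: the factor of ten that no number of processors ever reaches.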

Chapter 6 explored identifying logical boundaries for decomposing applications into tasks. But in order to predict what kind of speedup is possible from running your application on a multiprocessor system, you also need to identify the sources of serialization in your tasks.

Imagine an application in which several threads repeatedly fetch tasks from a shared work queue and process them; assume that tasks do not depend on the results or side effects of other tasks. Ignoring for a moment how the tasks get onto the queue, how well will this application scale as we add processors? At first glance, it may appear that the application is completely parallelizable: tasks do not wait for each other, and the more processors available, the more tasks can be processed concurrently. However, there is a serial component as well: fetching the task from the work queue. The work queue is shared by all the worker threads, and it will require some amount of synchronization to maintain its integrity in the face of concurrent access. If locking is used to guard the state of the queue, then while one thread is dequeuing a task, other threads that need to dequeue their next task must wait, and this is where task processing is serialized.
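
The worker arrangement described above can be sketched as follows. This is an illustration under stated assumptions rather than the book's own listing: the class names, the `runTasks` helper, and the choice of `LinkedBlockingQueue` (a lock-based queue from `java.util.concurrent`) are all assumptions made for the example.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

/** Sketch of workers sharing one queue; all names here are hypothetical. */
final class WorkerSketch {

    /** Repeatedly dequeues a task and runs it until the thread is interrupted. */
    static final class Worker extends Thread {
        private final BlockingQueue<Runnable> queue;

        Worker(BlockingQueue<Runnable> queue) {
            this.queue = queue;
        }

        @Override
        public void run() {
            while (true) {
                try {
                    // Serial component: every worker contends for the shared queue here.
                    Runnable task = queue.take();
                    // Parallel component: independent tasks run without waiting on each other.
                    task.run();
                } catch (InterruptedException e) {
                    return; // interruption is used as the shutdown signal
                }
            }
        }
    }

    /** Runs taskCount trivial tasks on workerCount workers; returns how many completed. */
    static int runTasks(int workerCount, int taskCount) {
        BlockingQueue<Runnable> queue = new LinkedBlockingQueue<>();
        AtomicInteger completed = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(taskCount);
        for (int i = 0; i < taskCount; i++) {
            queue.add(() -> {
                completed.incrementAndGet();
                done.countDown();
            });
        }
        Worker[] workers = new Worker[workerCount];
        for (int i = 0; i < workerCount; i++) {
            workers[i] = new Worker(queue);
            workers[i].start();
        }
        try {
            done.await(); // wait until every task has run
            for (Worker w : workers) w.interrupt();
            for (Worker w : workers) w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return completed.get();
    }
}
```

Calling `WorkerSketch.runTasks(4, 1000)` runs a thousand trivial tasks on four workers. With tasks this small, most of the elapsed time is likely to be spent in `queue.take()`, so adding workers would be expected to help little: the dequeue step is the serialized fraction.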