
ing aren't important; we're interested in characterizing the concurrency of various scheduling policies.

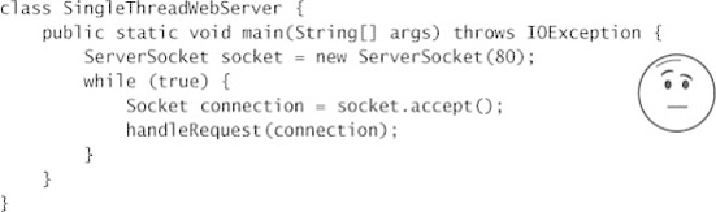

Listing 6.1. Sequential Web Server.

`SingleThreadedWebServer` is simple and theoretically correct, but would perform poorly in production because it can handle only one request at a time. The main thread alternates between accepting connections and processing the associated request. While the server is handling a request, new connections must wait until it finishes the current request and calls `accept` again. This might work if request processing were so fast that `handleRequest` effectively returned immediately, but this doesn't describe any web server in the real world.
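Since the listing survives only as an image, the following is a minimal sketch of the same accept-then-handle loop under stated assumptions. It is not a reproduction of Listing 6.1. The class `SequentialServer`, its `maxRequests` bound (the server described in the text loops forever), the ephemeral port, the one-line upper-casing `handleRequest`, and the `demo` helper are all hypothetical, chosen so that the example runs and terminates.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical sequential server in the spirit of Listing 6.1 (not the book's code).
class SequentialServer {
    private final ServerSocket socket;

    SequentialServer(ServerSocket socket) {
        this.socket = socket;
    }

    // Accepts and handles connections strictly one at a time. A real server
    // would loop forever; maxRequests exists only so the demo terminates.
    void serve(int maxRequests) throws IOException {
        for (int i = 0; i < maxRequests; i++) {
            // Until handleRequest returns, accept() is not called again, so
            // new connections wait in the OS backlog.
            try (Socket connection = socket.accept()) {
                handleRequest(connection);
            }
        }
    }

    // Hypothetical handler: reads one line (blocking socket I/O) and
    // replies with that line in upper case.
    static void handleRequest(Socket connection) throws IOException {
        BufferedReader in = new BufferedReader(new InputStreamReader(
                connection.getInputStream(), StandardCharsets.UTF_8));
        PrintWriter out = new PrintWriter(new OutputStreamWriter(
                connection.getOutputStream(), StandardCharsets.UTF_8), true);
        String line = in.readLine();
        out.println(line == null ? "" : line.toUpperCase(Locale.ROOT));
    }

    // Hypothetical demo: serves on an ephemeral port in a background thread
    // and sends each message over its own client connection.
    static List<String> demo(List<String> messages) {
        try (ServerSocket listener = new ServerSocket(0)) {
            SequentialServer server = new SequentialServer(listener);
            Thread serverThread = new Thread(() -> {
                try {
                    server.serve(messages.size());
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            serverThread.start();
            List<String> replies = new ArrayList<>();
            for (String message : messages) {
                try (Socket client = new Socket(InetAddress.getLoopbackAddress(), listener.getLocalPort());
                     BufferedReader in = new BufferedReader(new InputStreamReader(
                             client.getInputStream(), StandardCharsets.UTF_8));
                     PrintWriter out = new PrintWriter(new OutputStreamWriter(
                             client.getOutputStream(), StandardCharsets.UTF_8), true)) {
                    out.println(message);
                    replies.add(in.readLine());
                }
            }
            serverThread.join();
            return replies;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

Calling `SequentialServer.demo(List.of("get a", "get b"))` returns `[GET A, GET B]`. Because the demo's client waits for each reply before connecting again, it shows the shape of the loop rather than the queuing itself; with concurrent clients, every connection after the first would sit unaccepted until the current `handleRequest` call returned.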

Processing a web request involves a mix of computation and I/O. The server must perform socket I/O to read the request and write the response, which can block due to network congestion or connectivity problems. It may also perform file I/O or make database requests, which can also block. In a single-threaded server, blocking not only delays completion of the current request but also prevents pending requests from being processed at all. If one request blocks for an unusually long time, users might think the server is unavailable because it appears unresponsive. At the same time, resource utilization is poor, since the CPU sits idle while the single thread waits for its I/O to complete.
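The cost of blocking in a single thread can be made concrete with a little arithmetic. The sketch below is a hypothetical model, not taken from the text: it assumes all requests arrive together at time 0, are served in arrival order, and that each request's time splits cleanly into CPU time and blocking I/O time. The names `SequentialTimeline`, `completionTimes`, and `cpuUtilization` are illustrative.

```java
import java.util.Arrays;

// Hypothetical model of one thread serving queued requests in order.
final class SequentialTimeline {
    private SequentialTimeline() {}

    // Each request completes only after every request ahead of it, so its
    // completion time is the running sum of service times up to and including it.
    static long[] completionTimes(long[] serviceMillis) {
        long[] done = new long[serviceMillis.length];
        long clock = 0;
        for (int i = 0; i < serviceMillis.length; i++) {
            clock += serviceMillis[i];
            done[i] = clock;
        }
        return done;
    }

    // With a single thread the CPU is busy only during compute time and idle
    // during blocking I/O, so utilization = compute / (compute + io).
    // Assumes the total time is positive.
    static double cpuUtilization(long[] computeMillis, long[] ioMillis) {
        long compute = Arrays.stream(computeMillis).sum();
        long io = Arrays.stream(ioMillis).sum();
        return (double) compute / (compute + io);
    }
}
```

With service times of 10 ms, 5,000 ms, and 10 ms, `completionTimes` gives 10, 5,010, and 5,020 ms: the third request needs only 10 ms of work but finishes after more than five seconds because it queued behind the slow one. Likewise, two requests that each compute for 10 ms but block for 30 ms and 50 ms keep the CPU busy for only 20 of 100 ms, a utilization of 0.2.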

In server applications, sequential processing rarely provides either good throughput or good responsiveness. There are exceptions (such as when tasks are few and long-lived, or when the server serves a single client that makes only a single request at a time), but most server applications do not work this way.[1]