Information Technology Reference

In-Depth Information

with the dropout technique. Further, in order to check whether pre-training helps

to combat overfitting we trained two sets of networks, one with and one without

pre-training. In all cases, the weights were initialized with values sampled from a

uniform distribution in the range

"

s

s

#

6

N

in

N

out

; C4

6

N

in

N

out

4

;

(19.26)

where

N

in

is the number of input units and

N

out

the number of output units.

The networks were trained on the training set using standard SGD with momen-

tum and early stopping determined on the development set. As the cost function

we used cross entropy (CE) for the classification task and the mean squared error

(MSE) for the regression task. As soon as the cost function started to raise on the

development set the training was stopped.

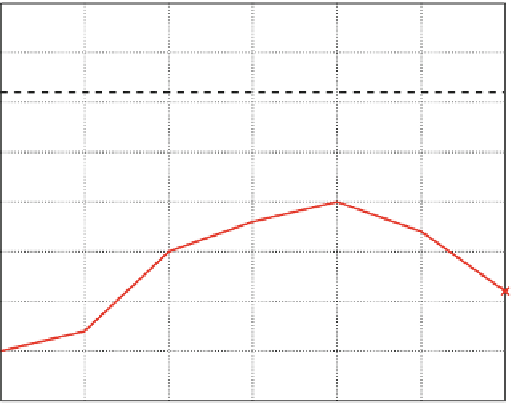

Figure

19.5

shows the best test set results obtained on the regression task for

different network sizes.

Several conclusions can be drawn from this figure: first, the ReLu networks out-

perform the sigmoid networks for all hidden layer sizes, except for small networks

with hidden unit sizes

< 512

. This might be due to the strong regularization effect

of dropout that surmounts the regularization effect of pre-training. Second, pre-

training helps in all cases, regardless of whether sigmoid units or rectified linear

83.5

baseline

relu & dropout (no pretrain)

relu & dropout (pretrain)

sigmoid (pretrain)

sigmoid (no pretrain)

83

82.5

82

81.5

81

80.5

80

79.5

128

256

512

1024

2048

3072

4096

# hidden units

Fig. 19.5

Regression task: test set results based on the baseline feature set I for a one-hidden layer

MLP for varying hidden layer sizes. Shown are the graphs for networks trained with rectified linear

units and dropout vs. sigmoid hidden units and with vs. without pre-training the networks

Search WWH ::

Custom Search