3.5.2 Transformation by principle of uncertainty invariance

The principle of uncertainty invariance, which is based on measures of uncertainty in various theories, provides some basis for establishing meaningful relationships between uncertainty theories. In particular, the transformation from one theory, say $T_1$, to another, say $T_2$, under the principle of uncertainty invariance requires that (Klir and Yuan, 1995; Klir and Wierman, 1998):

1. The amount of uncertainty associated with a given piece of information or a given situation be preserved when we move from $T_1$ to $T_2$.

2. The degree of belief in $T_1$ be converted to its counterparts in $T_2$ by an appropriate scale.

Requirement 1 guarantees that no uncertainty is added or eliminated solely by changing the mathematical theory by which a particular phenomenon is formalised. The amount of uncertainty measured before and after the conversion, each in terms of its respective theory, must therefore remain equal. Requirement 2 guarantees that certain properties considered essential in a given context (such as ordering or proportionality of relevant values) are preserved by the transformation.

The transformation under discussion here is from probability (defined by a PDF) to possibility (defined by a MF as a possibility distribution) and vice versa. The expressions for the uncertainty measures in the probability and possibility theories are given below. Detailed coverage of these measures is beyond the scope of this thesis. Readers are referred to, e.g., Klir and Yuan (1995), Klir and Wierman (1998) and Ayyub (2001) for more coverage of this topic.

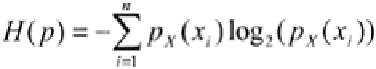

Uncertainty measures in probability theory

When uncertainty is formalised in terms of probability theory, the only applicable measure of uncertainty is the Shannon entropy measure (Klir, 1992). The Shannon entropy measure, given by Shannon (1948), measures the uncertainty due to conflict that arises from the probability mass function of a body of information (Ayyub, 2001). This uncertainty is also referred to as strife or discord. More specifically, it measures the average uncertainty (in bits) associated with the prediction of outcomes in a random experiment. The Shannon entropy, represented by $H(p)$, is given by

$$H(p) = -\sum_{x \in X} p_X(x) \log_2 p_X(x) \qquad (3.40)$$

As can be observed from the $H(p)$ function, its range is from 0 (when $p_X(x) = 1$ for a single $x \in X$) to $\log_2 |X|$ (for the uniform probability distribution with $p_X(x) = 1/|X|$ for all $x \in X$).
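The range stated above can be checked numerically. The following is a minimal sketch, not part of the original text: the function name `shannon_entropy` and the three example distributions are illustrative assumptions, and the entropy is computed in bits for a finite set $X$ of four outcomes.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy (in bits) of a discrete probability distribution.

    `probabilities` is a sequence of p_X(x) values, one per outcome x in X.
    The name and interface are illustrative, not taken from the source text.
    """
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probabilities), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # -p*log2(p) is written as p*log2(1/p); outcomes with p = 0 are skipped
    # because p*log2(1/p) tends to 0 as p tends to 0.
    return sum(p * math.log2(1.0 / p) for p in probabilities if p > 0)


# Hypothetical distributions over |X| = 4 outcomes.
certain = [1.0, 0.0, 0.0, 0.0]   # one outcome is certain: lower bound
uniform = [0.25] * 4             # uniform distribution: upper bound log2|X|
skewed = [0.7, 0.1, 0.1, 0.1]    # anything else lies strictly in between

for name, dist in [("certain", certain), ("uniform", uniform), ("skewed", skewed)]:
    print(f"{name}: H = {shannon_entropy(dist):.4f} bits")
```

The certain case gives the lower bound of 0, the uniform case gives the upper bound $\log_2 4 = 2$ bits, and the skewed distribution falls between the two.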

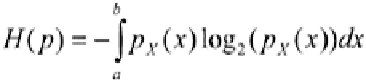

For a continuous random variable, the Shannon entropy can be extended to

$$H(p) = -\int_X p_X(x) \log_2 p_X(x) \, dx \qquad (3.41)$$
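As an illustration of the continuous case, the sketch below approximates the integral $-\int p_X(x) \log_2 p_X(x)\,dx$ with a simple midpoint rule and compares it with the known closed forms for a uniform and a normal density. The helper names (`differential_entropy`, `uniform_pdf`, `normal_pdf`), the integration limits and the step count are assumptions made for this example, not part of the original text.

```python
import math


def differential_entropy(pdf, lower, upper, steps=100_000):
    """Approximate -integral of p(x)*log2 p(x) dx on [lower, upper].

    Uses the midpoint rule; `pdf` is any callable returning the density.
    Illustrative helper, not taken from the source text.
    """
    width = (upper - lower) / steps
    total = 0.0
    for i in range(steps):
        p = pdf(lower + (i + 0.5) * width)
        if p > 0:  # regions of zero density contribute nothing
            total -= p * math.log2(p) * width
    return total


def uniform_pdf(a, b):
    """Density of the uniform distribution on [a, b]."""
    return lambda x: 1.0 / (b - a) if a <= x <= b else 0.0


def normal_pdf(mu, sigma):
    """Density of the normal distribution with mean mu and std. dev. sigma."""
    return lambda x: math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (
        sigma * math.sqrt(2.0 * math.pi)
    )


# Uniform on [0, 4]: closed form is log2(4 - 0) = 2 bits.
h_uniform = differential_entropy(uniform_pdf(0.0, 4.0), 0.0, 4.0)

# Standard normal, truncated to [-10, 10]: closed form is 0.5*log2(2*pi*e).
h_normal = differential_entropy(normal_pdf(0.0, 1.0), -10.0, 10.0)

# Uniform on [0, 0.5]: closed form is log2(0.5) = -1 bit.
h_narrow = differential_entropy(uniform_pdf(0.0, 0.5), 0.0, 0.5)

print(h_uniform, h_normal, h_narrow)
```

The last case shows a difference from the discrete measure: for a continuous variable the integral can be negative, so the lower bound of 0 that holds for a finite set $X$ does not carry over.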
