Image Processing Reference


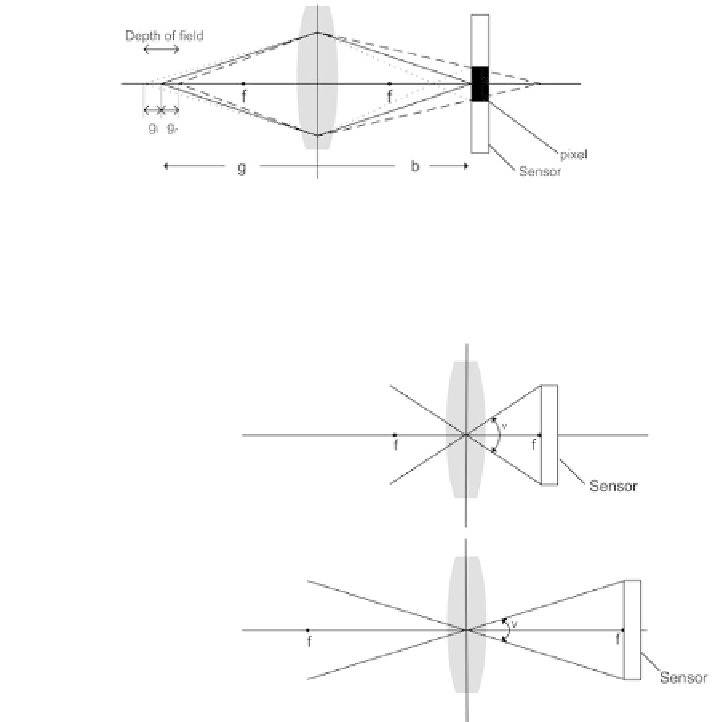

Fig. 2.9 Depth-of-field. The solid lines illustrate two light rays from an object (a point) on the optical axis and their paths through the lens and to the sensor, where they intersect within the same pixel (illustrated as a black rectangle). The dashed and dotted lines illustrate light rays from two other objects (points) on the optical axis. These objects are characterized by being the most extreme locations where the light rays still enter the same pixel.
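The geometry in Fig. 2.9 can be turned into numbers with the thin-lens equation, 1/f = 1/u + 1/v. The Python sketch below assumes an ideal thin lens, a circular aperture of diameter `aperture` located at the lens, and treats a point as sharp as long as its blur circle on the sensor is no wider than one pixel. The function names and the example values (5 mm focal length, 2.5 mm aperture, 5 µm pixels, focus at 1 m) are hypothetical.

```python
import math


def image_distance(u: float, f: float) -> float:
    """Thin-lens equation 1/f = 1/u + 1/v solved for the image distance v (mm)."""
    return u * f / (u - f)


def object_distance(v: float, f: float) -> float:
    """Inverse of image_distance: the object distance u that is imaged at v (mm)."""
    return v * f / (v - f)


def blur_diameter(u: float, g: float, f: float, aperture: float) -> float:
    """Width on the sensor of the light cone from a point at distance u,
    when the sensor sits where objects at distance g are in focus."""
    b = image_distance(g, f)  # lens-to-sensor distance
    v = image_distance(u, f)  # where the rays from u actually meet
    return aperture * abs(v - b) / v  # similar triangles


def depth_of_field(g: float, f: float, aperture: float, pixel: float):
    """Nearest and farthest object distances whose blur still fits inside one pixel."""
    b = image_distance(g, f)
    if pixel >= aperture:
        # The blur can never exceed the aperture width, so every object beyond f is sharp.
        near = f
    else:
        # Near point: its rays meet behind the sensor.
        near = object_distance(b * aperture / (aperture - pixel), f)
    # Far point: its rays meet in front of the sensor.
    v_far = b * aperture / (aperture + pixel)
    # Objects at infinity are imaged at v = f; if v_far <= f, nothing is too far.
    far = object_distance(v_far, f) if v_far > f else math.inf
    return near, far


near, far = depth_of_field(g=1000.0, f=5.0, aperture=2.5, pixel=0.005)
print(f"sharp from {near:.0f} mm to {far:.0f} mm")
```

Halving `aperture` to 1.25 mm widens the sharp range on both sides, and once the aperture is no wider than a pixel the range runs from f to infinity, matching the extreme case of an aperture that only passes rays through the optical center.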

Fig. 2.10 The field-of-view of two cameras with different focal lengths. The field-of-view is an angle, V, which represents the part of the world observable to the camera. As the focal length increases, so does the distance from the lens to the sensor. This in turn results in a smaller field-of-view. Note that both a horizontal field-of-view and a vertical field-of-view exist. If the sensor has equal height and width, these two fields-of-view are the same; otherwise they are different.

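The horizontal and vertical fields-of-view are calculated as

$$\mathrm{FOV}_x = 2 \cdot \tan^{-1}\left(\frac{\mathrm{width}/2}{f}\right), \qquad \mathrm{FOV}_y = 2 \cdot \tan^{-1}\left(\frac{\mathrm{height}/2}{f}\right) \tag{2.5}$$

where the focal length, f, and the width and height of the sensor are measured in mm. So, if we have a physical sensor with width = 14 mm, height = 10 mm and a focal length f = 5 mm, then the fields-of-view will be

$$\mathrm{FOV}_x = 2 \cdot \tan^{-1}\left(\frac{7}{5}\right) = 108.9^\circ, \qquad \mathrm{FOV}_y = 2 \cdot \tan^{-1}(1) = 90^\circ$$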
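Equation (2.5) is easy to check numerically. The following Python sketch reproduces the worked example; the function name `field_of_view` is hypothetical, and the sensor dimension and focal length are both assumed to be given in mm.

```python
import math


def field_of_view(sensor_mm: float, focal_mm: float) -> float:
    """Field-of-view in degrees along one sensor axis, following Eq. (2.5)."""
    return 2 * math.degrees(math.atan((sensor_mm / 2) / focal_mm))


fov_x = field_of_view(14, 5)  # horizontal: sensor width = 14 mm
fov_y = field_of_view(10, 5)  # vertical: sensor height = 10 mm
print(f"FOV_x = {fov_x:.1f} deg, FOV_y = {fov_y:.1f} deg")  # FOV_x = 108.9 deg, FOV_y = 90.0 deg
```

Doubling the focal length to 10 mm with the same sensor gives `field_of_view(14, 10)` of about 70°, which illustrates the behaviour shown in Fig. 2.10: a longer focal length yields a smaller field-of-view.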

Another parameter influencing the depth-of-field is the aperture. The aperture corresponds to the human iris, which controls the amount of light entering the human eye. Similarly, the aperture is a flat circular object with a hole in the center whose radius can be adjusted. It is located in front of the lens and is used to control the amount of incoming light. In the extreme case, the aperture only allows rays through the optical center, resulting in an infinite depth-of-field. The downside is that the more light the aperture blocks, the lower the shutter speed (explained below), i.e., the longer the exposure time, must be in order to collect enough light to create an image. Consequently, objects in motion can appear blurred in the image.
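The trade-off between aperture and shutter speed can be made concrete with a simplified exposure model. The sketch below assumes that the light collected is proportional to the open aperture area (π·r²) times the exposure time and that the scene brightness stays constant; the function names, the 1/500 s reference exposure and the object speed of 200 pixels per second are hypothetical.

```python
def required_exposure(t_ref: float, r_ref: float, r_new: float) -> float:
    """Exposure time (s) that collects as much light through an aperture of
    radius r_new as t_ref did through radius r_ref.

    Simplified model: collected light is proportional to the open area
    (pi * r**2) times the exposure time, with constant scene brightness.
    """
    return t_ref * (r_ref / r_new) ** 2


def motion_blur_px(speed_px_per_s: float, exposure_s: float) -> float:
    """Length of the smear (in pixels) left by an object moving across the image."""
    return speed_px_per_s * exposure_s


t_ref = 1 / 500  # hypothetical: 1/500 s suffices with a 2 mm aperture radius
speed = 200.0    # hypothetical object speed in the image, pixels per second
for r in (2.0, 1.0, 0.5):
    t = required_exposure(t_ref, 2.0, r)
    print(f"radius {r} mm: exposure {t * 1000:.0f} ms, blur {motion_blur_px(speed, t):.1f} px")
```

Each halving of the radius quarters the open area, so under this model the exposure time, and with it the length of the motion blur, grows by a factor of four.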