Hardware Reference

In-Depth Information

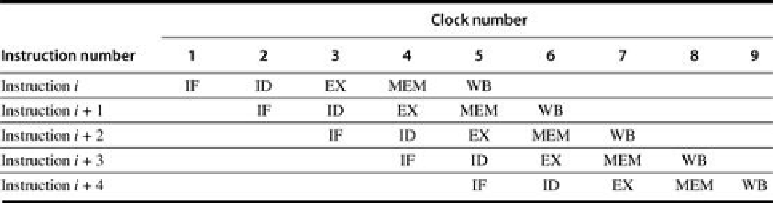

FIGURE C.1

Simple RISC pipeline

. On each clock cycle, another instruction is fetched and

begins its five-cycle execution. If an instruction is started every clock cycle, the performance

will be up to five times that of a processor that is not pipelined. The names for the stages in

the pipeline are the same as those used for the cycles in the unpipelined implementation: IF =

instruction fetch, ID = instruction decode, EX = execution, MEM = memory access, and WB =

write-back.

You may find it hard to believe that pipelining is as simple as this; it's not. In this and the fol-

lowing sections, we will make our RISC pipeline “real” by dealing with problems that pipelin-

ing introduces.

To start with, we have to determine what happens on every clock cycle of the processor and

make sure we don't try to perform two different operations with the same data path resource

on the same clock cycle. For example, a single ALU cannot be asked to compute an efective

address and perform a subtract operation at the same time. Thus, we must ensure that the

overlap of instructions in the pipeline cannot cause such a conflict. Fortunately, the simpli-

city of a RISC instruction set makes resource evaluation relatively easy.

Figure C.2

shows a

simpliied version of a RISC data path drawn in pipeline fashion. As you can see, the major

functional units are used in different cycles, and hence overlapping the execution of multiple

instructions introduces relatively few conflicts. There are three observations on which this fact

rests.

Search WWH ::

Custom Search