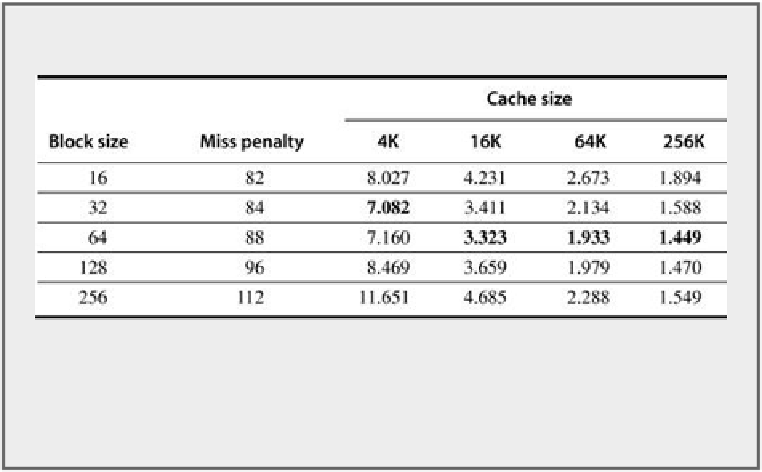

FIGURE B.12 Average memory access time versus block size for five different-sized caches in Figure B.10. Block sizes of 32 and 64 bytes dominate. The smallest average time per cache size is boldfaced.

As with all of these techniques, the cache designer is trying to minimize both the miss rate and the miss penalty. The selection of block size depends on both the latency and bandwidth of the lower-level memory. High latency and high bandwidth encourage large block sizes, since the cache gets many more bytes per miss for a small increase in miss penalty. Conversely, low latency and low bandwidth encourage smaller block sizes, since there is little time saved from a larger block. For example, twice the miss penalty of a small block may be close to the penalty of a block twice the size. The larger number of small blocks may also reduce conflict misses.
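The latency and bandwidth trade-off above can be made concrete with a minimal sketch. It assumes a simple model in which the miss penalty is a fixed access latency plus the block transfer time. The function name `miss_penalty` and both sets of memory parameters are hypothetical values chosen for illustration, not figures from the text.

```python
def miss_penalty(block_bytes, latency_cycles, bytes_per_cycle):
    """Cycles to fetch one block: fixed latency plus transfer time (assumed model)."""
    return latency_cycles + block_bytes / bytes_per_cycle


# Hypothetical lower-level memories (illustrative numbers only).
high = dict(latency_cycles=80, bytes_per_cycle=8)  # high latency, high bandwidth
low = dict(latency_cycles=2, bytes_per_cycle=1)    # low latency, low bandwidth

for name, mem in (("high latency/bandwidth", high), ("low latency/bandwidth", low)):
    small = miss_penalty(16, **mem)
    large = miss_penalty(32, **mem)
    print(f"{name}: 16B={small:.0f} cycles, 32B={large:.0f} cycles, "
          f"two 16B misses={2 * small:.0f} cycles")
```

Under these assumed parameters, doubling the block from 16 to 32 bytes raises the penalty only from 82 to 84 cycles in the high-latency case, so the larger block is nearly free. In the low-latency case the 32-byte penalty (34 cycles) is close to the cost of two 16-byte misses (36 cycles), so the larger block saves little time.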

Note that Figures B.10 and B.12 show the difference between selecting a block size based on minimizing miss rate versus minimizing average memory access time.
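To illustrate how the two selection criteria can disagree, the following sketch computes average memory access time as hit time plus miss rate times miss penalty for several block sizes. The miss rates, hit time, latency, and bandwidth are all hypothetical values invented for this example; they are not the data of Figures B.10 and B.12.

```python
HIT_TIME = 1           # cycles (assumed)
LATENCY = 80           # cycles of fixed miss overhead (assumed)
BYTES_PER_CYCLE = 8    # transfer bandwidth (assumed)

# Hypothetical miss rates per block size in bytes (illustrative only).
MISS_RATES = {16: 0.040, 32: 0.029, 64: 0.021, 128: 0.018, 256: 0.017}


def amat(block_bytes):
    """Average memory access time = hit time + miss rate * miss penalty."""
    penalty = LATENCY + block_bytes / BYTES_PER_CYCLE
    return HIT_TIME + MISS_RATES[block_bytes] * penalty


best_by_miss_rate = min(MISS_RATES, key=MISS_RATES.get)
best_by_amat = min(MISS_RATES, key=amat)

for size in MISS_RATES:
    print(f"{size:>3}B  miss rate={MISS_RATES[size]:.3f}  AMAT={amat(size):.3f} cycles")
print("lowest miss rate:", best_by_miss_rate, "bytes")
print("lowest AMAT:     ", best_by_amat, "bytes")
```

With these assumed numbers, the 256-byte block has the lowest miss rate, but its longer transfer time makes the 128-byte block the better choice by average memory access time.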

Having seen the positive and negative impact of larger block size on compulsory and capacity misses, we look in the next two subsections at the potential of higher capacity and higher associativity.

Second Optimization: Larger Caches To Reduce Miss Rate

The obvious way to reduce capacity misses in Figures B.8 and B.9 is to increase the capacity of the cache. The obvious drawback is potentially longer hit time and higher cost and power. This technique has been especially popular in off-chip caches.

Third Optimization: Higher Associativity To Reduce Miss Rate

Figures B.8 and B.9 show how miss rates improve with higher associativity. There are two general rules of thumb that can be gleaned from these figures. The first is that eight-way set associative is, for practical purposes, as effective in reducing misses for caches of these sizes as fully associative. You can see the difference by comparing the eight-way entries to the capacity miss column in Figure B.8, since capacity misses are calculated using fully associative caches.
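The effect of associativity on conflict misses can be sketched with a tiny cache simulator. This is an illustrative model assuming LRU replacement and block-granularity addresses; the function `count_misses` and the synthetic trace are hypothetical and are not how the data in Figures B.8 and B.9 were produced.

```python
from collections import OrderedDict


def count_misses(trace, num_blocks, ways):
    """Count misses for an LRU set-associative cache holding num_blocks blocks."""
    num_sets = num_blocks // ways
    sets = [OrderedDict() for _ in range(num_sets)]
    misses = 0
    for block_addr in trace:
        cache_set = sets[block_addr % num_sets]
        if block_addr in cache_set:
            cache_set.move_to_end(block_addr)   # mark as most recently used
        else:
            misses += 1
            if len(cache_set) == ways:
                cache_set.popitem(last=False)   # evict least recently used
            cache_set[block_addr] = True
    return misses


# Synthetic trace: two blocks that collide in a direct-mapped 8-block cache.
trace = [0, 8] * 10

for ways in (1, 2, 8):  # direct mapped, two-way, fully associative
    print(f"{ways}-way: {count_misses(trace, num_blocks=8, ways=ways)} misses")
```

In this contrived trace, the direct-mapped cache misses on every access because the two blocks keep evicting each other, while the two-way and fully associative configurations incur only the two compulsory misses. The fully associative result is the baseline against which conflict misses are measured.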
