Hardware Reference


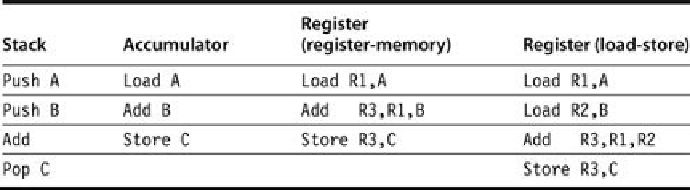

FIGURE A.2 The code sequence for C = A + B for four classes of instruction sets. Note that the Add instruction has implicit operands for stack and accumulator architectures and explicit operands for register architectures. It is assumed that A, B, and C all belong in memory in each class of architecture.

As the figures show, there are really two classes of register computers. One class, called register-memory architecture, can access memory as part of any instruction; the other, called load-store architecture, can access memory only with load and store instructions. A third class, not found in computers shipping today, keeps all operands in memory and is called a memory-memory architecture. Some instruction set architectures have more registers than a single accumulator but place restrictions on the uses of these special registers. Such an architecture is sometimes called an extended accumulator or special-purpose register computer.
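The four code sequences for C = A + B that the caption of Figure A.2 refers to can be sketched as a small simulation. The figure itself is not reproduced in the text, so the mnemonics in the comments follow the usual textbook form and should be read as an assumption; the function names and the dictionary-based memory model are hypothetical and purely illustrative.

```python
# Illustrative sketch only: each function mimics one class of instruction set.
# `mem` is a hypothetical memory holding the variables A, B, and C.

def run_stack(mem):
    stack = []
    stack.append(mem["A"])                   # Push A
    stack.append(mem["B"])                   # Push B
    stack.append(stack.pop() + stack.pop())  # Add  (implicit operands: top two stack entries)
    mem["C"] = stack.pop()                   # Pop C
    return mem

def run_accumulator(mem):
    acc = mem["A"]        # Load A
    acc = acc + mem["B"]  # Add B  (the accumulator is the implicit operand)
    mem["C"] = acc        # Store C
    return mem

def run_register_memory(mem):
    regs = {}
    regs["R1"] = mem["A"]               # Load R1, A
    regs["R3"] = regs["R1"] + mem["B"]  # Add R3, R1, B  (one operand read from memory)
    mem["C"] = regs["R3"]               # Store R3, C
    return mem

def run_load_store(mem):
    regs = {}
    regs["R1"] = mem["A"]                  # Load R1, A
    regs["R2"] = mem["B"]                  # Load R2, B
    regs["R3"] = regs["R1"] + regs["R2"]   # Add R3, R1, R2  (register operands only)
    mem["C"] = regs["R3"]                  # Store R3, C
    return mem

for run in (run_stack, run_accumulator, run_register_memory, run_load_store):
    memory = {"A": 2, "B": 3, "C": 0}
    print(run.__name__, run(memory)["C"])
```

All four variants store the same result in C; they differ only in where the operands of the add are named. The load-store version needs the most instructions because memory is touched only by explicit loads and stores.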

Although most early computers used stack or accumulator-style architectures, virtually every new architecture designed after 1980 uses a load-store register architecture. The major reasons for the emergence of general-purpose register (GPR) computers are twofold. First, registers, like other forms of storage internal to the processor, are faster than memory. Second, registers are more efficient for a compiler to use than other forms of internal storage. For example, on a register computer the expression (A * B) - (B * C) - (A * D) may be evaluated by doing the multiplications in any order, which may be more efficient because of the location of the operands. On a stack computer, by contrast, the hardware must evaluate the expression in only one order, since operands are hidden on the stack, and it may have to load an operand multiple times.
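The point about repeated loads can be made concrete with a rough model that counts memory reads for (A * B) - (B * C) - (A * D). This is a minimal sketch under stated assumptions: the stack machine has only push, multiply, and subtract (no duplicate or swap operations), the register machine has at least four free registers, and the names `stack_eval` and `register_eval` are hypothetical.

```python
# Illustrative model: count how often memory is read in each style of machine.

def stack_eval(mem):
    """Evaluate (A*B) - (B*C) - (A*D) on a simple stack machine.

    Returns (value, number_of_memory_loads)."""
    program = [
        ("push", "A"), ("push", "B"), ("mul",),
        ("push", "B"), ("push", "C"), ("mul",), ("sub",),
        ("push", "A"), ("push", "D"), ("mul",), ("sub",),
    ]
    stack, loads = [], 0
    for op, *arg in program:
        if op == "push":
            stack.append(mem[arg[0]])  # every push reads memory
            loads += 1
        else:
            right, left = stack.pop(), stack.pop()
            stack.append(left * right if op == "mul" else left - right)
    return stack.pop(), loads

def register_eval(mem):
    """Evaluate the same expression with each variable loaded into a register once.

    Returns (value, number_of_memory_loads)."""
    regs = {name: mem[name] for name in "ABCD"}  # one load per variable
    loads = len(regs)
    # The three products may be formed in any order once operands are in registers.
    ad = regs["A"] * regs["D"]
    bc = regs["B"] * regs["C"]
    ab = regs["A"] * regs["B"]
    return ab - bc - ad, loads

memory = {"A": 2, "B": 3, "C": 4, "D": 5}
print(stack_eval(memory))     # value and load count for the stack machine
print(register_eval(memory))  # value and load count for the register machine
```

In this model the stack machine reads A and B from memory twice each, for six loads in total, while the register version reads each of the four variables once.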

More importantly, registers can be used to hold variables. When variables are allocated to registers, memory traffic is reduced, the program speeds up (since registers are faster than memory), and code density improves (since a register can be named with fewer bits than a memory location).
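The code-density argument is simple arithmetic, which the following sketch works through. The figures used here (32 registers, a 32-bit byte-addressed memory, three operands per instruction) are assumptions chosen for illustration and are not taken from the text; `bits_to_name` is a hypothetical helper.

```python
# Illustrative arithmetic: how many bits it takes to name an operand.

def bits_to_name(count):
    """Minimum number of bits needed to distinguish `count` distinct items."""
    return max(1, (count - 1).bit_length())

register_bits = bits_to_name(32)      # assumed register file of 32 registers
address_bits = bits_to_name(2 ** 32)  # assumed 32-bit byte address space

operands = 3  # assumed three-operand instruction, e.g. Add R3, R1, R2
print("bits per register name:", register_bits)
print("bits per memory address:", address_bits)
print("operand bits, all registers:", operands * register_bits)
print("operand bits, all memory:   ", operands * address_bits)
```

Under these assumptions a register name takes 5 bits and a full memory address takes 32, so an instruction naming three registers spends 15 bits on operands where three memory addresses would need 96.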

As explained in Section A.8, compiler writers would prefer that all registers be equivalent and unreserved. Older computers compromise this desire by dedicating registers to special uses, effectively decreasing the number of general-purpose registers. If the number of truly general-purpose registers is too small, trying to allocate variables to registers will not be profitable. Instead, the compiler will reserve all the uncommitted registers for use in expression evaluation.

How many registers are sufficient? The answer, of course, depends on the effectiveness of the compiler. Most compilers reserve some registers for expression evaluation, use some for parameter passing, and allow the remainder to be allocated to hold variables. Modern compiler technology and its ability to use larger numbers of registers effectively have led to an increase in register counts in more recent architectures.
