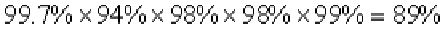

5. The connectors, breakers, and electrical wiring to the server have a collective efficiency of 99%.

WSCs outside North America use different conversion values, but the overall design is similar.

Putting it all together, the efficiency of turning 115,000-volt power from the utility into 208-volt power that servers can use is 89%, the product of the efficiencies of the individual conversion steps.

This overall efficiency leaves only a little over 10% room for improvement, but as we shall see, engineers still try to make it better.
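Because the conversion steps are in series, the overall efficiency is the product of the step efficiencies, and the fraction lost is one minus that product. The following is a minimal sketch under the assumption that each step can be described by one constant efficiency. The function names are illustrative and do not come from the text. The usage applies the section's 89% overall figure to a hypothetical 1 MW utility feed.

```python
from math import prod

def chain_efficiency(step_efficiencies):
    """Overall efficiency of conversion steps in series: the product of each step's efficiency."""
    for e in step_efficiencies:
        if not 0.0 < e <= 1.0:
            raise ValueError(f"efficiency must be in (0, 1], got {e}")
    return prod(step_efficiencies)

def delivered_power(utility_kw, overall_efficiency):
    """Split utility power (kW) into what reaches the servers and what is lost on the way."""
    delivered = utility_kw * overall_efficiency
    return delivered, utility_kw - delivered

# Hypothetical 1 MW utility feed at the 89% overall efficiency quoted above.
delivered_kw, lost_kw = delivered_power(1000.0, 0.89)
print(f"delivered {delivered_kw:.0f} kW, lost {lost_kw:.0f} kW")  # delivered 890 kW, lost 110 kW
```

The 110 kW lost per megawatt corresponds to the "little over 10%" headroom mentioned above.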

There is considerably more opportunity for improvement in the cooling infrastructure. The computer room air-conditioning (CRAC) unit cools the air in the server room using chilled water, similar to how a refrigerator removes heat by releasing it outside of the refrigerator. A liquid absorbs heat as it evaporates and releases heat as it condenses. Air conditioners pump the liquid into coils under low pressure so that it evaporates and absorbs heat, which is then carried to an external condenser where it is released. Thus, in a CRAC unit, fans push warm air past a set of coils filled with cold water, and a pump moves the warmed water to the external chillers to be cooled down. The cool air for servers is typically between 64°F and 71°F (18°C and 22°C).
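The heat that the chilled-water loop carries from the CRAC coils to the chillers follows from the water's mass flow rate and its temperature rise, Q = ṁ · c_p · ΔT. The following is a minimal sketch. It assumes steady flow and takes the specific heat of liquid water as about 4.186 kJ/(kg·K). The flow rate and temperatures used in the usage line are hypothetical.

```python
WATER_CP_KJ_PER_KG_K = 4.186  # approximate specific heat of liquid water

def heat_removed_kw(flow_kg_per_s, water_in_c, water_out_c):
    """Heat (kW, i.e. kJ/s) absorbed by water warming from water_in_c to water_out_c."""
    delta_t = water_out_c - water_in_c
    if delta_t < 0:
        raise ValueError("water leaving the coil should be warmer than water entering it")
    return flow_kg_per_s * WATER_CP_KJ_PER_KG_K * delta_t

# Hypothetical loop: 10 kg/s of water warmed from 10 °C to 16 °C in the coils.
print(round(heat_removed_kw(10.0, 10.0, 16.0), 1))  # 251.2
```

To move a given amount of heat, you can either pump more water or let the water warm more in the coils. The second option trades pump energy for a larger temperature swing.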

Figure 6.10 shows the large collection of fans and water pumps that move air and water throughout the system.

Clearly, one of the simplest ways to improve energy efficiency is to run the IT equipment at higher temperatures so that the air need not be cooled as much. Some WSCs run their equipment considerably above 71°F (22°C).

In addition to chillers, cooling towers are used in some datacenters to leverage the colder outside air to cool the water before it is sent to the chillers. The temperature that matters is called the wet-bulb temperature. The wet-bulb temperature is measured by blowing air on the bulb end of a thermometer that has water on it. It is the lowest temperature that can be achieved by evaporating water with air.
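You can estimate wet-bulb temperature from the ordinary (dry-bulb) air temperature and the relative humidity. The following sketch uses Stull's (2011) empirical fit. This fit does not come from the text. It is roughly accurate to within ±1 °C for relative humidity between about 5% and 99% and for temperatures between about −20 °C and 50 °C at sea-level pressure. Treat it as an approximation rather than a full psychrometric calculation.

```python
import math

def wet_bulb_c(dry_bulb_c, rh_percent):
    """Approximate wet-bulb temperature (°C) using Stull's 2011 empirical formula.

    Roughly valid for 5% <= RH <= 99% and -20 °C <= T <= 50 °C at sea-level pressure.
    """
    t, rh = dry_bulb_c, rh_percent
    return (t * math.atan(0.151977 * math.sqrt(rh + 8.313659))
            + math.atan(t + rh)
            - math.atan(rh - 1.676331)
            + 0.00391838 * rh ** 1.5 * math.atan(0.023101 * rh)
            - 4.686035)

# Half-saturated air at 20 °C can evaporatively cool water well below 20 °C.
print(round(wet_bulb_c(20.0, 50.0), 1))  # 13.7
```

The drier the outside air, the further the wet-bulb temperature falls below the dry-bulb temperature. Dry air therefore gives a cooling tower more room to work.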

Warm water flows over a large surface in the tower, transferring heat to the outside air via evaporation and thereby cooling the water. This technique is called airside economization. An alternative is to use cold water instead of cold air. Google's WSC in Belgium uses a water-to-water intercooler that takes cold water from an industrial canal to chill the warm water from inside the WSC.
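Evaporation cannot cool water below the outside wet-bulb temperature, so the amount of work a cooling tower can take off the chillers depends on the weather. The following is a minimal sketch. It assumes that the tower's outlet water reaches a fixed "approach" above the wet-bulb temperature and that the chillers remove whatever cooling remains. The 4 °C default approach and all the temperatures below are hypothetical values chosen for illustration.

```python
def tower_share(return_water_c, supply_target_c, wet_bulb_c, approach_c=4.0):
    """Fraction (0 to 1) of the required water cooling that the tower can provide.

    approach_c is a hypothetical default: how close the tower outlet gets to wet-bulb.
    """
    if return_water_c <= supply_target_c:
        raise ValueError("return water must be warmer than the supply target")
    # The tower cannot cool below wet-bulb + approach, there is no need to go below
    # the supply target, and the tower never warms the water.
    tower_outlet_c = min(return_water_c, max(supply_target_c, wet_bulb_c + approach_c))
    return (return_water_c - tower_outlet_c) / (return_water_c - supply_target_c)

# Hypothetical loop: water returns at 24 °C and must be supplied at 14 °C.
print(tower_share(24.0, 14.0, wet_bulb_c=8.0))   # 1.0: tower alone suffices
print(tower_share(24.0, 14.0, wet_bulb_c=16.0))  # 0.4: chillers remove the rest
print(tower_share(24.0, 14.0, wet_bulb_c=22.0))  # 0.0: too warm and humid to help
```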

Airflow is carefully planned for the IT equipment itself, with some designs even using airflow simulators. Efficient designs preserve the temperature of the cool air by reducing the chances of it mixing with hot air. For example, a WSC can have alternating aisles of hot air and cold air by orienting servers in opposite directions in alternating rows of racks so that hot exhaust blows in alternating directions.
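Mixing matters because air arriving at a server inlet ends up at roughly the flow-weighted average of the cold supply air and any hot exhaust it picks up. The following is a minimal sketch. It assumes perfect mixing and the same specific heat for both air streams. The recirculation fraction and the temperatures are hypothetical.

```python
def inlet_temp_c(cold_supply_c, hot_exhaust_c, recirculated_fraction):
    """Server inlet temperature when a fraction of hot exhaust mixes into the cold supply air."""
    if not 0.0 <= recirculated_fraction <= 1.0:
        raise ValueError("recirculated_fraction must be between 0 and 1")
    f = recirculated_fraction
    return (1.0 - f) * cold_supply_c + f * hot_exhaust_c

# Hypothetical: 20 °C supply air, 35 °C exhaust, 20% of inlet air is recirculated exhaust.
print(round(inlet_temp_c(20.0, 35.0, 0.2), 1))  # 23.0
```

In this example, 20% recirculation warms the inlet by 3 °C. To compensate, the cooling plant would have to supply colder air, which is the waste that separate hot and cold aisles aim to avoid.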

In addition to energy losses, the cooling system also uses up a lot of water due to evaporation or to spills down sewer lines. For example, an 8 MW facility might use 70,000 to 200,000 gallons of water per day.
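Normalizing the water figures by energy makes it easier to compare facilities of different sizes. The following sketch assumes that the 8 MW facility draws its full 8 MW around the clock. If the facility runs below capacity, this overstates the energy used and therefore understates the water used per MWh.

```python
GALLONS_TO_LITERS = 3.785411784  # US liquid gallon

def water_per_mwh(gallons_per_day, facility_mw):
    """US gallons of water per MWh, assuming a constant load of facility_mw all day."""
    return gallons_per_day / (facility_mw * 24.0)

for gallons in (70_000, 200_000):
    per_mwh = water_per_mwh(gallons, 8.0)
    print(f"{gallons:,} gal/day -> {per_mwh:.0f} gal/MWh ({per_mwh * GALLONS_TO_LITERS:.0f} L/MWh)")
```

For the range quoted above, this works out to roughly 365 to 1,042 gallons per MWh.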

The relative power costs of cooling equipment to IT equipment in a typical datacenter [Barroso and Hölzle 2009] are as follows:

■ Chillers account for 30% to 50% of the IT equipment power.

■ CRAC accounts for 10% to 20% of the IT equipment power, due mostly to fans.
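These ratios translate directly into a range of cooling power for a given IT load. The following is a minimal sketch. It assumes that the chiller and CRAC shares listed above scale linearly with IT power, and it ignores power-distribution losses. The 1 MW IT load is hypothetical.

```python
# Cooling power as a fraction of IT equipment power [Barroso and Hölzle 2009].
CHILLER_FRACTION = (0.30, 0.50)
CRAC_FRACTION = (0.10, 0.20)

def cooling_power_kw(it_kw):
    """Return (low, high) estimates of chiller plus CRAC power, in kW, for an IT load in kW."""
    low = it_kw * (CHILLER_FRACTION[0] + CRAC_FRACTION[0])
    high = it_kw * (CHILLER_FRACTION[1] + CRAC_FRACTION[1])
    return low, high

low_kw, high_kw = cooling_power_kw(1000.0)  # hypothetical 1 MW of IT equipment
print(f"cooling: {low_kw:.0f} to {high_kw:.0f} kW")  # cooling: 400 to 700 kW
```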

Surprisingly, it is not obvious how many servers a WSC can support after you subtract the overheads for power distribution and cooling. The so-called
nameplate power rat-
