Hardware Reference

In-Depth Information

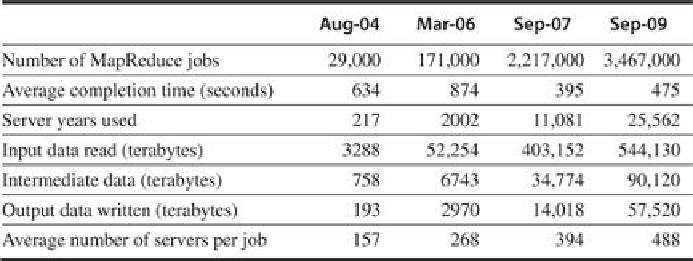

FIGURE 6.2

Annual MapReduce usage at Google over time

. Over five years the number

of MapReduce jobs increased by a factor of 100 and the average number of servers per job

increased by a factor of 3. In the last two years the increases were factors of 1.6 and 1.2, re-

spectively [

Dean 2009

]

.

Figure 6.16

on page 459 estimates that running the 2009 workload on

Amazon's cloud computing service EC2 would cost $133M.

For example, one MapReduce program calculates the number of occurrences of every Eng-

lish word in a large collection of documents. Below is a simplified version of that program,

which shows just the inner loop and assumes just one occurrence of all English words found

in a document [

Dean and Ghemawat 2008

]

:

map(String key, String value):

// key: document name

// value: document contents

for each word w in value:

EmitIntermediate(w, “1”); // Produce list of all words

reduce(String key, Iterator values):

// key: a word

// values: a list of counts

int result = 0;

for each v in values:

result += ParseInt(v); // get integer from key-value pair

Emit(AsString(result));

The function

EmitIntermediate

used in the Map function emits each word in the document and

the value one. Then the Reduce function sums all the values per word for each document us-

ing ParseInt() to get the number of occurrences per word in all documents. The MapReduce

runtime environment schedules map tasks and reduce task to the nodes of a WSC. (The com-

plete version of the program is found in

Dean and Ghemawat [2004]

.

)

MapReduce can be thought of as a generalization of the single-instruction, multiple-data

is followed by a function that is used in a reduction of the output from the Map task. Because

reductions are commonplace even in SIMD programs, SIMD hardware often offers special op-

erations for them. For example, Intel's recent AVX SIMD instructions include “horizontal” in-

structions that add pairs of operands that are adjacent in registers.

To accommodate variability in performance from thousands of computers, the MapReduce

scheduler assigns new tasks based on how quickly nodes complete prior tasks. Obviously, a

single slow task can hold up completion of a large MapReduce job. In a WSC, the solution to

Search WWH ::

Custom Search