Hardware Reference


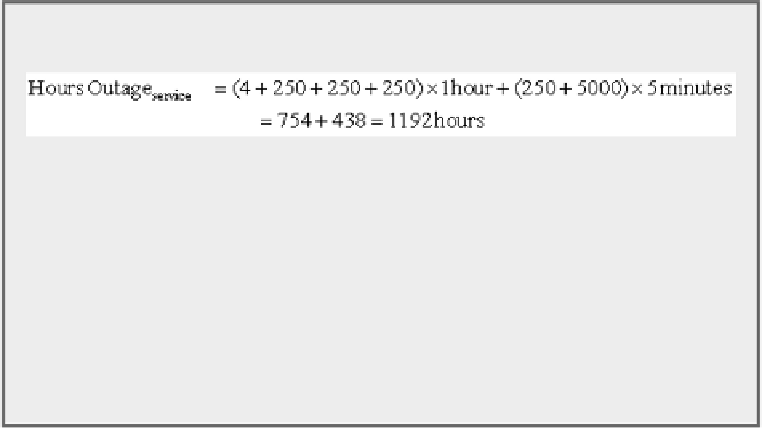

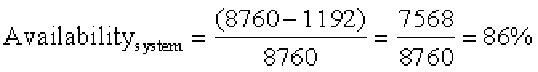

Since there are 365 × 24 or 8760 hours in a year, availability is:

That is, without software redundancy to mask the many outages, a service on those 2400 servers would be down on average one day a week, or zero nines of availability!
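The availability arithmetic can be sketched in a few lines of Python. The outage total from the original calculation is not reproduced in this excerpt, so the sketch assumes the "one day a week" figure quoted in the text as the downtime. The helper names `availability` and `nines` are illustrative, not taken from the source.

```python
import math

HOURS_PER_YEAR = 365 * 24  # 8760 hours, as stated in the text


def availability(outage_hours, total_hours=HOURS_PER_YEAR):
    """Fraction of the period during which the service is up."""
    return (total_hours - outage_hours) / total_hours


def nines(avail):
    """Count whole 'nines' of availability: 0.9 -> 1, 0.99 -> 2, 0.999 -> 3."""
    if avail >= 1.0:
        return math.inf
    # The small epsilon guards against floating-point error at exact boundaries.
    return max(0, math.floor(-math.log10(1.0 - avail) + 1e-9))


# Assumption: downtime of one day in every seven, per the text's summary.
outage_hours = HOURS_PER_YEAR / 7
a = availability(outage_hours)

print(f"outage hours per year: {outage_hours:.0f}")
print(f"availability: {a:.1%}")
print(f"nines of availability: {nines(a)}")
```

Under this assumption the service is up about six sevenths of the time, which falls short of 90% and therefore counts as zero nines.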

As Section 6.10 explains, the forerunners of WSCs are computer clusters. Clusters are collections of independent computers that are connected together using standard local area networks (LANs) and off-the-shelf switches. For workloads that did not require intensive communication, clusters offered much more cost-effective computing than shared memory multiprocessors. (Shared memory multiprocessors were the forerunners of the multicore computers [...]) [...] then later for Internet services. One view of WSCs is that they are just the logical evolution from clusters of hundreds of servers to tens of thousands of servers today.

A natural question is whether WSCs are similar to modern clusters for high-performance computing (HPC). Although some have similar scale and cost (there are HPC designs with a million processors that cost hundreds of millions of dollars), HPC clusters generally have much faster processors and much faster networks between the nodes than are found in WSCs because the HPC applications are more interdependent and communicate more frequently (see Section [...]). [...] don't get the cost benefits from using commodity chips. For example, the IBM Power 7 microprocessor alone can cost more and use more power than an entire server node in a Google WSC. The HPC programming environment also emphasizes thread-level parallelism or data-level parallelism (see Chapters 4 and 5), typically emphasizing latency to complete a single task as opposed to bandwidth to complete many independent tasks via request-level parallelism. The HPC clusters also tend to have long-running jobs that keep the servers fully utilized, even for weeks at a time, while the utilization of servers in WSCs ranges between 10% and 50% (see Figure 6.3 on page 440) and varies every day.

How do WSCs compare to conventional datacenters? The operators of a conventional datacenter generally collect machines and third-party software from many parts of an organization and run them centrally for others. Their main focus tends to be consolidation of the many services onto fewer machines, which are isolated from each other to protect sensitive information. Hence, virtual machines are increasingly important in datacenters. Unlike WSCs, conventional datacenters tend to have a great deal of hardware and software heterogeneity to serve their
