Figures B.8 and B.9 on pages B-24 and B-25 show the relative frequency of cache misses broken down by the three Cs. As we will see in Chapters 3 and 5, multithreading and multiple cores add complications for caches, both increasing the potential for capacity misses and adding a fourth C, for coherency misses due to cache flushes to keep multiple caches coherent in a multiprocessor; we will consider these issues in Chapter 5.
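The three Cs are usually measured by simulation rather than by inspection. The Python below is a minimal sketch of one common approach, not code from Appendix B. Each miss is classified as it happens: it is compulsory if the block has never been referenced, a capacity miss if it would also miss in a fully associative LRU cache of the same total size, and a conflict miss otherwise. The function name `classify_misses`, the LRU replacement policy, and the trace format (a list of block addresses) are hypothetical choices made for illustration.

```python
from collections import OrderedDict


def classify_misses(trace, num_blocks, ways):
    """Classify misses of a set-associative LRU cache into the three Cs.

    Hypothetical helper. trace is a sequence of block addresses
    (byte address // block size); num_blocks is the total capacity in
    blocks; ways is the associativity (num_blocks divisible by ways).
    """
    num_sets = num_blocks // ways
    sets = [OrderedDict() for _ in range(num_sets)]  # the real cache, LRU per set
    full = OrderedDict()  # fully associative LRU cache of the same capacity
    seen = set()          # blocks referenced at least once
    counts = {"compulsory": 0, "capacity": 0, "conflict": 0, "hits": 0}

    for block in trace:
        # Reference model: fully associative, same number of blocks.
        full_hit = block in full
        if full_hit:
            full.move_to_end(block)          # mark as most recently used
        else:
            if len(full) == num_blocks:
                full.popitem(last=False)     # evict least recently used
            full[block] = True

        # Real cache: the block can live only in one set.
        s = sets[block % num_sets]
        if block in s:
            s.move_to_end(block)
            counts["hits"] += 1
        else:
            if len(s) == ways:
                s.popitem(last=False)
            s[block] = True
            if block not in seen:
                counts["compulsory"] += 1    # first reference ever
            elif not full_hit:
                counts["capacity"] += 1      # misses even with full associativity
            else:
                counts["conflict"] += 1      # caused only by set mapping
        seen.add(block)
    return counts
```

For example, in a two-block direct-mapped cache, blocks 0 and 2 map to the same set, so the trace `[0, 2, 0, 2]` yields two compulsory misses followed by two conflict misses; a fully associative cache of the same size would have hit on the last two references.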

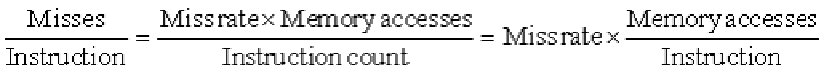

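Alas, miss rate can be a misleading measure for several reasons. Hence, some designers prefer measuring misses per instruction rather than misses per memory reference (miss rate). These two are related:

$$\frac{\text{Misses}}{\text{Instruction}} = \frac{\text{Miss rate} \times \text{Memory accesses}}{\text{Instruction count}} = \text{Miss rate} \times \frac{\text{Memory accesses}}{\text{Instruction}}$$

(It is often reported as misses per 1000 instructions to use integers instead of fractions.)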
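Misses per instruction equals the miss rate times the number of memory accesses per instruction, and scaling by 1000 gives the integer-friendly form. A minimal sketch of the conversion follows; the function names and the sample counts are hypothetical.

```python
def misses_per_instruction(miss_rate, memory_accesses, instruction_count):
    """Misses/instruction = miss rate x memory accesses / instruction count."""
    return miss_rate * memory_accesses / instruction_count


def misses_per_1000_instructions(miss_rate, memory_accesses, instruction_count):
    """The same quantity scaled by 1000, as it is often reported."""
    return 1000 * misses_per_instruction(miss_rate, memory_accesses, instruction_count)


# Hypothetical program: 1,000,000 instructions making 1,500,000 memory
# accesses (1.5 per instruction) with a 2% miss rate.
mpki = misses_per_1000_instructions(0.02, 1_500_000, 1_000_000)
```

With these made-up numbers the program incurs about 0.03 misses per instruction, or roughly 30 misses per 1000 instructions.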

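The problem with both measures is that they don't factor in the cost of a miss. A better measure is the average memory access time:

$$\text{Average memory access time} = \text{Hit time} + \text{Miss rate} \times \text{Miss penalty}$$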

where hit time is the time to hit in the cache and miss penalty is the time to replace the block from memory (that is, the cost of a miss). Average memory access time is still an indirect measure of performance; although it is a better measure than miss rate, it is not a substitute for execution time. In Chapter 3 we will see that speculative processors may execute other instructions during a miss, thereby reducing the effective miss penalty. The use of multithreading (introduced in Chapter 3) also allows a processor to tolerate misses without being forced to idle. As we will examine shortly, to take advantage of such latency-tolerating techniques we need caches that can service requests while handling an outstanding miss.
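Average memory access time is hit time plus miss rate times miss penalty, so it weighs each miss by its cost. The sketch below shows why miss rate alone can mislead: the two cache configurations are hypothetical, and the one with the higher miss rate turns out to have the lower average access time because its misses are cheaper.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time = hit time + miss rate x miss penalty.

    hit_time and miss_penalty must use the same unit (e.g., clock cycles);
    the result is in that unit.
    """
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be a fraction between 0 and 1")
    return hit_time + miss_rate * miss_penalty


# Hypothetical configurations, all times in clock cycles.
cache_a = amat(hit_time=1, miss_rate=0.02, miss_penalty=200)  # lower miss rate
cache_b = amat(hit_time=1, miss_rate=0.04, miss_penalty=50)   # cheaper misses
```

Here cache A averages about 5 cycles per access and cache B about 3, despite B missing twice as often. As the text notes, this is still only a proxy: overlapping misses with useful work shrinks the effective miss penalty, which this formula does not model.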

If this material is new to you, or if this quick review moves too quickly, see Appendix B. It covers the same introductory material in more depth and includes examples of caches from real computers and quantitative evaluations of their effectiveness.

Section B.3 in Appendix B presents six basic cache optimizations, which we quickly review here. The appendix also gives quantitative examples of the benefits of these optimizations. We also comment briefly on the power implications of these trade-offs.

1. Larger block size to reduce miss rate: The simplest way to reduce the miss rate is to take advantage of spatial locality and increase the block size. Larger blocks reduce compulsory misses, but they also increase the miss penalty. Because larger blocks lower the number of tags, they can slightly reduce static power. Larger block sizes can also increase capacity or conflict misses, especially in smaller caches. Choosing the right block size is a complex trade-off that depends on the cache size and the miss penalty.

2. Bigger caches to reduce miss rate: The obvious way to reduce capacity misses is to increase cache capacity. Drawbacks include potentially longer hit time of the larger cache memory and higher cost and power. Larger caches increase both static and dynamic power.

3. Higher associativity to reduce miss rate: Obviously, increasing associativity reduces conflict misses. Greater associativity can come at the cost of increased hit time. As we will see shortly, associativity also increases power consumption.
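Each of these three optimizations changes the cache's geometry, which is one way to see where the tag-count and hit-time effects come from. The sketch below computes that geometry for a power-of-two cache. The 32-bit address width, the one-tag-per-block assumption, and the name `cache_geometry` are hypothetical choices for illustration, and the sketch says nothing about miss rates, which require simulation.

```python
def cache_geometry(capacity_bytes, block_bytes, ways, address_bits=32):
    """Blocks, sets, and address-field widths for a power-of-two cache.

    Hypothetical helper; assumes one tag per block and byte addressing.
    """
    for name, value in (("capacity_bytes", capacity_bytes),
                        ("block_bytes", block_bytes), ("ways", ways)):
        if value <= 0 or value & (value - 1):
            raise ValueError(f"{name} must be a positive power of two")
    num_blocks = capacity_bytes // block_bytes  # equals the number of tags
    if ways > num_blocks:
        raise ValueError("associativity exceeds the number of blocks")
    num_sets = num_blocks // ways
    offset_bits = block_bytes.bit_length() - 1  # log2(block size)
    index_bits = num_sets.bit_length() - 1      # log2(number of sets)
    return {
        "blocks": num_blocks,
        "sets": num_sets,
        "offset_bits": offset_bits,
        "index_bits": index_bits,
        "tag_bits": address_bits - index_bits - offset_bits,
        "tags_compared_per_access": ways,       # parallel tag checks per lookup
    }


base = cache_geometry(32 * 1024, 64, 8)          # 32 KiB, 64-byte blocks, 8-way
bigger_blocks = cache_geometry(32 * 1024, 128, 8)
more_ways = cache_geometry(32 * 1024, 64, 16)
```

Doubling the block size halves the number of blocks and therefore the number of tags (optimization 1); doubling capacity at a fixed block size doubles them (optimization 2); and doubling associativity halves the number of sets, widens each tag by one bit, and doubles the tags compared on every access (optimization 3).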
