Image Processing Reference

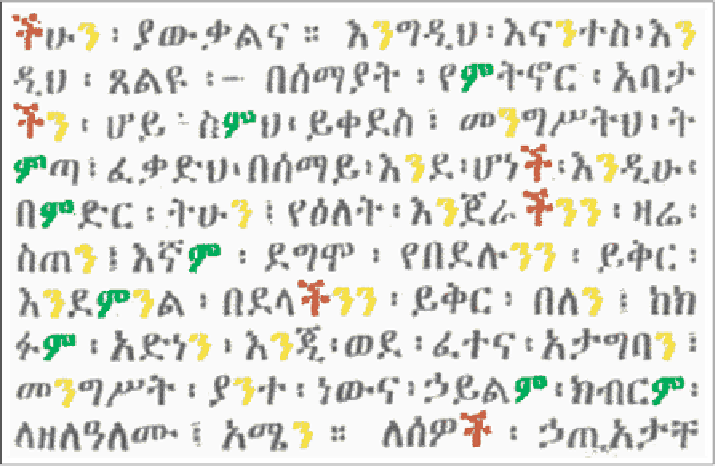

Fig. 11.10. Recognition of characters in Ethiopian text. The colors encode the labels of some identified target characters.

image constancy hypothesis severely. In other words, if the image gray tones representing the same pattern differ nonlinearly and significantly between the reference and the test image, then good precision or convergence may not be achieved.
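The complex moments discussed in the next paragraph can be made concrete with a small numerical sketch. It assumes the common definition $I_{pq} = \sum (x+iy)^p (x-iy)^q f(x,y)$ with the origin at the image center, which the text itself does not spell out. The function name `complex_moment` and the random test image are illustrative only. The sketch checks that rotating the image by 90 degrees leaves the magnitude $|I_{pq}|$ unchanged and changes only the argument, by a multiple of $(p-q)$ times the rotation angle.

```python
import numpy as np

def complex_moment(img, p, q):
    """Discrete complex moment sum((x+iy)^p (x-iy)^q f(x,y)), origin at the image center.

    Assumed definition for illustration; y is the row index (pointing down).
    """
    rows, cols = img.shape
    y, x = np.mgrid[0:rows, 0:cols].astype(float)
    z = (x - (cols - 1) / 2) + 1j * (y - (rows - 1) / 2)
    return np.sum(z**p * np.conj(z)**q * img)

# Hypothetical test data: a random gray image and its exact 90-degree rotation.
rng = np.random.default_rng(0)
img = rng.random((32, 32))
rot = np.rot90(img)

for p, q in [(1, 0), (2, 0), (2, 1), (3, 1)]:
    m, m_rot = complex_moment(img, p, q), complex_moment(rot, p, q)
    # The magnitude is the rotation-invariant "evidence".
    same_magnitude = np.isclose(abs(m), abs(m_rot))
    # With this coordinate convention, np.rot90 multiplies I_pq by (-i)^(p-q),
    # so the rotation angle shows up only in the argument.
    phase_factor = m_rot / m
    print(p, q, same_magnitude, np.round(phase_factor, 6))
```

A 90-degree rotation is used because `np.rot90` maps the pixel grid onto itself exactly. For arbitrary angles, interpolation would make the invariance hold only approximately.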

An early exception to the “rotate and correlate” approach is the pattern recognition school initiated by Hu [112], who suggested computing moment-invariant signatures on the original image, which was assumed to be real-valued. Later, Reddi [188] suggested using the magnitudes of complex moments to implement the moment invariants of the spatial image efficiently, mechanizing their derivation. The complex moments contain the rotation angle information directly encoded in their arguments, as was shown in [25]. An advantage they offer is a simple separation of the direction parameter from the model evidence, i.e., by taking the magnitudes of the complex moments one obtains the moment invariants, which represent the evidence. The linear rotation-invariant filters suggested by [51, 228] or the steerable filters [75, 180] are similar to filters implementing complex moments. With appropriate radial weighting functions, the rotation-invariant filters can be viewed as equivalent to Reddi's complex moment filters, which in turn are equivalent to filters implementing Hu's geometric invariants. From this viewpoint, the suggestions of [2, 199] are also related to the computation of complex moments of a gray image, and hence deliver correlates of Hu's geometric invariants. Despite their demonstrated advantage in the context of real images, it is, however, not a trivial matter to model isocurve families embedded in gray images by correlating complex moment filters with gray