Biomedical Engineering Reference

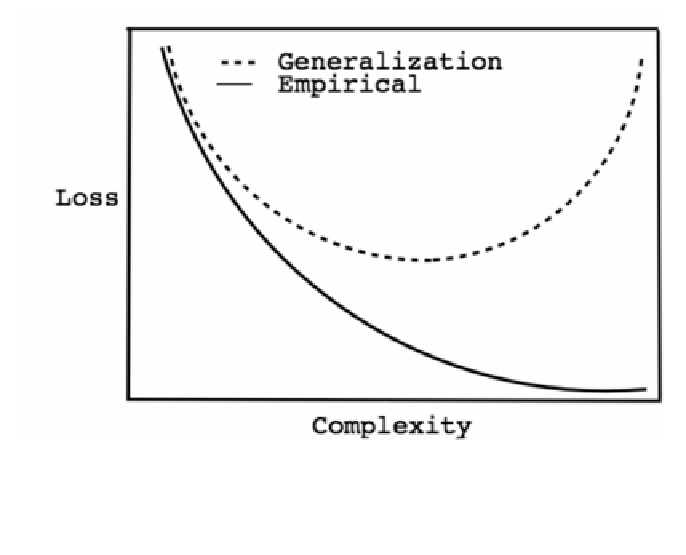

Figure 3. Empirical loss and generalization loss as a function of model complexity.

methods penalize the "roughness" of a model, i.e., some measure of how much the prediction shifts with a small change in either the input or the parameters (26, ch. 10). A smooth function is less flexible, and so has less ability to match meaningless wiggles in the data. Another popular penalty method, the minimum description length principle of Rissanen, will be dealt with in §8.3 below.
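A minimal sketch of a roughness penalty, under strong simplifying assumptions that are not in the text: the model is a line through the origin, f(x) = w·x, so the squared slope w² serves as the measure of how much the prediction shifts with a small change in the input. The function name `penalized_slope`, the weight `lam`, and the data are hypothetical, chosen only for illustration.

```python
def penalized_slope(xs, ys, lam):
    """Slope w minimizing mean((y - w*x)**2) + lam * w**2.

    Setting the derivative with respect to w to zero gives
    w = sum(x*y) / (sum(x*x) + n*lam).
    """
    n = len(xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + n * lam)


# Hypothetical data, roughly on the line y = x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.1, 0.9, 2.2, 2.9]

# lam = 0 is the unpenalized least-squares fit; larger lam buys smoothness.
slopes = [penalized_slope(xs, ys, lam) for lam in (0.0, 0.1, 1.0, 10.0)]
for lam, w in zip((0.0, 0.1, 1.0, 10.0), slopes):
    print(f"lam={lam:5.1f}  slope={w:.3f}")
```

As the penalty weight grows, the fitted slope shrinks toward zero: the function becomes smoother and less able to follow the data, whether the variation in the data is signal or noise.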

Usually, regularization methods are justified by the idea that models can be more or less complex, and more complex ones are more liable to over-fit, all else being equal, so penalty terms should reflect complexity (Figure 3). There's something to this idea, but the usual way of putting it does not really work; see §2.3 below.
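The relationship between complexity and the two kinds of loss can be explored with a small simulation. The sketch below is an illustration under assumed conditions, not the setup behind Figure 3: the data come from a noisy sine curve, the models are piecewise-constant fits, and "complexity" is taken to be the number of bins. All names (`fit_bins`, `mean_sq_loss`, `sample`) are hypothetical.

```python
import math
import random


def fit_bins(xs, ys, k):
    """Least-squares piecewise-constant fit on [0, 1) with k equal-width bins."""
    sums, counts = [0.0] * k, [0] * k
    for x, y in zip(xs, ys):
        b = min(int(x * k), k - 1)
        sums[b] += y
        counts[b] += 1
    overall = sum(ys) / len(ys)  # fallback level for bins with no training points
    return [s / c if c else overall for s, c in zip(sums, counts)]


def mean_sq_loss(levels, xs, ys):
    """Mean squared error of the fitted bin levels on the sample (xs, ys)."""
    k = len(levels)
    total = sum((y - levels[min(int(x * k), k - 1)]) ** 2 for x, y in zip(xs, ys))
    return total / len(xs)


def sample(n, rng, noise_sd=0.3):
    """Draw n points from an assumed process: y = sin(2*pi*x) + Gaussian noise."""
    xs = [rng.random() for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0.0, noise_sd) for x in xs]
    return xs, ys


rng = random.Random(0)
train_x, train_y = sample(40, rng)    # small sample used for fitting
test_x, test_y = sample(2000, rng)    # large fresh sample, a proxy for generalization loss

bin_counts = [1, 2, 4, 8, 16, 32, 64]  # nested partitions of increasing complexity
train_losses, test_losses = [], []
for k in bin_counts:
    levels = fit_bins(train_x, train_y, k)
    train_losses.append(mean_sq_loss(levels, train_x, train_y))
    test_losses.append(mean_sq_loss(levels, test_x, test_y))
    print(f"bins={k:3d}  empirical={train_losses[-1]:.3f}  held-out={test_losses[-1]:.3f}")
```

Because each partition refines the previous one, the empirical loss can only fall as bins are added. The held-out loss carries no such guarantee, and comparing the two columns shows where extra complexity stops helping.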

2.1.3. Capacity Control

Empirical risk minimization, we said, is apt to over-fit because we do not know the generalization errors, just the empirical errors. This would not be such a problem if we could guarantee that the in-sample performance was close to the out-of-sample performance. Even if the exact machine we got this way was not particularly close to the optimal machine, we'd then be guaranteed that our predictions were nearly optimal. We do not even need to guarantee that all the