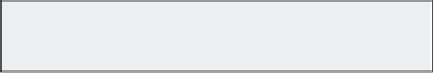

FIGURE 10.6 Big data testing: focus areas. (1) Raw data, pre-process validation (e.g., validation of loading process); (2) Process (job and task) validation (e.g., MapReduce code, functional testing); (3) Data warehouse (e.g., ETL process validation); (4) Data presentation (e.g., reports validation).

1. The first step is preprocess validation, which covers loading the data into a distributed data store. This involves quality checking (QC) the data for correctness, necessary format conversions, time-stamp verification, and so on.

2. The second step starts once the data has been loaded into the data store and is ready for processing. It covers functional testing of the data processing chain (code), such as MapReduce programs and job execution.

3. The third step is to test the processed data when extract, transform, and load (ETL) operations are performed on it. This includes functional testing of the ETL or data warehousing technologies used, such as Pig and Hive.

4. The fourth and final step is to validate and verify the results and reports produced by the ETL processes. This is done using procedures, such as queries, to verify that the required data is being extracted. It is also necessary to check that the resultant data set is properly aggregated and grouped.
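
The four steps above can be illustrated with a small in-memory sketch in plain Python. It is only an illustration under stated assumptions, not a Hadoop, Pig, or Hive test harness: the record layout (user ID, ISO-style time stamp, amount), the sample rows, and the function names `validate_row`, `preprocess`, `totals_by_user`, and `verify_report` are all hypothetical. The sketch runs the step 1 checks (format conversion and time-stamp verification), uses a simple aggregation as a stand-in for the job tested in steps 2 and 3, and then performs a step 4 check that the report is grouped and aggregated consistently with the loaded data.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw records: (user_id, time stamp text, amount text).
RAW_ROWS = [
    ("u1", "2014-03-01T10:00:00", "12.50"),
    ("u2", "2014-03-01T10:05:00", "7.25"),
    ("u1", "not-a-timestamp", "3.00"),      # fails time-stamp verification
    ("u3", "2014-03-01T10:07:00", "abc"),   # fails format conversion
    ("u2", "2014-03-01T10:09:00", "2.75"),
]


def validate_row(row):
    """Step 1: return a cleaned (user_id, datetime, float) tuple, or None."""
    user_id, ts_text, amount_text = row
    if not user_id:
        return None
    try:
        ts = datetime.strptime(ts_text, "%Y-%m-%dT%H:%M:%S")
        amount = float(amount_text)
    except ValueError:
        return None
    return (user_id, ts, amount)


def preprocess(rows):
    """Step 1: split raw rows into accepted (cleaned) and rejected rows."""
    accepted, rejected = [], []
    for row in rows:
        cleaned = validate_row(row)
        if cleaned is None:
            rejected.append(row)
        else:
            accepted.append(cleaned)
    return accepted, rejected


def totals_by_user(clean_rows):
    """Stand-in for the processing/ETL job under test (steps 2 and 3)."""
    totals = defaultdict(float)
    for user_id, _ts, amount in clean_rows:
        totals[user_id] += amount
    return dict(totals)


def verify_report(report, clean_rows):
    """Step 4: check grouping and aggregation against the loaded data."""
    expected_users = {user_id for user_id, _ts, _amount in clean_rows}
    grand_total = sum(amount for _user, _ts, amount in clean_rows)
    same_groups = set(report) == expected_users
    same_total = abs(sum(report.values()) - grand_total) < 1e-9
    return same_groups and same_total
```

Calling `preprocess(RAW_ROWS)` separates the clean rows from the rejected ones, and passing the clean rows through `totals_by_user` and then `verify_report` confirms that every expected group appears in the report and that the grouped totals add up to the overall total. On a real cluster the same checks would typically be expressed as queries against the data store rather than as in-memory loops.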

Creating and Deploying Cloud Services

The following sections take a hands-on approach to creating and deploying cloud services. We explore two options: the Windows Azure platform and Amazon EC2.
