Game Development Reference


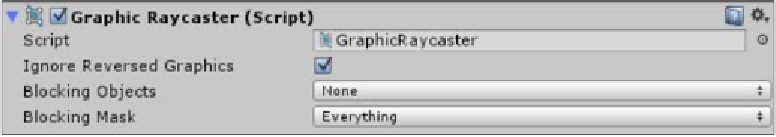

By default, Unity UI adds a Graphic Raycaster (a new graphics-based raycasting component) to a Canvas. It provides a robust and performant raycasting system for the graphical canvas and, as highlighted earlier, enables user interaction with the graphical elements of the UI system.

Unity also provides some other out-of-the-box raycasting components, including:

• Physics Raycaster: This performs raycast tests against 3D collidable objects, such as models/meshes with colliders
• Physics 2D Raycaster: This is the same as the Physics Raycaster but limited to 2D sprites and 2D collidable objects
• Base Raycaster: This is a high-level base implementation to create your own raycast systems
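As a rough illustration of the Physics Raycaster in use, the sketch below makes a 3D object respond to pointer clicks through the event system. The class name `ClickableObject` is hypothetical, and the script assumes the scene already contains an EventSystem and a camera tagged MainCamera; treat it as a starting point rather than the definitive setup.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;

// Hypothetical example component: attach to any 3D object that has a Collider.
[RequireComponent(typeof(Collider))]
public class ClickableObject : MonoBehaviour, IPointerClickHandler
{
    void Awake()
    {
        // Assumption: the main camera is the one that should cast the rays.
        Camera cam = Camera.main;
        if (cam != null && cam.GetComponent<PhysicsRaycaster>() == null)
        {
            // Without a PhysicsRaycaster, 3D colliders receive no pointer events.
            cam.gameObject.AddComponent<PhysicsRaycaster>();
        }
    }

    // Called by the event system when a click lands on this object's collider.
    public void OnPointerClick(PointerEventData eventData)
    {
        Debug.Log(name + " was clicked at screen position " + eventData.position);
    }
}
```

For 2D sprites with 2D colliders, the same pattern should apply with `Physics2DRaycaster` in place of `PhysicsRaycaster`.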

All of these systems rely on an Event Camera, which is used as the source for all raycasts and can be set to any camera in your scene. Which camera you choose will depend on your needs.
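To show how the Event Camera fits into a raycaster, here is a minimal sketch of a custom raycaster derived from `BaseRaycaster`. The class name `SimplePhysicsRaycaster` and the fields `sourceCamera` and `maxDistance` are invented for this example, and the `RaycastResult` member names assume a reasonably recent Unity version (older releases may differ). It only reports the nearest 3D hit, so it is far simpler than the built-in components.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.EventSystems;

// Hypothetical custom raycaster: reports the nearest 3D collider under the pointer.
public class SimplePhysicsRaycaster : BaseRaycaster
{
    [SerializeField] private Camera sourceCamera;      // illustrative field: camera to cast from
    [SerializeField] private float maxDistance = 100f; // illustrative field: ray length limit

    // The event system asks every raycaster which camera its rays originate from.
    public override Camera eventCamera
    {
        get { return sourceCamera != null ? sourceCamera : Camera.main; }
    }

    public override void Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList)
    {
        Camera cam = eventCamera;
        if (cam == null)
        {
            return; // no camera available, so nothing can be hit
        }

        // Convert the pointer's screen position into a world-space ray.
        Ray ray = cam.ScreenPointToRay(eventData.position);
        RaycastHit hit;
        if (Physics.Raycast(ray, out hit, maxDistance))
        {
            resultAppendList.Add(new RaycastResult
            {
                gameObject = hit.collider.gameObject,
                module = this,
                distance = hit.distance,
                index = resultAppendList.Count
            });
        }
    }
}
```

Assigning a different camera to `sourceCamera` in the Inspector changes where the rays start, which is the role the Event Camera plays for the built-in raycasters.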

Input modules

The other input to the Event system is the hardware interaction for touch, mouse, and keyboard inputs. Unity has finally delivered a framework in which these are now abstracted and (more importantly) implemented in a more consistent manner.

For other inputs, you will have to build them yourself or keep an eye on the community for new possibilities.

With this new abstraction, it would be relatively easy to drop in an input module for a Gamepad, Wiimote, or even the Kinect sensor or Leap controller.

Once built, you simply attach it to the Unity EventSystem for the scene and off you go.
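The shape of a custom input module might look like the following sketch, which derives from `BaseInputModule` and sends a submit event to the currently selected object when a key is pressed. The class name `KeySubmitInputModule` and the `submitKey` field are hypothetical, and the code assumes the legacy `Input` class is enabled in the project; a real module for a gamepad or sensor would read from that device's own API instead.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;

// Hypothetical input module: add it to the same GameObject as the EventSystem.
public class KeySubmitInputModule : BaseInputModule
{
    [SerializeField] private KeyCode submitKey = KeyCode.Space; // illustrative setting

    // The event system calls Process on the active input module each frame.
    public override void Process()
    {
        GameObject selected = eventSystem.currentSelectedGameObject;
        if (selected == null)
        {
            return; // nothing is selected, so there is no target for the event
        }

        // Assumption: the legacy Input Manager is active in this project.
        if (Input.GetKeyDown(submitKey))
        {
            // Route a submit event to whichever handler the selected object implements.
            ExecuteEvents.Execute(selected, GetBaseEventData(), ExecuteEvents.submitHandler);
        }
    }
}
```

Only one input module drives the event system at a time, so this sketch is most useful when it is the module the EventSystem activates.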