
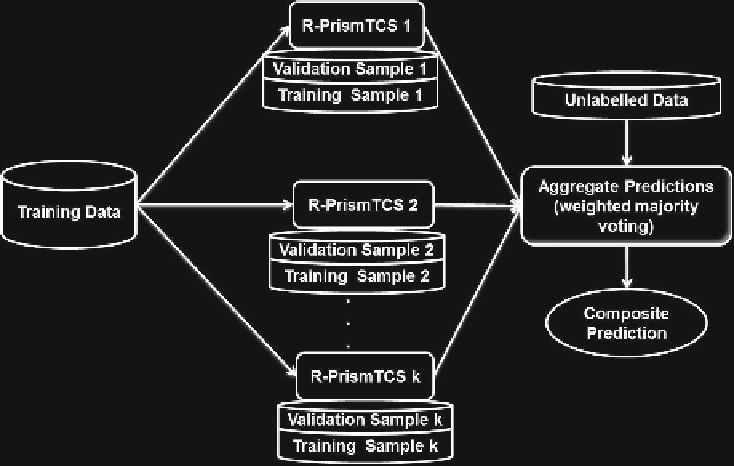

Fig. 1. The architecture of the Random Prism ensemble classifier.

Prism's classification accuracy is superior to that of PrismTCS. Furthermore, results published recently in [20] show that Random Prism's advantage is most pronounced when both the training and the test data contain noise; under these conditions Random Prism clearly outperforms PrismTCS [20].

However, this paper is more concerned with the scalability of Random Prism to large datasets. One would expect the runtime of Random Prism inducing 100 base classifiers to be approximately 100 times that of PrismTCS, as Random Prism induces each base classifier on a bag of size N, where N is the total number of training instances. Yet, according to the results published in [20], this is not the case. The reason is the random component in R-PrismTCS, which considers only a random subset of the total feature space for the induction of each rule term. Thus the workload of each R-PrismTCS classifier for evaluating candidate features during rule term generation is reduced by the number of features not considered for each induced rule term.
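The two sources of cost discussed above, one bag of size N per base classifier and a random feature subset per rule term, can be illustrated with the minimal Python sketch below. It is not the authors' implementation: `bootstrap_bag`, `random_feature_subset` and `candidate_evaluations` are hypothetical helpers, the subset size is passed in as a parameter (how R-PrismTCS actually chooses it is an assumption left open here), and the evaluation count is a simplified per-term tally that ignores differences in the number of rule terms each classifier induces.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def bootstrap_bag(data: Sequence[T], rng: random.Random) -> List[T]:
    """Draw a bag of size N = len(data) by sampling with replacement."""
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]


def random_feature_subset(num_features: int, subset_size: int,
                          rng: random.Random) -> List[int]:
    """Pick `subset_size` distinct feature indices out of `num_features`.

    Hypothetical helper: these are the only candidate features examined
    when inducing one rule term (the choice of subset size is assumed).
    """
    return sorted(rng.sample(range(num_features), subset_size))


def candidate_evaluations(num_terms: int, num_features: int,
                          subset_size: int) -> Tuple[int, int]:
    """Simplified count of feature evaluations for `num_terms` rule terms:
    (every feature is a candidate, only a random subset is a candidate)."""
    return num_terms * num_features, num_terms * subset_size


if __name__ == "__main__":
    rng = random.Random(42)
    training = list(range(10))      # stand-in for N = 10 training instances
    k = 100                         # number of base classifiers
    bags = [bootstrap_bag(training, rng) for _ in range(k)]
    print(sum(len(b) for b in bags))         # 1000, i.e. k * N instances in total
    print(random_feature_subset(8, 3, rng))  # 3 distinct indices in [0, 8)
    print(candidate_evaluations(50, 8, 3))   # (400, 150)
```

Under these assumptions, considering 3 of 8 features per term cuts the per-term evaluation work from 400 to 150 for 50 terms, which is why the total runtime grows far less than the 100-fold factor suggested by the bag count alone.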

3 The Parallel Random Prism Classifier

This section presents our proposal to scale up the Random Prism ensemble learner by introducing a parallel version of the algorithm. This addresses both its increased CPU time requirements and its increased memory requirements.
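Because each of the k R-PrismTCS classifiers needs its own bag of N instances and is induced independently of the others, the ensemble lends itself to task parallelism. The Python sketch below shows only that generic idea and is not the parallelisation scheme proposed in this paper: `split_classifiers`, `instances_per_worker`, `train_on_worker` and `parallel_ensemble` are hypothetical names, and `train_on_worker` merely draws bags and records their sizes where a real worker would induce rules. `ThreadPoolExecutor` keeps the example simple; for CPU-bound induction in CPython, `ProcessPoolExecutor` or a distributed framework would be needed to use several cores, because of the global interpreter lock.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def split_classifiers(k: int, workers: int) -> List[int]:
    """Spread k base classifiers over `workers` workers as evenly as possible."""
    base, extra = divmod(k, workers)
    return [base + (1 if w < extra else 0) for w in range(workers)]


def instances_per_worker(k: int, workers: int, n: int) -> List[int]:
    """Training instances each worker holds if it keeps all its bags of size n."""
    return [count * n for count in split_classifiers(k, workers)]


def train_on_worker(data: Sequence[int], count: int, seed: int) -> List[int]:
    """Hypothetical stand-in for one worker inducing `count` R-PrismTCS
    classifiers: it draws one bootstrap bag per classifier and records the
    bag size where a real worker would induce rules from that bag."""
    rng = random.Random(seed)
    n = len(data)
    sizes = []
    for _ in range(count):
        bag = [data[rng.randrange(n)] for _ in range(n)]
        sizes.append(len(bag))  # placeholder for rule induction on `bag`
    return sizes


def parallel_ensemble(data: Sequence[int], k: int, workers: int) -> List[int]:
    """Run the workers concurrently and gather one result per base classifier."""
    counts = split_classifiers(k, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(train_on_worker, data, count, seed)
                   for seed, count in enumerate(counts)]
        return [size for f in futures for size in f.result()]


if __name__ == "__main__":
    print(split_classifiers(100, 8))
    # [13, 13, 13, 13, 12, 12, 12, 12]
    print(instances_per_worker(100, 8, 1000))
    # [13000, 13000, 13000, 13000, 12000, 12000, 12000, 12000]
    print(len(parallel_ensemble(list(range(1000)), k=100, workers=8)))  # 100
```

If the workers were separate machines rather than threads in one process, no single machine would have to hold all k * N bagged instances; in this example each worker holds at most 13 * N of the 100 * N total.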

The increased memory requirements arise because k data samples of size N are required, where k is the number of R-PrismTCS classifiers and N is the total number of instances in the original training data. If k is 100, then
