Information Technology Reference

In-Depth Information

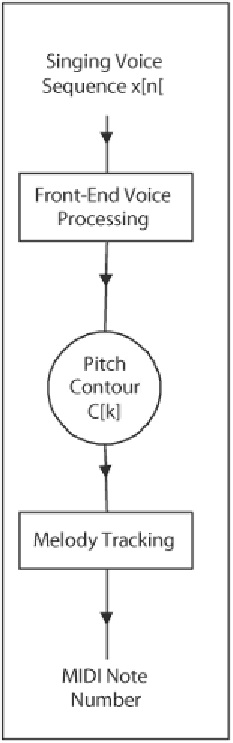

Figure 22. Illustrates the flow of such pitch

The melody tracker converts the pitch contour of a singing voice into a sequence of musical notes. The pitch of a singing voice is usually much less stable than that of musical instruments.

Furthermore, by adding heuristic music-grammar constraints based on music theory to the transcription process, the error rate can be reduced even further.
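The minimal Python sketch below makes the instability problem concrete. It shows the naive baseline that an adaptive method has to improve on:

- each frame of a pitch contour is mapped to a fractional MIDI note number with the standard equal-temperament formula n = 69 + 12 log2(f / 440 Hz);
- each value is rounded to the nearest semitone;
- runs of identical notes are merged.

The function names and the `min_frames` jitter threshold are hypothetical. This is neither the Adaptive Round Semitones (ARS) algorithm of Wang, Lyu, and Chiang (2003) nor their music-grammar constraints.

```python
import math


def hz_to_midi(freq_hz: float) -> float:
    """Fractional MIDI note number: A4 = 440 Hz is note 69, 12 semitones per octave."""
    return 69.0 + 12.0 * math.log2(freq_hz / 440.0)


def contour_to_notes(contour_hz, min_frames=3):
    """Turn a frame-wise pitch contour (Hz; 0 marks unvoiced frames) into notes.

    Each voiced frame is rounded to the nearest semitone, consecutive frames
    with the same rounded value are merged, and runs shorter than
    `min_frames` are dropped as pitch jitter. Returns (midi_note, start, length)
    tuples, with start and length measured in frames.
    """
    notes = []
    current, start = None, 0
    # A trailing unvoiced sentinel frame guarantees the last run is flushed.
    for i, f in enumerate(list(contour_hz) + [0.0]):
        note = round(hz_to_midi(f)) if f > 0 else None
        if note != current:
            if current is not None and i - start >= min_frames:
                notes.append((current, start, i - start))
            current, start = note, i
    return notes


print(contour_to_notes([220.0] * 4 + [0.0] + [246.9] * 5 + [233.0]))
# [(57, 0, 4), (59, 5, 5)]
```

In this example, the single-frame glide to 233 Hz (MIDI 58) is discarded as jitter. Now consider a singer who is consistently about half a semitone off pitch. Plain rounding would make such a singer's notes flip between neighbouring semitones, which is one motivation for making the rounding adaptive.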

Beat/tempo tracking

In this section we describe the beat-tracking and tempo-tracking contests. To define these tasks, we quote Simon Dixon (2001):

The task of beat tracking or tempo following is perhaps best described by analogy to the human activities of foot-tapping or hand-clapping in time with music, tasks of which average human listeners are capable. Despite its apparent intuitiveness and simplicity compared to the rest of music perception, beat tracking has remained a difficult task to define, and still more difficult to implement in an algorithm or computer program.

These algorithms should be able to estimate the tempo and the times of musical beats in expressively performed music. The input data may be either digital audio or a symbolic representation of music such as MIDI (Zoia, 2004).
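For symbolic input such as MIDI, where note onset times are already known, both estimates can be sketched in a few lines of Python. This minimal sketch is an illustration under stated assumptions, not Dixon's algorithm or any published tracker:

- tempo is taken from the most frequent inter-onset interval (IOI);
- beats are placed on a fixed grid aligned with the onsets.

The function names are hypothetical, and so are the 60 to 180 BPM search range, the 10 ms IOI quantisation, and the 50 ms alignment tolerance.

```python
import math
from collections import Counter


def estimate_tempo(onsets, min_bpm=60.0, max_bpm=180.0, resolution=0.01):
    """Estimate tempo in BPM from note-onset times in seconds.

    Inter-onset intervals (IOIs) between all onset pairs up to 2 s apart are
    quantised to `resolution` seconds and counted; the most frequent IOI whose
    tempo lies in [min_bpm, max_bpm] is taken as the beat period.
    """
    onsets = sorted(onsets)
    counts = Counter()
    for i, a in enumerate(onsets):
        for b in onsets[i + 1:]:
            if b - a > 2.0:
                break  # onsets are sorted, so later ones are even farther away
            counts[round((b - a) / resolution)] += 1
    candidates = [(n, q) for q, n in counts.items()
                  if q > 0 and min_bpm <= 60.0 / (q * resolution) <= max_bpm]
    if not candidates:
        return None
    _, best = max(candidates)  # highest count; ties go to the longer interval
    return 60.0 / (best * resolution)


def track_beats(onsets, bpm, tol=0.05):
    """Lay a fixed-period beat grid over the onsets.

    The grid passes through the onset that lines up with the most onsets
    (within +/- `tol` seconds). A fixed grid cannot follow the tempo changes
    of an expressive performance.
    """
    onsets = sorted(onsets)
    if not onsets:
        return []
    period = 60.0 / bpm

    def hits(phase):
        # Count onsets whose distance to the nearest grid line is within tol.
        return sum(1 for t in onsets
                   if abs((t - phase + period / 2) % period - period / 2) <= tol)

    phase = max(onsets, key=hits)
    # Step back to the earliest grid line not before (first onset - tol).
    start = phase - period * math.floor((phase - onsets[0] + tol) / period)
    beats, t = [], start
    while t <= onsets[-1] + tol:
        beats.append(round(t, 6))
        t += period
    return beats


onsets = [0.0, 0.5, 1.0, 1.25, 1.5, 2.0, 2.5, 3.0]
bpm = estimate_tempo(onsets)       # 120.0
print(track_beats(onsets, bpm))    # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
```

The off-beat note at 1.25 s does not shift the grid. The fixed grid is the main simplification: expressively performed music drifts in tempo, so a practical tracker would have to update the period and phase as the performance unfolds.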

Such programs find application in beat-driven real-time computer graphics, computer accompaniment of a human performer, lighting control, and many other tasks. Tempo and beat tracking are also implemented directly in MIR systems, since a song's beat and metronome tempo (in beats per minute, BPM) are among its distinctive features.
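Because tempo is quoted in beats per minute, a tempo value follows directly from tracked beat times: a beat period of T seconds corresponds to 60 / T BPM. The short Python sketch below (the function name is hypothetical) uses the median inter-beat interval rather than the mean, so that a single missed beat does not distort the result.

```python
from statistics import median


def bpm_from_beats(beat_times):
    """Tempo in beats per minute from beat times in seconds.

    The median inter-beat interval is used so that one missed or spurious
    beat does not dominate the estimate.
    """
    if len(beat_times) < 2:
        raise ValueError("need at least two beats")
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    return 60.0 / median(intervals)


# The beat at 2.0 s is missing; the mean interval would give 100 BPM.
print(bpm_from_beats([0.0, 0.5, 1.0, 1.5, 2.5, 3.0]))  # 120.0
```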

These tasks are not restricted to music with drums: the human ear can identify beat and tempo even when a song lacks strong rhythmic accentuation. The tasks are nevertheless harder in music without drums, and tempo- and beat-tracking algorithms are in practice less accurate on such music.
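Statements about lower accuracy presuppose an accuracy measure. A common choice for beat tracking is an F-measure in which an estimated beat counts as correct when it falls within a small tolerance window around an annotated (reference) beat. The sketch below is a simplified illustration:

- the function name is hypothetical;
- the ±70 ms default window is an assumption, although windows of that order are common in beat-tracking evaluation;
- greedy closest-first matching stands in for the optimal one-to-one matching that evaluation toolkits may use.

```python
def beat_f_measure(estimated, reference, window=0.07):
    """F-measure of estimated vs. reference beat times (seconds).

    Each estimated beat, in time order, is matched to the closest reference
    beat that is still unmatched, provided it lies within +/- `window`
    seconds; every reference beat can be matched at most once.
    """
    if not estimated or not reference:
        return 0.0
    unmatched = sorted(reference)
    hits = 0
    for e in sorted(estimated):
        best = min(unmatched, key=lambda r: abs(r - e), default=None)
        if best is not None and abs(best - e) <= window:
            unmatched.remove(best)
            hits += 1
    if hits == 0:
        return 0.0
    precision = hits / len(estimated)
    recall = hits / len(reference)
    return 2 * precision * recall / (precision + recall)


# The beat estimated at 1.6 s is 100 ms off and counts as a miss.
print(beat_f_measure([0.52, 1.03, 1.6, 2.01], [0.5, 1.0, 1.5, 2.0]))  # 0.75
```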

include power spectrum analysis, peak grouping, and harmonic energy estimation.

Wang, Lyu, and Chiang (2003) describe a singing transcription system that can be divided into two modules. The first is a front-end voice-processing module, comprising voice acquisition, end-point detection, and pitch tracking, which processes the raw singing signal and converts it into a pitch contour. The second is a melody-tracking module, which maps the relatively variable pitch level of human singing onto accurate musical notes, represented as MIDI note numbers. The overall system block diagram is shown in Figure 22.
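The front-end module can be illustrated with a minimal Python sketch. It is not Wang, Lyu, and Chiang's implementation:

- end-point detection is reduced to a mean-energy threshold per frame;
- pitch tracking uses the lag of the autocorrelation peak, a standard textbook technique swapped in here for illustration.

All function names are hypothetical, as are the frame length and hop, the 80 to 1000 Hz search range, and the energy threshold.

```python
import math


def frames(x, frame_len, hop):
    """Split a list of samples into overlapping frames."""
    return [x[i:i + frame_len] for i in range(0, len(x) - frame_len + 1, hop)]


def is_voiced(frame, energy_threshold):
    """Crude end-point detection: a frame counts as sung if its mean energy is high enough."""
    return sum(s * s for s in frame) / len(frame) > energy_threshold


def autocorr_pitch(frame, sr, fmin=80.0, fmax=1000.0):
    """Estimate the pitch (Hz) of one frame from its autocorrelation peak.

    Searches lags between sr/fmax and sr/fmin samples and returns sr/lag for
    the lag with the largest autocorrelation (0.0 if none is positive).
    """
    min_lag = max(1, int(sr / fmax))
    max_lag = min(int(sr / fmin), len(frame) - 1)
    best_lag, best_val = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        val = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if val > best_val:
            best_lag, best_val = lag, val
    return sr / best_lag if best_lag else 0.0


def pitch_contour(x, sr, frame_len=1024, hop=512, energy_threshold=1e-3):
    """Frame-wise pitch contour in Hz; 0.0 marks frames judged silent."""
    return [autocorr_pitch(f, sr) if is_voiced(f, energy_threshold) else 0.0
            for f in frames(x, frame_len, hop)]


# Half a second of silence followed by half a second of a 220 Hz tone.
sr = 8000
x = [0.0] * 4000 + [0.5 * math.sin(2 * math.pi * 220 * n / sr) for n in range(4000)]
contour = pitch_contour(x, sr)
print(contour[0], contour[-1])  # 0.0 for the silent frame; roughly 220 Hz at the end
```

The resulting contour, with 0.0 marking silent frames, is the kind of input the melody-tracking module maps to MIDI note numbers. On real singing, plain autocorrelation is prone to octave errors, and its integer-lag resolution is coarse. A practical front end would therefore need refinements such as peak interpolation.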

The melody tracker is based on the Adaptive Round Semitones (ARS) algorithm.
