Information Technology Reference


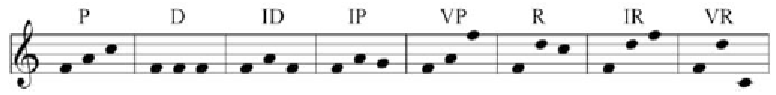

Figure 5. Prototypical Narmour structures

Figure 6. Narmour analysis of All of Me

tify a musician by his or her playing style is: how is this task performed by a music expert? In the case of jazz saxophonists, we believe that most of the cues for interpreter identification come from the timbre, or "quality," of the notes performed by the saxophonist. That is, while timing information is certainly important and useful for identifying a particular musician, most of the information relevant to identifying an interpreter lies in the timbre characteristics of the performed notes. In this respect, the saxophone is similar to the singing voice, where most of the information relevant to identifying a singer is simply the timbre of his or her voice. Thus, the algorithm reported in this chapter for identifying interpreters from their playing style aims to detect patterns of notes based on their timbre content. Roughly, the algorithm consists of three steps: generating a performance alphabet by clustering individual notes that are similar in timbre; inducing, for each interpreter, a classifier that maps a note and its musical context to a symbol in the performance alphabet (i.e., a cluster); and, given an audio fragment, identifying the interpreter as the one whose classifier best predicts the performed fragment.

We are ultimately interested in obtaining a classifier $MC$ that maps melody fragments to particular performers (i.e., to the identified saxophonist). We first segment all the recorded pieces into audio segments representing musical phrases. Given an audio fragment denoted by a list of notes $[N_1,\ldots,N_m]$ and a set of possible interpreters denoted by a list of performers $[P_1,\ldots,P_n]$, classifier $MC$ identifies the interpreter as follows:

    MC([N_1,…,N_m], [P_1,…,P_n])
        for each interpreter P_i
            Score_i = 0
        for each note N_k
            PN_k = perceptual_features(N_k)
            CN_k = contextual_features(N_k)
            (X_1,…,X_q) = cluster_membership(PN_k)
            for each interpreter P_i
                Cluster(i,k) = CL_i(CN_k)
                Score_i = Score_i + X_{Cluster(i,k)}
        return P_M such that Score_M = max(Score_1,…,Score_n)
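The procedure above can be sketched in Python as follows. This is a minimal, illustrative sketch under stated assumptions, not the chapter's implementation: the function names `mc` and `cluster_membership`, the use of the fuzzy c-means membership formula to obtain $(X_1,\ldots,X_q)$ from cluster centroids, and the representation of each trained classifier $CL_i$ as a callable returning a 0-based cluster index are all hypothetical. The chapter does not specify how memberships are computed or how the $CL_i$ are trained, so feature extraction and the classifiers are passed in as callables.

```python
import math
from typing import Callable, Dict, Hashable, List, Sequence, TypeVar

Note = TypeVar("Note")
Vector = Sequence[float]


def cluster_membership(pn: Vector, centroids: Sequence[Vector], m: float = 2.0) -> List[float]:
    """Soft membership (X_1, ..., X_q) of a perceptual-feature vector in each cluster.

    ASSUMPTION: uses the fuzzy c-means membership formula with fuzzifier m;
    the chapter does not say how cluster memberships are computed.
    """
    dists = [math.dist(pn, c) for c in centroids]
    if any(d == 0.0 for d in dists):
        # The note sits exactly on a centroid: give that cluster full membership.
        hits = [1.0 if d == 0.0 else 0.0 for d in dists]
        total_hits = sum(hits)
        return [h / total_hits for h in hits]
    weights = [d ** (-2.0 / (m - 1.0)) for d in dists]
    total = sum(weights)
    return [w / total for w in weights]  # memberships sum to 1


def mc(
    notes: Sequence[Note],
    performers: Sequence[Hashable],
    classifiers: Dict[Hashable, Callable[[object], int]],  # hypothetical CL_i
    perceptual_features: Callable[[Note], Vector],
    contextual_features: Callable[[Note], object],
    centroids: Sequence[Vector],
) -> Hashable:
    """Return the performer whose classifier best predicts the fragment's notes."""
    scores = {p: 0.0 for p in performers}           # Score_i = 0
    for note in notes:                               # for each note N_k
        pn = perceptual_features(note)               # PN_k
        cn = contextual_features(note)               # CN_k
        x = cluster_membership(pn, centroids)        # (X_1, ..., X_q)
        for p in performers:
            predicted = classifiers[p](cn)           # Cluster(i,k) = CL_i(CN_k)
            scores[p] += x[predicted]                # Score_i += X_{Cluster(i,k)}
    return max(performers, key=lambda p: scores[p])  # P_M with the maximal score


if __name__ == "__main__":
    # Toy data (invented for illustration): 2-D "timbre" vectors and pitch as context.
    centroids = [(0.0, 0.0), (1.0, 1.0)]
    notes = [{"timbre": (0.1, 0.0), "pitch": 60}, {"timbre": (0.9, 1.0), "pitch": 72}]
    classifiers = {
        "P1": lambda cn: 0 if cn < 66 else 1,  # predicts the cluster each note falls in
        "P2": lambda cn: 1 if cn < 66 else 0,  # predicts the opposite cluster
    }
    print(mc(notes, ["P1", "P2"], classifiers,
             lambda n: n["timbre"], lambda n: n["pitch"], centroids))  # prints P1
```

In the toy run, each note lies close to one centroid, so it receives a membership near 1 in that cluster. `P1`'s classifier predicts exactly those clusters and accumulates a score close to 2, while `P2` accumulates almost nothing. Using soft memberships rather than a hard cluster label means a prediction that is "nearly right" still earns partial credit.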

That is, for each note $N_k$ in the melody fragment, the classifier $MC$ computes the set of its perceptual features $PN_k$, the set of its contextual features $CN_k$, and, based on the note's perceptual features, the note's membership in each of the clusters ($X_1,\ldots,X_q$ are the memberships for clusters $1,\ldots,q$, respectively). Once this is done, for each interpreter $P_i$, its trained classifier $CL_i(CN_k)$ predicts a cluster representing the expected type of note the interpreter would have played in that musical context. This prediction is based on the note's contextual features. The score $Score_i$ for each interpreter $P_i$ is updated by taking into account the cluster membership of the predicted cluster (i.e., the greater the cluster membership
