Information Technology Reference

In-Depth Information

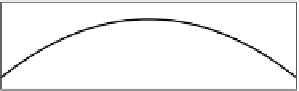

−8.22

−4.82

H

S

H

S

w

0

/w

1

w

0

/w

1

−4.92

−8.23

1.1

1.5

0.4

0.5

−7.604

−11.34

H

S

H

S

w

0

/w

1

w

0

/w

1

−11.44

−7.614

1.3

1.5

0.4

0.5

0.6

(a)

(b)

Fig. 3.14

H

S

(

E

)

of the univariate

tanh

perceptron as a function of

w

0

/w

1

:a)

(

μ

1

,σ

1

)=(3

,

1)

with

w

1

=3

at the top and

w

1

=7

at the bottom; b)

(

μ

1

,σ

1

)=

(1

,

1)

with

w

1

=10

at the top and

w

1

=15

at the bottom.

Using the Nelder-Mead minimization algorithm [165, 132] it is possible to

find the

H

S

-MEE solution [

w

1

0

w

0

]

T

11

.

87]

T

, corresponding

to the vertical line

x

1

=2

.

5, the optimal solution [219]. Fig. 3.15a shows

H

S

(represented by dot size) for a grid around the MEE solution: the central dot

with minimum size. The Nelder-Mead algorithm was unable to find the opti-

mal solution for the close-classes case. The reason is illustrated in Figs. 3.15b

and 3.15c using a grid around the candidate solution. We encounter a mini-

mum along

w

1

and

w

2

, and a maximum along

w

0

. This means that, for close

classes, if one only allows rotations of the optimal line the best MEE solution

is in fact the vertical line. Note that by rotating the optimal line one is in-

creasing the “disorder of the errors”, with increased variance of the

f

E|t

and

increased errors for

both

classes; by Property 2 of Sect. 2.3.4 it is clear that

the vertical line is an entropy minimizer. On the other hand, by shifting the

vertical line along

w

0

one finds a maximum because, when moving away of

w

0

one is increasingly assigning part of the errors to one of the classes and

decreasing them for the other class; one then obtains the effect described by

Property 1 of Sect. 2.3.4 and also seen in Example 3.2. We know already how

empirical entropy solves this effect: using the fat estimate

f

fat

(

e

).

If empirical entropy is considered, all Gaussian-input problems can be

solved with the MEE approach (with either

H

S

or

H

R

2

), be they univariate

or multivariate and with distant or close classes. The error rates obtained for

suciently large

n

are very close to the theoretical ones (details provided in

[219]). Examples for bivariate classes were already presented in the preceding

section. Figure 3.16 shows the evolution of the weights for the

μ

1

=[1 0]

T

close-classes case, in an experiment where the perceptron was trained with

h

=1in

n

= 500 instances and tested in 5000 instances. The final solution

is

w

=[0

.

54 0

.

05

=[4

.

75 0

−

0

.

23]

T

corresponding to

P

ed

=0

.

2880 and

P

et

=0

.

3119

(we mentioned before that min

P

e

=0

.

3085).

−