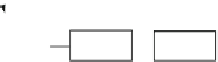

3.3 The Continuous-Output Perceptron

The continuous-output perceptron (also called neuron) is a simple classifier represented diagrammatically in Fig. 3.7.

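[Figure labels: inputs $1, x_{i1}, x_{i2}, \dots, x_{id}$; weights $w_0, w_1, w_2, \dots, w_d$; summation $\Sigma$; activation $\varphi(\cdot)$; output $y_i$; threshold $\theta(\cdot)$ yielding $\theta(y_i)$; Learning Algorithm block with target $t_i$.]

Fig. 3.7 Perceptron diagram (shaded gray) with learning algorithm.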

The device implements the following classifier function family:

$$Z_W = \{\theta(\varphi(\mathbf{w}^T \mathbf{x} + w_0));\ \mathbf{w} \in W \subset \mathbb{R}^d,\ w_0 \in \mathbb{R}\} \tag{3.40}$$

where $\mathbf{w}$ and $w_0$ are the classifier parameters, known in perceptron terminology as weights and bias, $\varphi(\cdot)$ is a continuous activation function, and $\theta(\cdot)$ is the usual classifier thresholding function yielding class codes. In the original proposal by Rosenblatt [190] the perceptron did not have a continuous activation function.

Note that $Z_W$ is sufficiently rich to encompass the function families of linear discriminants and data splitters.
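As a minimal sketch of one member of the family in (3.40), the following Python code computes the continuous output $\varphi(\mathbf{w}^T \mathbf{x} + w_0)$ and then applies a thresholding function $\theta$. The function names, the default choice of tanh as $\varphi$, the cut value 0, the class codes $\{-1, 1\}$, and the handling of ties at the cut are all assumptions made for illustration; they are not prescribed by the text.

```python
import math

def perceptron_output(x, w, w0, phi=math.tanh):
    """Continuous output y = phi(w^T x + w0) for one input vector x."""
    net = sum(wj * xj for wj, xj in zip(w, x)) + w0
    return phi(net)

def classify(x, w, w0, phi=math.tanh, cut=0.0, codes=(-1, 1)):
    """theta(phi(w^T x + w0)): map the continuous output to a class code.

    Assumption: outputs equal to the cut value go to the upper class code.
    """
    y = perceptron_output(x, w, w0, phi)
    return codes[1] if y >= cut else codes[0]

# Hypothetical weights and inputs, chosen only for illustration.
w, w0 = [0.5, -0.25], 0.1
print(classify([1.0, 2.0], w, w0))    # net = 0.1, so tanh(net) > 0
print(classify([-1.0, 2.0], w, w0))   # net = -0.9, so tanh(net) < 0
```

Keeping the continuous output separate from the thresholding step mirrors the diagram, where a learning algorithm can work with $y_i$ rather than with the class code.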

The activation function $\varphi(\cdot)$ is usually some type of squashing function, i.e., a continuous differentiable function such that $\varphi(x) \in [a, b]$, $\lim_{x \to -\infty} \varphi(x) = a$ and $\lim_{x \to +\infty} \varphi(x) = b$. We are mainly interested in strictly monotonically increasing squashing functions, popularly known as sigmoidal (S-shaped) functions, such as the hyperbolic tangent, $y = \tanh(x) = (e^{x} - e^{-x})/(e^{x} + e^{-x})$, or the logistic sigmoid, $y = 1/(1 + e^{-x})$. These are the ones that have been almost exclusively used, but there is no reason not to use other functions, such as the trigonometric arc-tangent function $y = \operatorname{atan}(x)$.
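The squashing-function conditions can be checked numerically. The sketch below is illustrative only: the helper `looks_like_sigmoid`, the grid, and the tolerances are assumptions, and a finite numerical check cannot prove the limits. The limit pairs used are $(-1, 1)$ for tanh, $(0, 1)$ for the logistic sigmoid, and $(-\pi/2, \pi/2)$ for the arc-tangent.

```python
import math

def logistic(x):
    """Logistic sigmoid 1 / (1 + e^{-x}), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def looks_like_sigmoid(phi, a, b, far=1e3, tol=1e-2):
    """Numerically check the squashing conditions on a finite grid.

    Tests that phi is strictly increasing on [-5, 5], stays inside [a, b],
    and is close to a and b far out on the left and right, respectively.
    """
    grid = [k / 10.0 for k in range(-50, 51)]
    values = [phi(x) for x in grid]
    increasing = all(u < v for u, v in zip(values, values[1:]))
    bounded = all(a <= v <= b for v in values)
    tails = abs(phi(-far) - a) < tol and abs(phi(far) - b) < tol
    return increasing and bounded and tails

candidates = {
    "tanh": (math.tanh, -1.0, 1.0),
    "logistic": (logistic, 0.0, 1.0),
    "atan": (math.atan, -math.pi / 2, math.pi / 2),
}

for name, (phi, a, b) in candidates.items():
    print(name, looks_like_sigmoid(phi, a, b))
```

The arc-tangent approaches its limits much more slowly than the other two, which is why the tail tolerance is kept loose.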

The perceptron implements a linear decision border in $d$-dimensional space defined by $\varphi(\mathbf{w}^T \mathbf{x} + w_0) = a$ ($a = 0$ for the $\tanh(\cdot)$ sigmoid with $T = \{-1, 1\}$, and $a = 0.5$ for the logistic sigmoid with $T = \{0, 1\}$). For instance, for