
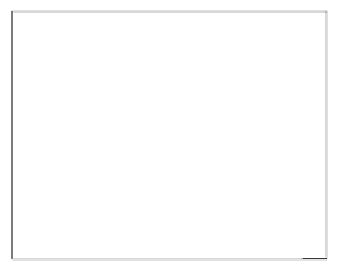

4

4

f(e)

f(e)

3

3

2

2

1

1

e

e

0

0

−4

−2

0

2

4

−4

−2

0

2

4

(a)

(b)

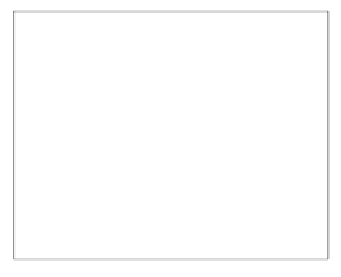

4

4

f(e)

f(e)

3

3

2

2

1

1

e

e

0

0

−4

−2

0

2

4

−4

−2

0

2

4

(c)

(d)

Fig. 3.2 An error PDF a) converging to two Dirac-

δ

functions by gradient ascent

of the information potential with

η

=0

.

01

; b), c) and d), show the error function

at iterations 14, 16, and 17, respectively.

(But not before convergence has been achieved, since the supports of the Gaussians are not disjoint.)
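The caption of Fig. 3.2 describes an error PDF that collapses onto two Dirac-δ functions as the information potential is pushed upwards. A minimal sketch of that mechanism follows. It is built on assumptions of our own, not on the actual setting of Fig. 3.2. The error PDF is taken to be an equal-weight mixture of two Gaussians with fixed means ±1 and a shared width σ. The information potential is the quadratic one, V = ∫ f(e)² de. The ascent runs on log σ with the caption's η = 0.01, so the iteration counts will not match the figure. The closed form of V uses the overlap integral ∫ g(e; μi, σ) g(e; μj, σ) de = g(μi − μj; 0, √2 σ), which follows from the Gaussian convolution result of Proposition 3.1. All function names are hypothetical.

```python
import math


def gauss(x, mu, sigma):
    """Gaussian PDF g(x; mu, sigma); sigma is the standard deviation."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def information_potential(weights, means, sigma):
    """Quadratic information potential V = integral of f(e)^2 de for the
    (assumed) mixture f(e) = sum_i w_i g(e; mu_i, sigma), in closed form.

    Each term uses the Gaussian overlap integral
    integral g(e; mu_i, sigma) g(e; mu_j, sigma) de = g(mu_i - mu_j; 0, sqrt(2) sigma).
    """
    s = math.sqrt(2.0) * sigma
    return sum(wi * wj * gauss(mi - mj, 0.0, s)
               for wi, mi in zip(weights, means)
               for wj, mj in zip(weights, means))


def dV_dsigma(weights, means, sigma):
    """Analytic derivative of V with respect to the shared width sigma."""
    s = math.sqrt(2.0) * sigma
    total = 0.0
    for wi, mi in zip(weights, means):
        for wj, mj in zip(weights, means):
            d = mi - mj
            # d/dsigma g(d; 0, sqrt(2) sigma) = g * (d^2 / (2 sigma^3) - 1 / sigma)
            total += wi * wj * gauss(d, 0.0, s) * (d * d / (2.0 * sigma ** 3) - 1.0 / sigma)
    return total


def ascend(weights, means, sigma0, eta=0.01, sigma_min=1e-3, max_iters=10_000):
    """Gradient ascent of V on u = log(sigma), which keeps sigma positive.

    Returns the (sigma, V) trajectory and stops once sigma < sigma_min,
    which this sketch treats as the Dirac-delta limit.
    """
    u = math.log(sigma0)
    history = []
    for _ in range(max_iters):
        sigma = math.exp(u)
        history.append((sigma, information_potential(weights, means, sigma)))
        if sigma < sigma_min:
            break
        # Chain rule: dV/du = sigma * dV/dsigma.
        u += eta * sigma * dV_dsigma(weights, means, sigma)
    return history


if __name__ == "__main__":
    # Hypothetical error PDF: two equal-weight Gaussians centred at e = -1 and e = +1.
    traj = ascend([0.5, 0.5], [-1.0, 1.0], sigma0=1.0)
    for k in (0, len(traj) // 2, len(traj) - 1):
        sigma, v = traj[k]
        print(f"iteration {k:4d}: sigma = {sigma:.4f}, V = {v:.4f}")
```

For this particular mixture, dV/dσ is negative for every σ > 0. The sketch therefore drives σ monotonically towards zero, that is, towards two spikes at e = ±1. Optimizing log σ rather than σ itself is a design choice. It keeps the width positive even though the gradient grows like 1/σ² as the components sharpen.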

We know that the error PDF parameters are directly related to classifier parameters; we may then expect that the choice of initial values in the parameter space of the classifier may drive the learning algorithm towards distinct theoretical MEE solutions. Example 3.1 also suggests that a large $\sigma_{\text{initial}}$ tends to drive the MEE solution to the desired $\min P_e$ value. We make use of this suggestion in the following example, where we also use the following result:

Proposition 3.1. The convolution of two Gaussian functions is a Gaussian function whose mean is the sum of the means and whose variance is the sum of the variances:

$$g(x;\mu_1,\sigma_1) \otimes g(x;\mu_2,\sigma_2) = g\left(x;\,\mu_1+\mu_2,\,\sqrt{\sigma_1^2+\sigma_2^2}\right) \qquad (3.20)$$

For a proof, see e.g. [15].
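Proposition 3.1 is easy to check numerically. The sketch below evaluates the convolution integral (g1 ⊗ g2)(x) = ∫ g1(t) g2(x − t) dt by the midpoint rule at a few points. It then compares the result with the closed form of (3.20), reading σ as a standard deviation. The parameter values, integration range and function names are arbitrary illustrative choices.

```python
import math


def gauss(x, mu, sigma):
    """Gaussian PDF g(x; mu, sigma) with standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def convolve_at(x, mu1, s1, mu2, s2, lo=-30.0, hi=30.0, n=60_000):
    """(g1 conv g2)(x) = integral g1(t) g2(x - t) dt, by the midpoint rule on [lo, hi]."""
    h = (hi - lo) / n
    total = 0.0
    for k in range(n):
        t = lo + (k + 0.5) * h
        total += gauss(t, mu1, s1) * gauss(x - t, mu2, s2)
    return total * h


def predicted(x, mu1, s1, mu2, s2):
    """Right-hand side of (3.20): the means add and the variances add."""
    return gauss(x, mu1 + mu2, math.sqrt(s1 ** 2 + s2 ** 2))


if __name__ == "__main__":
    mu1, s1, mu2, s2 = -1.0, 0.5, 2.0, 1.2  # arbitrary illustrative values
    for x in (-1.0, 0.0, 1.0, 2.5):
        num = convolve_at(x, mu1, s1, mu2, s2)
        print(f"x = {x:+.1f}: numeric = {num:.8f}, closed form = {predicted(x, mu1, s1, mu2, s2):.8f}")
```

Adding the standard deviations instead, i.e. using g(x; μ1 + μ2, σ1 + σ2), gives a visibly different value. At x = μ1 + μ2 with these parameters, the difference is roughly 0.07.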