Information Technology Reference

In-Depth Information

performance [41, 227]. This way of thinking has been shown in [189] to be

incorrect for the unbounded codomain loss functions used by support vector

machines. The authors have shown that the choice of loss function influences

the convergence rate of the empirical risk towards the true risk, as well as

the upper bound on

R

L

(

Y

w

+

)

R

L

(

Y

w

+

),where

Y

w

+

is the minimizer of the

−

empirical risk

R

L

.

Our interest in what regards classification systems is the probability of

error. We first note that minimization of the risk does not imply minimization

of the probability of error [89, 160] as we illustrate in the following example.

Example 2.6.

Let us assume a two-class problem and a classifier with two

outputs, one for each class:

y

0

,y

1

. Assuming

T

=

and

Y

0

,Y

1

taking

value in [0

,

1], the squared error loss for class

ω

0

instances (

t

0

=1

,t

1

=0)

can be written as

{

0

,

1

}

y

0

)

2

+(

t

1

−

y

1

)

2

=(1

y

0

)

2

+

y

1

.

L

MSE

([

t

0

t

1

]

,

[

y

0

y

1

]) = (

t

0

−

−

(2.48)

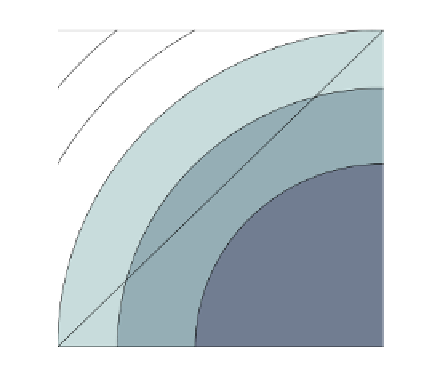

The equal-

L

MSE

contours for class

ω

0

instances are shown in Fig. 2.9. Let

us suppose that the classifier labels the objects in such a way that an object

x

is assigned to class

ω

0

if

y

0

(

x

)

>y

1

(

x

) and to class

ω

1

otherwise. Now

consider two

ω

0

instances

x

1

and

x

2

with outputs as shown in the Fig. 2.9.

We see that the correctly classified

x

2

(

y

0

(

x

2

)

>y

1

(

x

2

)) has higher

L

MSE

than the misclassified

x

1

. For this reason,

L

MSE

is said to be non-monotonic

in the continuum connecting the best classified case (

y

0

=1

,y

1

=0)and

worst classified case (

y

0

=0

,y

1

=1)[89, 160]. A similar result can be

obtained for

L

CE

. This means that it is possible, at least in theory, to train

a classifier that minimizes

L

MSE

and

L

CE

without minimizing the number

of misclassifications [32].

1

y

1

y(x

2

)

y(x

1

)

0.5

y

0

0

0

0.5

1

Fig. 2.9

Contours of equal-

L

MSE

for

ω

0

instances. Darker tones correspond to

smaller values.