Information Technology Reference

In-Depth Information

6

L(e)

4

2

0

e

−2

0

0.5

1

1.5

2

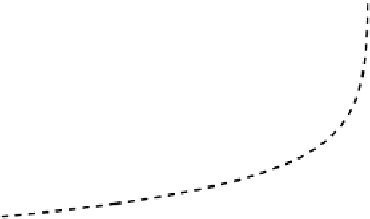

Fig. 2.4

L

MSE

(solid line) and

L

CE

(

for

t

=1)

(dashed line) as functions of

e

.

Thus, for cross-entropy we have a logarithmic loss function,

L

CE

(

t, e

)=

−

te

). Figure 2.4 shows (for

t

=1)the distance functions

L

MSE

(

e

)=

e

2

and

L

CE

(

t, e

)=

ln(2

−

te

). A classifier minimizing the MSE risk functional

is minimizing the second-order moment of the errors, favoring input-output

mappings with low error spread (low variance) and deviation from zero. A

classifier minimizing the CE risk functional is minimizing an average loga-

rithmic distance of the error from its worst value (respectively, 2 for

t

=1

and

−

ln(2

−

1), as shown in Fig. 2.4. As a consequence of the logarithmic

behavior

L

CE

(

t, e

) tends to focus mainly on large errors. Note that one may

have in some cases to restrict

Y

to the open interval ]

−

2 for

t

=

−

−

1

,

1[ in order to satisfy

the integrability condition of (2.31).

Example 2.3.

Let us consider a two-class problem with target set

T

=

{

,P

(0) =

P

(1) = 1

/

2 and a classifier codomain restricted to [0

,

1] ac-

cording to the following family of uniform PDFs:

f

Y

(

y

)=

u

(

y

;0

,d

)

0

,

1

}

if

T

=0

.

(2.32)

u

(

y

;1

−

d,

1) if

T

=1

1

2

u

(

e

;0

,d

)+

2

u

(

e

;

Note that according to (2.22)

f

E

(

e

) is distributed as

−

d,

0) =

u

(

e

;

−

d, d

). Therefore, the MSE risk is simply the variance of this

distribution:

R

MSE

(

d

)=

d

2

3

.

(2.33)

We now compute the cross-entropy risk, which is in this case easier to derive

from

f

Y |t

. First note that (2.28) is

n

times the empirical estimate of

R

CE

(

d

)=

−

P

(0)

E

[ln(1

−

Y

)

|

T

=0]

−

P

(1)

E

[ln(

Y

)

|

T

=1]

.

(2.34)