
Classifier

z

w

Data population

X × T

x

i

w

i.i.d. sampler

z

i

=

z

w

(

x

i

)

Dataset

Learning

algorithm

(

X

ds

,T

ds

)

t

i

=

t

(

x

i

)

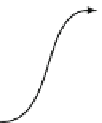

Fig. 1.1 A classification system implementing

z

w

with

w

supplied by a learning

algorithm.

induction task by picking a general classification rule $z_w$ based on some particular training set $(X_{ds}, T_{ds})$. It accomplishes the induction task by reflecting through $w$ the matching (or mismatching) rate between classifier outputs, $z_i = z_w(x_i)$, and targets, $t_i = t(x_i)$, for all instances of $(X_{ds}, T_{ds})$. The assumption mentioned earlier, that the training set is obtained by i.i.d. sampling, is easy to understand: any violation of this basic assumption will necessarily bias the inductive learning process.
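The induction step can be illustrated with a minimal sketch. Everything specific below is an assumption and does not come from the text. Targets are coded as $\pm 1$. The population is a hypothetical one-dimensional one with equal priors and $X \mid T=t \sim N(t,1)$. The classifier is a threshold rule, $z_w(x) = +1$ if $x \ge w$ and $-1$ otherwise. The "learning algorithm" simply picks, among the sample points, the $w$ with the lowest mismatching rate on $(X_{ds}, T_{ds})$. The helper names (`sample_dataset`, `empirical_error`, `learn_w`) are illustrative only.

```python
import random

# Hypothetical illustration only: targets coded as -1/+1, 1-D threshold classifier.
def z_w(x, w):
    """Classifier z_w: output +1 if x >= w, else -1."""
    return 1 if x >= w else -1

def sample_dataset(n, rng):
    """i.i.d. sampler from an assumed population: P(T=-1)=P(T=+1)=1/2, X|T=t ~ N(t, 1)."""
    X_ds, T_ds = [], []
    for _ in range(n):
        t = rng.choice([-1, 1])
        X_ds.append(rng.gauss(t, 1.0))
        T_ds.append(t)
    return X_ds, T_ds

def empirical_error(w, X_ds, T_ds):
    """Mismatching rate between outputs z_i = z_w(x_i) and targets t_i over the dataset."""
    mismatches = sum(1 for x, t in zip(X_ds, T_ds) if z_w(x, w) != t)
    return mismatches / len(X_ds)

def learn_w(X_ds, T_ds):
    """Hypothetical learning algorithm: pick the sample point w with the lowest training error."""
    return min(X_ds, key=lambda w: empirical_error(w, X_ds, T_ds))

rng = random.Random(0)
X_ds, T_ds = sample_dataset(500, rng)
w = learn_w(X_ds, T_ds)
train_error = empirical_error(w, X_ds, T_ds)
print(f"w = {w:.3f}, training error rate = {train_error:.3f}")
```

Searching only over the sample points suffices for a threshold rule. Any $w$ lying between two consecutive sample values produces the same outputs on the training set as the upper of those two values.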

Let us assume that the training algorithm produced $w$ and, therefore, the classifier implements $z_w$. The probability of error ($P_e$) of this classifier is:

$$P_e(Z_w) = E_{X,T}\left[t(X) \neq z_w(X)\right], \tag{1.1}$$

which can also be expressed as

$$P_e(Z_w) = E_{X,T}\left[L(t(X), z_w(X))\right] = \int_{X \times T} L(t(x), z_w(x))\, dF_{X,T}(x,t) \tag{1.2}$$

with

$$L(t(x), z_w(x)) = \begin{cases} 0, & t(x) = z_w(x) \\ 1, & t(x) \neq z_w(x) \end{cases}. \tag{1.3}$$

Formula (1.2) expresses $P_e$ as the expected value over $X \times T$ of the function $L$, called the loss function (also called cost or error function). For a given $t(X)$, the probability of error depends on the classifier output r.v. $Z_w = z_w(X)$. The expectation is computed with the cumulative distribution function $F_{X,T}(x,t)$ of the joint distribution of the random variables $X$ and $T$, which represent the classifier inputs and the targets. The above loss function is simply an indicator function of the misclassified instances: $L(\cdot) = \mathbb{1}_{\{t \neq z_w\}}(\cdot)$.

There are variations on the probability of error formulation that weight errors differently according to the class membership of the instances. We will not pay attention to these variations.

Similar formulations of the learning task can be presented if one is dealing with regression or PDF estimation problems. In other words, one can present a general formulation that encompasses all three learning problems. In this