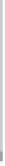

[Figure: three panels, $c = 2$, $c = 3$, and $c = 4$, each plotting $h$ (vertical axis, 0 to 15) against $n$ (horizontal axis, 0 to 500).]

Fig. 6.6 Value of $h_{\mathrm{opt}}$ (dashed line) and $h_{\mathrm{fat}}$ (solid line) for $c = 2$, 3, and 4. (Marked instances refer to experiments with datasets summarized in Table 6.2; see text.)

Figure 6.6 shows the $h$ values obtained in these experiments (Table 6.2), with open circles for the "Best $h$" and crosses for the "Suggested $h$". The $h_{\mathrm{fat}}$ values proposed by formula (6.8) closely follow these values.

One could consider making $h$ a variable that is updated along the training process, specifically, proportional to the error variance. The motivation is that, as training approaches the optimal solution, the errors tend to concentrate, so it makes sense to decrease $h$ to match the correspondingly smaller data range. However, devising an empirical update formula that does not lead to instability in the vicinity of the minimum error turns out to be tricky, given the wild variations that a small $h$ value produces in the gradient calculations.
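To see why a small $h$ makes the gradient erratic, the sketch below computes the gradient of an entropy estimate with respect to each error. It assumes the Rényi quadratic entropy $H = -\log V$ with a Gaussian Parzen window, where $V = \frac{1}{n^2}\sum_i\sum_j G(e_i - e_j; \sigma)$ and $\sigma = \sqrt{2}\,h$; the passage does not say which entropy estimator underlies the experiments, so this choice, the function names, and the error values are illustrative assumptions. Differentiating gives $\partial V/\partial e_k = -\frac{2}{n^2}\sum_j \frac{e_k - e_j}{\sigma^2}\,G(e_k - e_j; \sigma)$. For error pairs whose difference is comparable to $h$, each term grows roughly like $1/h^2$, while pairs much farther apart than $h$ contribute almost nothing, so with a small $h$ the gradient is dominated by a few close pairs.

```python
import math


def gaussian(u, sigma):
    """Gaussian kernel G(u; sigma)."""
    return math.exp(-u * u / (2.0 * sigma * sigma)) / (math.sqrt(2.0 * math.pi) * sigma)


def information_potential(errors, h):
    """Parzen-window estimate V = (1/n^2) sum_i sum_j G(e_i - e_j; sqrt(2) h)."""
    n = len(errors)
    sigma = math.sqrt(2.0) * h  # convolving two kernels of width h gives width sqrt(2) h
    return sum(gaussian(ei - ej, sigma) for ei in errors for ej in errors) / (n * n)


def renyi_quadratic_entropy(errors, h):
    """Renyi quadratic entropy estimate H = -log V."""
    return -math.log(information_potential(errors, h))


def entropy_gradient(errors, h):
    """Analytic dH/de_k for every error e_k."""
    n = len(errors)
    sigma = math.sqrt(2.0) * h
    v = information_potential(errors, h)
    grad = []
    for ek in errors:
        # dV/de_k = -(2/n^2) * sum_j (e_k - e_j) / sigma^2 * G(e_k - e_j; sigma)
        s = sum((ek - ej) / (sigma * sigma) * gaussian(ek - ej, sigma) for ej in errors)
        dv = -2.0 * s / (n * n)
        grad.append(-dv / v)  # dH/de_k = -(dV/de_k) / V
    return grad


if __name__ == "__main__":
    errors = [-0.2, -0.05, 0.0, 0.1, 0.3]  # illustrative values, not from the text
    for h in (1.0, 0.1):
        g = entropy_gradient(errors, h)
        print(h, max(abs(x) for x in g))
```

Running it prints the largest gradient component for $h = 1.0$ and $h = 0.1$; for these illustrative errors the second is much larger, even though the errors themselves are unchanged.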

Figure 6.7 shows an MLP training error curve for the PB12 dataset [110] using an updated $h$ that is simply proportional to the error variance; the value of $h$ itself is also shown. Near 100 epochs, with a training error of 10%, the algorithm becomes unstable and loses the capability to converge.
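A variance-proportional update of this kind can be sketched as a training loop that recomputes $h = k \cdot \mathrm{var}(e)$ at every epoch before taking a gradient step on the error entropy. This is not the MLP used for Figure 6.7: it is a minimal sketch under stated assumptions, with a hypothetical one-weight linear model $y = w x$, the Rényi quadratic entropy with a Gaussian kernel as the risk, and hypothetical names and settings (`train_mee_adaptive_h`, `k`, `lr`, `epochs`). The model has no bias because the entropy of the errors does not change when all errors are shifted by a constant. Whether and when such a loop becomes unstable depends on `k`, `lr`, and the data; the sketch does not reproduce the reported result.

```python
import math


def gaussian(u, sigma):
    """Gaussian kernel G(u; sigma)."""
    return math.exp(-u * u / (2.0 * sigma * sigma)) / (math.sqrt(2.0 * math.pi) * sigma)


def variance(values):
    """Population variance of a list of numbers."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def train_mee_adaptive_h(xs, ts, w0=0.0, k=1.0, lr=0.1, epochs=50):
    """Gradient descent on the Renyi quadratic entropy of e = t - w*x.

    h is reset every epoch to k * var(e) (k is a hypothetical constant).
    Returns the final weight and the per-epoch history of h.
    """
    w = w0
    h_history = []
    n = len(xs)
    for _ in range(epochs):
        errors = [t - w * x for x, t in zip(xs, ts)]
        h = k * variance(errors)  # updated h, proportional to the error variance
        h_history.append(h)
        sigma = math.sqrt(2.0) * h  # kernel width for the pairwise terms
        v = 0.0      # information potential V
        dv_dw = 0.0  # dV/dw
        for i in range(n):
            for j in range(n):
                d = errors[i] - errors[j]
                g = gaussian(d, sigma)
                v += g
                # dG/dd = -d/sigma^2 * g and dd/dw = -(x_i - x_j)
                dv_dw += (d / (sigma * sigma)) * g * (xs[i] - xs[j])
        v /= n * n
        dv_dw /= n * n
        # H = -log V, so dH/dw = -(dV/dw) / V; step downhill on H
        w -= lr * (-dv_dw / v)
    return w, h_history


if __name__ == "__main__":
    xs = [0.0, 0.5, 1.0, 1.5, 2.0]
    ts = [0.1, 0.9, 2.1, 2.9, 4.1]  # illustrative data, not from the text
    w, hs = train_mee_adaptive_h(xs, ts, k=1.0, lr=0.1, epochs=50)
    print(w, hs[0], hs[-1])
```

Printing the whole `h_history` alongside the training error is one way to observe, for a given choice of `k` and `lr`, how $h$ shrinks as the errors concentrate and how the step sizes react.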